
Francesca Sweet, Head of iPhone Product Line, and Jon McCormack, Vice President of Apple Camera Software Engineering, gave a new interview to PetaPixel, offering an overview of Apple’s approach to camera design and development. In essence, the executives explained that Apple sees camera technology as a holistic union of hardware and software.

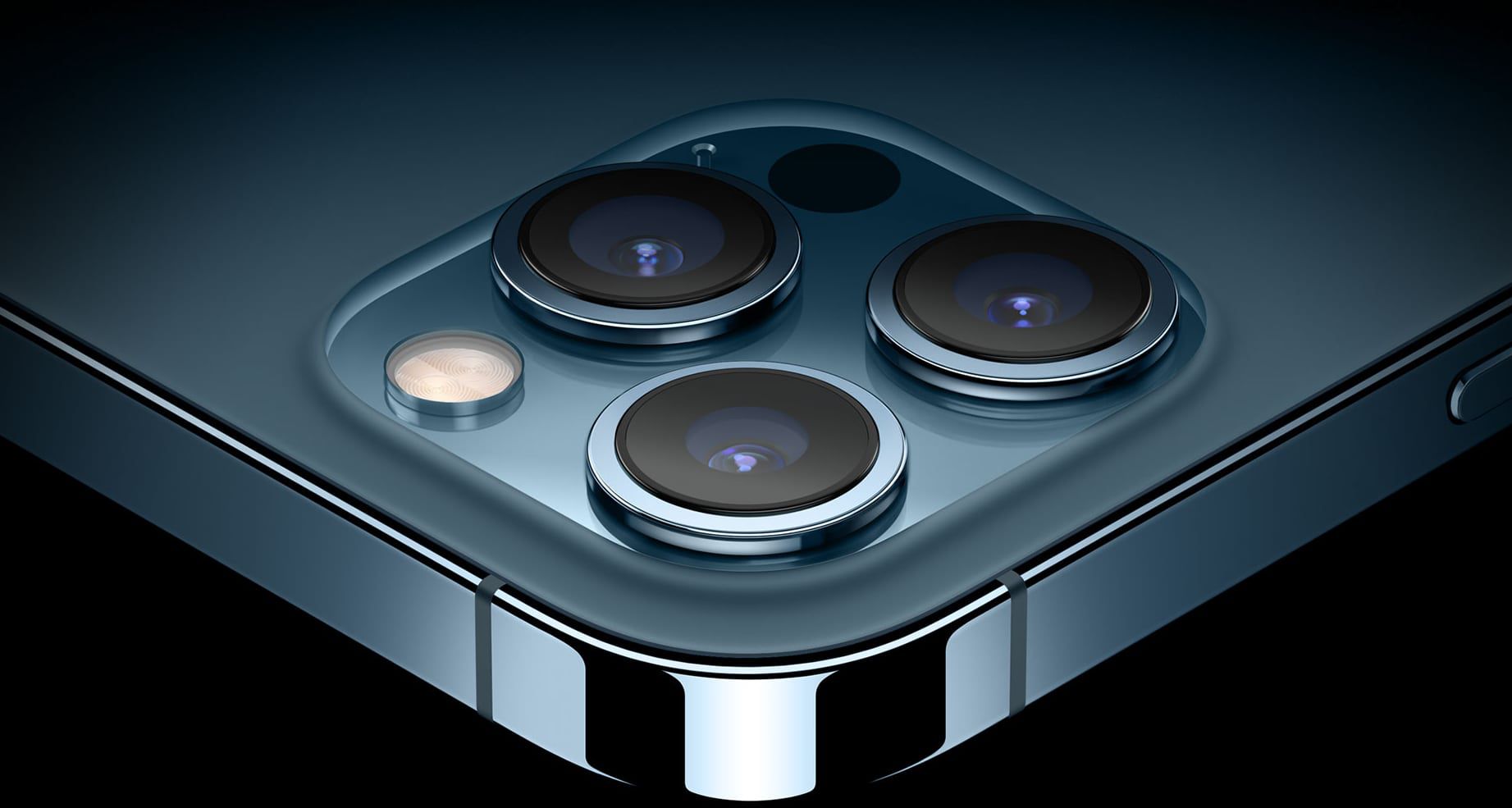


The interview reveals that Apple sees its primary goal for smartphone photography as allowing users to “stay in the moment, take a great photo, and get back to what they’re doing” without being distracted by the technology behind it.

McCormack explained that while professional photographers go through a process to refine and edit their photos, Apple attempts to distill that process down to the single act of capturing an image.

“We reproduce as much as possible what the photographer will be doing in post,” said McCormack. “There are two aspects to taking a photo: the exposure, and how you develop it afterwards. We use a lot of computational photography for exposure, but increasingly in post as well, doing it automatically for you. The point of this is to make photographs that look more true to life, to reproduce what it was like to actually be there.”

He then described how Apple uses machine learning to break down scenes into natural parts for computational image processing.

“The background, the foreground, the eyes, the lips, the hair, the skin, the clothes, the sky. We are handling this all independently, as you would in Lightroom with a bunch of local adjustments,” McCormack continued. “We adjust everything from exposure to contrast and saturation, and combine them all together … We understand what food looks like, and we can optimize color and saturation accordingly, much more faithfully.

“Skies are notoriously hard to get right, and Smart HDR 3 allows us to segment the sky and process it completely independently, then blend it back in to more faithfully recreate what it was like to actually be there.”

McCormack explained why Apple chose to include Dolby Vision HDR video capability in the iPhone 12 lineup.

“Apple wants to untangle the tangled industry that is HDR, and how they do it is by leading with very good content creation tools. It goes from producing HDR video being niche and complicated, because it needed giant expensive cameras and a video suite to make, to now, when my 15-year-old daughter can create full Dolby Vision HDR video. So there will be a lot more Dolby Vision content. It is in the best interests of the industry to create more support.”

The executives also discussed the hardware enhancements to the iPhone 12 and iPhone 12 Pro cameras, noting that “the new wide camera and improved image fusion algorithms reduce noise and improve detail.” The specific camera advancements of the iPhone 12 Pro Max were also an area of interest:

“With the Pro Max, we can extend that even more, because the larger sensor allows us to capture more light in less time, which allows for better freezing of motion at night.”

When asked why Apple chose to increase the sensor size only with the iPhone 12 Pro Max, McCormack revealed Apple’s point of view:

“It’s not as meaningful for us anymore to talk about a particular speed and power of an image or a camera system,” he said. “When we create a camera system, we think about all of these things, and then we think about all we can do on the software side … You can of course go for a bigger sensor, which has form factor issues, or you can look at the whole system and ask if there are other ways to accomplish this. We think about what the goal is, and the goal is not to have a bigger sensor that we can brag about. The goal is to ask how we can take better photos in more of the conditions people find themselves in. It was this thinking that brought about Deep Fusion, Night mode, and temporal image signal processing.”

Read the full interview with Sweet and McCormack on PetaPixel.
