Something to look forward to: AMD has published its first patent on chiplet GPU designs. In typical AMD fashion, the company is trying not to rock the boat. Chiplet GPUs are only beginning to emerge. Intel has been candid about its development process and has confirmed that its first-generation discrete GPUs use chiplets. Nvidia, while coy about the details, has published numerous research papers on the subject. AMD has been the quietest of the three, which only adds to the intrigue.

Chiplets, as the name suggests, are smaller, less complex chips meant to work together inside more powerful processors. They are arguably the inevitable future of all high-performance components and, in some cases, already a successful present: AMD's chiplet processor designs have been brilliant.

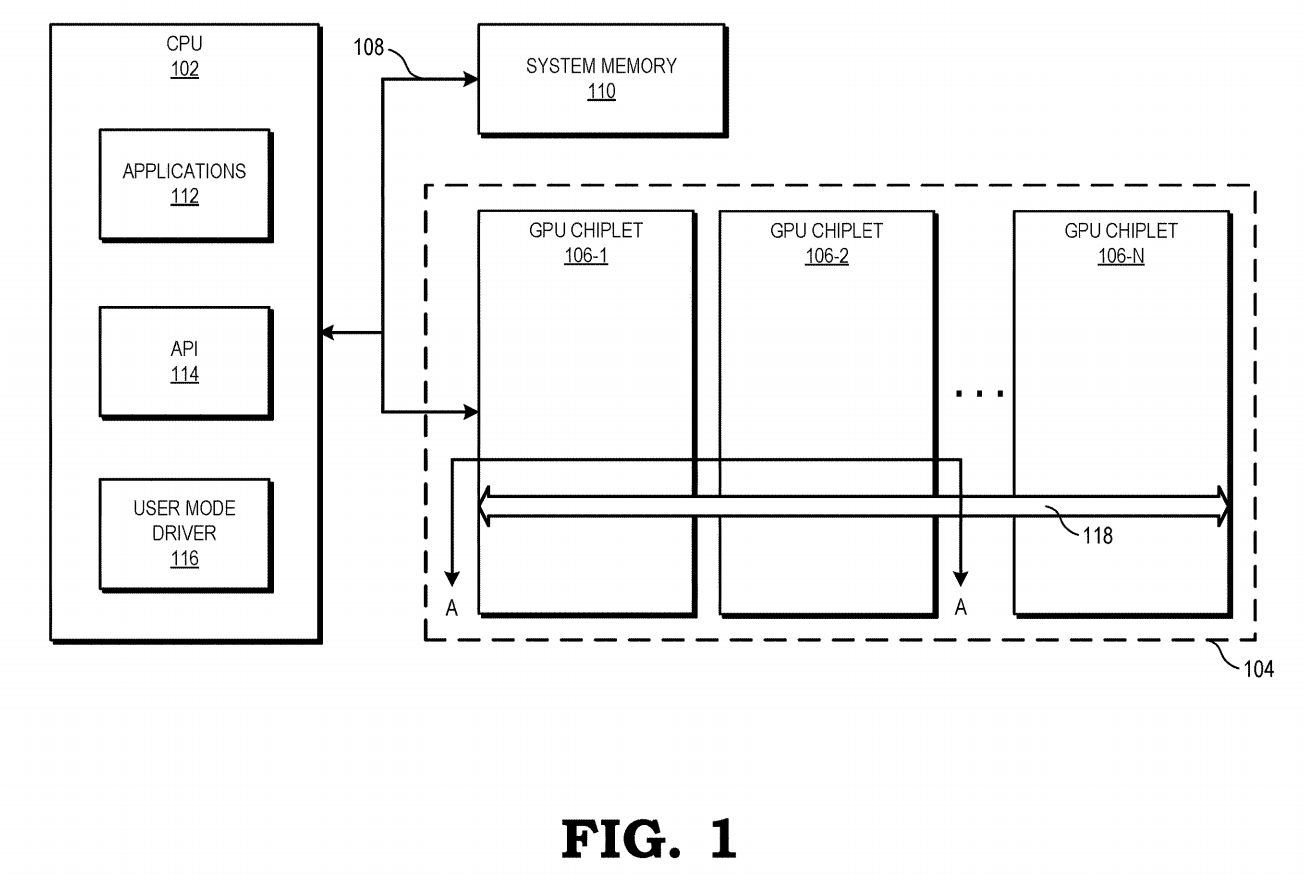

In the new patent, dated December 31, AMD outlines a chiplet design built to closely mimic a monolithic design. Its hypothetical model uses two chiplets connected by a high-speed passive interposer that AMD calls a crosslink.

The crosslink sits between the L2 cache and the L3 cache in the memory hierarchy. Everything below it, such as the cores, L1 cache and L2 cache, is aware of its separation from the other chiplet. Everything above it, including the L3 cache and GDDR memory, is shared among the chiplets.
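To make the split concrete, here is a minimal Python sketch that models the hierarchy as an ordered list with the crosslink placed between L2 and L3, as the patent describes. The level names and the `is_shared` helper are illustrative assumptions, not AMD's terminology or code.

```python
# Minimal sketch of the memory hierarchy described in the patent, ordered from
# closest-to-the-cores to furthest. The crosslink sits between L2 and L3.
# Names are hypothetical; this is an illustration, not AMD's design files.
HIERARCHY = ["cores", "L1", "L2", "crosslink", "L3", "GDDR"]

CROSSLINK_INDEX = HIERARCHY.index("crosslink")


def is_shared(level: str) -> bool:
    """Return True if a level sits above the crosslink (shared by all chiplets)."""
    if level == "crosslink":
        raise ValueError("the crosslink is the boundary, not a storage level")
    return HIERARCHY.index(level) > CROSSLINK_INDEX


for level in HIERARCHY:
    if level != "crosslink":
        scope = "shared across chiplets" if is_shared(level) else "private to each chiplet"
        print(f"{level:>5}: {scope}")
```

Everything at or below L2 reports as private to its chiplet, while L3 and GDDR report as shared.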

This design is advantageous precisely because it is conventional. AMD claims that compute units can access the last-level cache on the other chiplet almost as quickly as they can access their local last-level cache. If that holds true, existing software won't need to be updated.

The same can't be said for Intel's and Nvidia's designs. Intel intends to use two new technologies, EMIB (Embedded Multi-die Interconnect Bridge) and Foveros. The latter is an active interposer that uses through-silicon vias (TSVs), which AMD explicitly states its design does not use. Intel's design also allows the GPU to host a system-accessible cache that powers a new memory fabric.

Nvidia hasn't disclosed everything, but it has pointed to a few directions it may follow. A 2017 research paper describes a four-chiplet design with a locality-aware architecture and non-uniform memory access (NUMA). The paper also experiments with a new L1.5 cache, which holds only remote data accesses and is bypassed during local memory accesses.
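The L1.5 idea can be sketched in a few lines of Python. This is a simplified model under stated assumptions, not Nvidia's implementation: memory pages are assumed to be interleaved round-robin across four chiplets, and the L1.5 is modeled as a small LRU cache that only ever stores lines whose home is a remote chiplet. `PAGE_SIZE`, `home_chiplet`, `L15Cache` and `fetch` are hypothetical names.

```python
from collections import OrderedDict

NUM_CHIPLETS = 4    # the 2017 paper describes a four-chiplet design
PAGE_SIZE = 4096    # hypothetical interleaving granularity in bytes


def home_chiplet(addr: int) -> int:
    """Hypothetical placement: pages are interleaved round-robin across chiplets."""
    return (addr // PAGE_SIZE) % NUM_CHIPLETS


class L15Cache:
    """Small LRU cache that holds only remote data; local accesses bypass it."""

    def __init__(self, chiplet_id: int, capacity: int):
        self.chiplet_id = chiplet_id
        self.capacity = capacity
        self.lines = OrderedDict()  # addr -> data, oldest entry first
        self.hits = 0
        self.misses = 0
        self.bypasses = 0

    def access(self, addr: int, fetch):
        """Read addr; fetch(addr) stands in for the slower path to memory."""
        if home_chiplet(addr) == self.chiplet_id:
            self.bypasses += 1              # local data skips the L1.5 entirely
            return fetch(addr)
        if addr in self.lines:
            self.hits += 1
            self.lines.move_to_end(addr)    # mark as most recently used
            return self.lines[addr]
        self.misses += 1
        data = fetch(addr)                  # remote data crosses to another chiplet
        self.lines[addr] = data
        if len(self.lines) > self.capacity:
            self.lines.popitem(last=False)  # evict the least recently used line
        return data


def fetch(addr: int) -> int:
    """Hypothetical stand-in for a memory read."""
    return addr * 2


cache = L15Cache(chiplet_id=0, capacity=2)
cache.access(0, fetch)      # page 0 lives on chiplet 0: local, bypassed
cache.access(4096, fetch)   # page 1 lives on chiplet 1: remote miss
cache.access(4096, fetch)   # same remote line again: hit
print(cache.bypasses, cache.hits, cache.misses)  # 1 1 1
```

Keeping local data out of the L1.5 means its limited capacity is spent entirely on the accesses that would otherwise pay the inter-chiplet penalty.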

AMD’s approach may seem the least imaginative, but it also seems practical. And if history has proven anything, it’s that developer friendliness is a huge plus.

Additional diagrams of the patent are shown below.

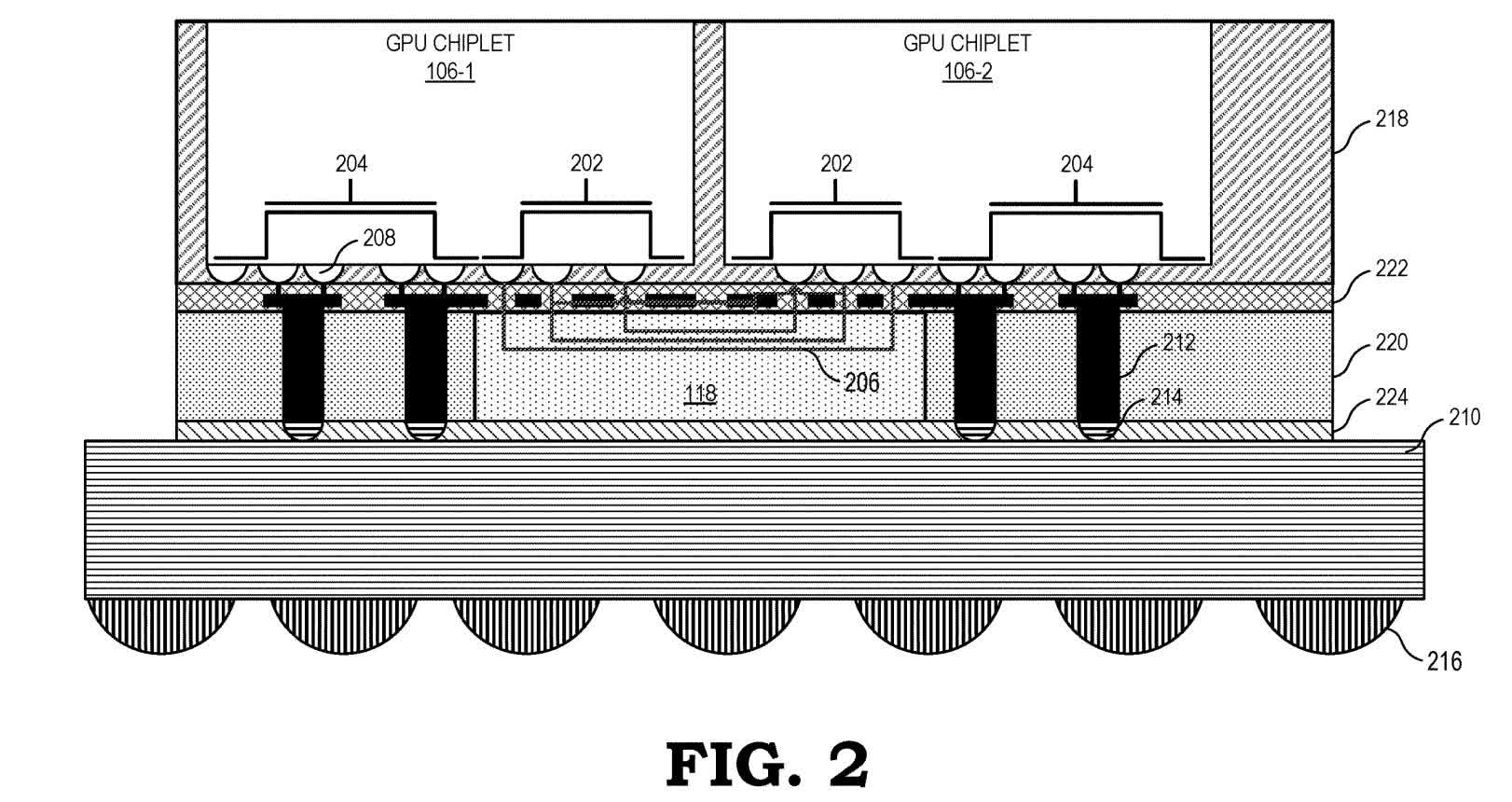

Figure 2 is a cross-sectional view running from the two chiplets down to the printed circuit board. The two chiplets (106-1 and 106-2) sit on top of the passive crosslink (118) and use dedicated conductive structures to reach the crosslink traces (206), through which they communicate with each other. Conductive structures (204) that are not attached to the crosslink connect to the printed circuit board for power and other signals.

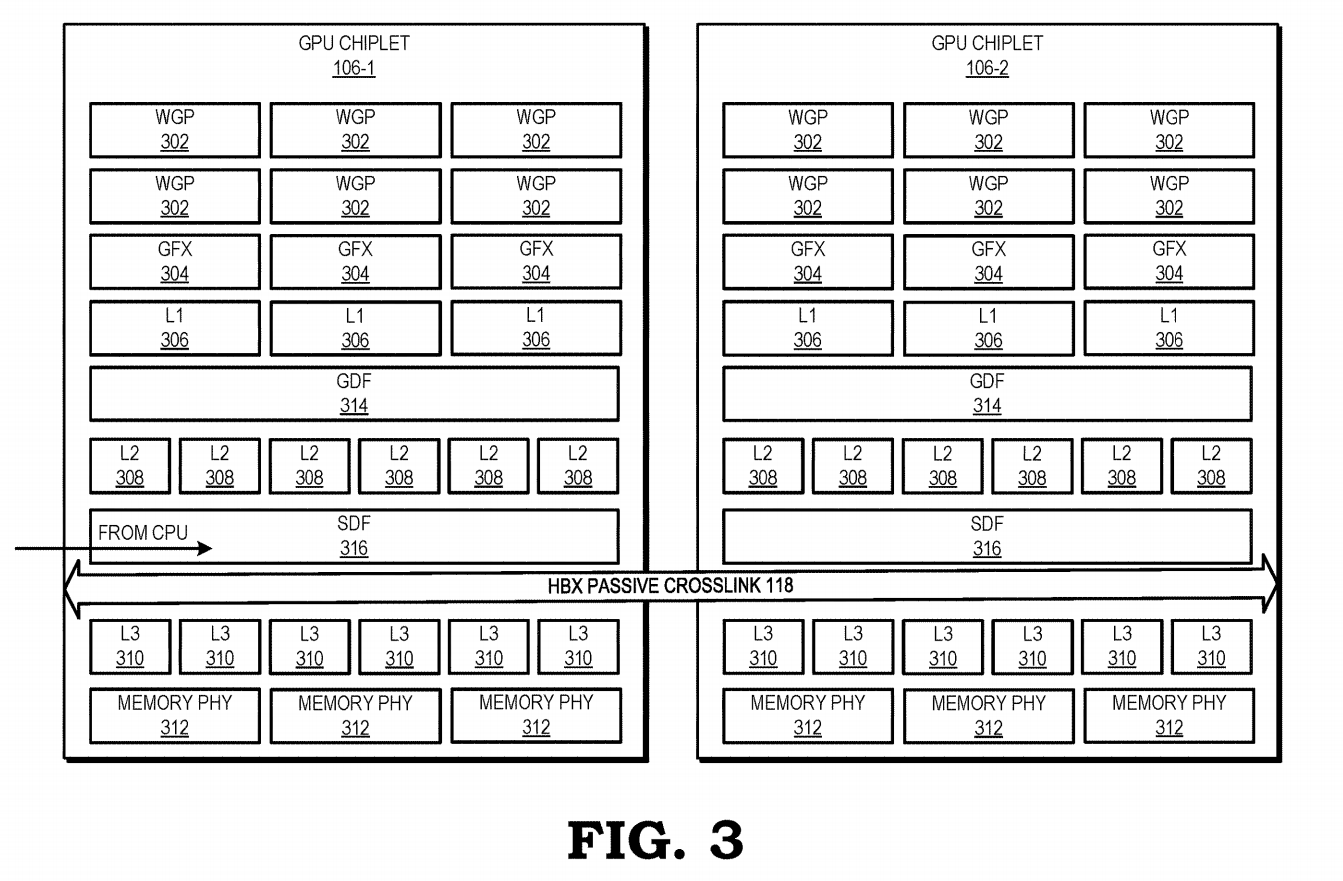

Figure 3 illustrates the cache hierarchy. Workgroup processors (WGPs) (302), which are collections of shader cores, and GFX fixed-function units (304), which are dedicated single-purpose processors, connect directly to a channel's L1 cache (306). Each chiplet contains multiple L2 cache banks (308), which are individually addressable and coherent within a single chiplet. Each chiplet also contains multiple L3 cache banks (310), which are coherent across the whole GPU.
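One way to picture why the L2 banks are coherent only within a chiplet while the L3 banks are coherent across the whole GPU is to look at where a given address can live. The Python sketch below assumes a simple modulo interleaving of cache lines across banks; the bank counts, line size and hashing are hypothetical and not taken from the patent. Each chiplet indexes its own L2 banks, so the same line can be cached once per chiplet, whereas the L3 banks form one GPU-wide pool in which each line has a single home bank.

```python
NUM_CHIPLETS = 2          # the patent's example uses two chiplets
L2_BANKS_PER_CHIPLET = 4  # hypothetical
L3_BANKS_PER_CHIPLET = 4  # hypothetical
LINE_SIZE = 128           # hypothetical cache-line size in bytes


def l2_bank(chiplet: int, addr: int) -> tuple[int, int]:
    """Each chiplet indexes its own L2 banks, so every chiplet may hold a copy."""
    line = addr // LINE_SIZE
    return (chiplet, line % L2_BANKS_PER_CHIPLET)


def l3_bank(addr: int) -> tuple[int, int]:
    """All L3 banks form one GPU-wide pool: each line has a single home bank."""
    line = addr // LINE_SIZE
    global_bank = line % (NUM_CHIPLETS * L3_BANKS_PER_CHIPLET)
    return divmod(global_bank, L3_BANKS_PER_CHIPLET)  # (chiplet, bank)


addr = 0x1A80
# The same line maps to an L2 bank on every chiplet (one possible copy each) ...
print([l2_bank(c, addr) for c in range(NUM_CHIPLETS)])  # [(0, 1), (1, 1)]
# ... but to exactly one L3 bank, whichever chiplet issues the request.
print(l3_bank(addr))  # (1, 1)
```

Under this simplification, keeping L3 coherent needs no cross-chiplet copy management, because only one L3 bank can ever hold a given line.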

The graphics data fabric (GDF) (314) connects the L1 cache banks to the L2 cache banks. The scalable data fabric (SDF) (316) combines the L2 cache banks and connects them to the crosslink (118). The crosslink connects to the SDFs on all chiplets, as well as to the L3 cache banks on all chiplets. The GDDR memory lanes (labeled memory PHY) (312) connect to the L3 cache banks.

For example, if a WGP on one chiplet needed data from a GDDR bank attached to the other chiplet, that data would pass through an L3 cache bank, then across the crosslink to an SDF, then to an L2 bank, and finally through a GDF to an L1 bank.
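That route can be reproduced with a small graph model of the blocks in Figure 3. The Python sketch below hard-codes only the connections listed in the text (WGP to L1, L1 to GDF to L2, L2 to SDF to crosslink, crosslink to L3 banks, L3 to memory PHY) for two chiplets and uses a breadth-first search to find the path. Node names such as `c0.L2` are hypothetical labels, and real hardware does not route requests by graph search; this only illustrates the topology.

```python
from collections import deque


def build_fabric(num_chiplets: int = 2) -> dict[str, set[str]]:
    """Undirected graph of the connections described for Figure 3."""
    graph: dict[str, set[str]] = {}

    def link(a: str, b: str) -> None:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)

    for c in range(num_chiplets):
        p = f"c{c}."
        link(p + "WGP", p + "L1")     # WGPs connect directly to L1
        link(p + "L1", p + "GDF")     # the GDF joins L1 banks to L2 banks
        link(p + "GDF", p + "L2")
        link(p + "L2", p + "SDF")     # the SDF joins L2 banks to the crosslink
        link(p + "SDF", "crosslink")
        link("crosslink", p + "L3")   # the crosslink reaches L3 banks on every chiplet
        link(p + "L3", p + "PHY")     # GDDR memory PHY feeds the L3 banks
    return graph


def route(graph: dict[str, set[str]], src: str, dst: str) -> list[str]:
    """Breadth-first search for the fewest-hop path from src to dst."""
    prev: dict[str, str | None] = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            break
        for nxt in sorted(graph[node]):
            if nxt not in prev:
                prev[nxt] = node
                queue.append(nxt)
    if dst not in prev:
        raise ValueError(f"no path from {src} to {dst}")
    path = []
    node = dst
    while node is not None:
        path.append(node)
        node = prev[node]
    return path[::-1]


# Data held in GDDR attached to chiplet 1, requested by a WGP on chiplet 0.
print(" -> ".join(route(build_fabric(), "c1.PHY", "c0.WGP")))
# c1.PHY -> c1.L3 -> crosslink -> c0.SDF -> c0.L2 -> c0.GDF -> c0.L1 -> c0.WGP
```

Because the L3 banks hang off the crosslink in this model, the hop count from memory to a WGP is the same whichever chiplet the data starts on, which is consistent with AMD's claim that remote last-level cache access is nearly as fast as local access.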

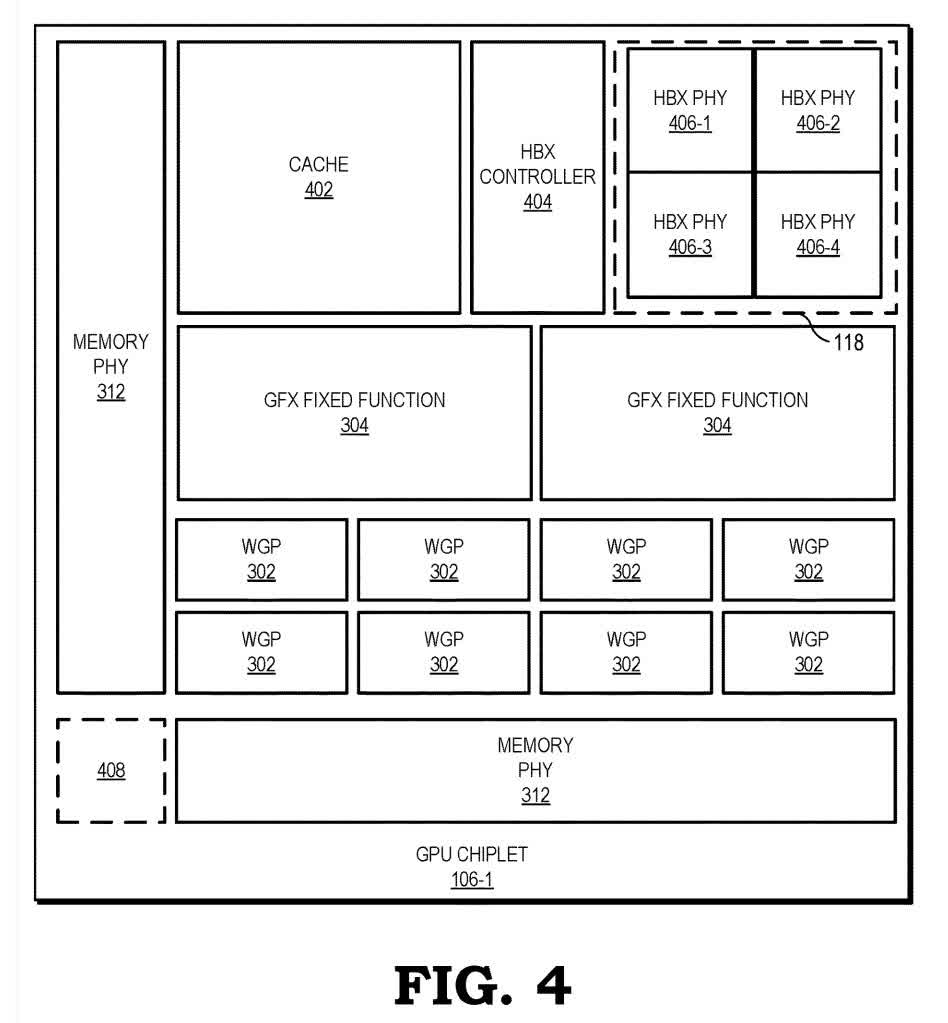

Figure 4 is a bird's-eye view of a chiplet. It shows the locations and potential scale of the various components more accurately. The HBX controller (404) manages the crosslink, to which the chiplet connects through HBX PHY conductors (406). The small square in the lower-left corner (408) is a potential additional crosslink connection for attaching more chiplets.
