
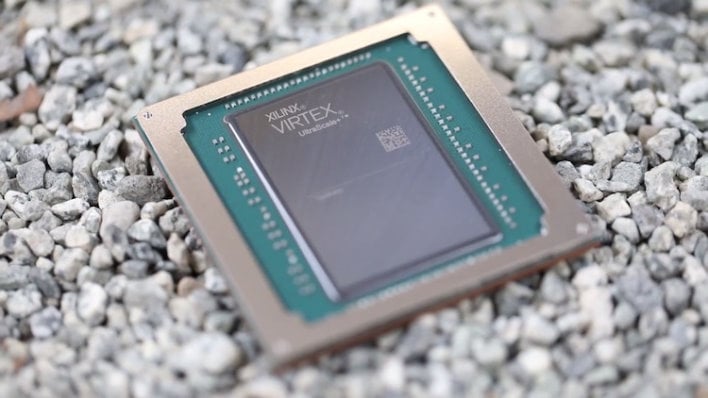

While they often don’t perform as well as dedicated processors, FPGAs can do a terrific job speeding up specific tasks. Whether it’s serving as a fabric for large-scale data center services or improving AI performance, an FPGA in the hands of a skilled engineer can offload a wide variety of tasks from a processor and speed them up. Intel has talked a big game about integrating Xeons with FPGAs over the past six years, but that talk hasn’t resulted in a single product in its lineup. A new patent from AMD, however, could mean the FPGA newcomer might be ready to make one.

In October, AMD announced plans to acquire Xilinx as part of a big push into the data center. On Thursday, the United States Patent and Trademark Office (USPTO) published an AMD patent for integrating programmable execution units with a processor. AMD made 20 claims in its patent application, but the bottom line is that a processor can include one or more execution units that can be programmed to handle different types of custom instruction sets. This is essentially what an FPGA does. It may be a while before we see any products based on this design, as it seems a bit too early for it to be part of the processors included in the recent EPYC leaks.

While AMD has made waves with its chiplet designs for the Zen 2 and Zen 3 processors, that doesn’t appear to be what’s going on here. The programmable unit in AMD’s FPGA patent actually shares registers with the processor’s floating-point and integer execution units, which would be difficult, or at least very slow, if they were not on the same package. This type of integration should make it easy for developers to build these custom instructions into applications, and the processor would simply pass them to the on-processor FPGA. These programmable units can handle atypical data types, especially the FP16 (half-precision) values used to accelerate AI training and inference.
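For readers unfamiliar with FP16, the short Python sketch below shows what half precision gives up in exchange for its two-byte size. It uses only the standard library's `struct` module; the helper name `to_fp16` is ours, chosen for illustration, and has nothing to do with AMD's patent.

```python
import struct

def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    # The 'e' format code packs a float into 2 bytes (binary16).
    return struct.unpack("<e", struct.pack("<e", x))[0]

fp16_size = struct.calcsize("<e")   # bytes per half-precision value
fp32_size = struct.calcsize("<f")   # bytes per single-precision value

tenth = to_fp16(0.1)        # 0.1 is not exactly representable in FP16
big = to_fp16(2049.0)       # above 2048, FP16 can only represent even integers

print(fp16_size, fp32_size, tenth, big)
```

Half the storage of FP32 means twice as many values per register or memory transfer, which is why the format is popular for neural-network workloads that tolerate the reduced precision.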

In the case of multiple programmable units, each unit could be programmed with a different set of specialized instructions, so that the processor could speed up several kinds of work at once, and these programmable execution units could be reprogrammed on the fly. The idea is that when a processor loads a program, it also loads a binary file that configures the programmable execution unit to speed up certain tasks. The CPU’s own decode and dispatch unit could address the programmable unit, passing those custom instructions to it for processing.
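The flow described above can be pictured with a toy software model. This is only a rough sketch under our own assumptions, not AMD's design: the class names (`ToyCore`, `ProgrammableUnit`), the instruction names, and the use of Python functions as a stand-in for a configuration binary are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Handler = Callable[[float, float], float]

@dataclass
class ProgrammableUnit:
    """Hypothetical stand-in for one programmable execution unit."""
    handlers: Dict[str, Handler] = field(default_factory=dict)

    def configure(self, config: Dict[str, Handler]) -> None:
        # Reprogramming replaces whatever the unit handled before.
        self.handlers = dict(config)

class ToyCore:
    """Toy core: fixed-function ops plus programmable units on shared registers."""

    def __init__(self, n_units: int) -> None:
        self.regs: List[float] = [0.0] * 8  # one register file shared by every unit
        self.units = [ProgrammableUnit() for _ in range(n_units)]
        self.fixed: Dict[str, Handler] = {
            "add": lambda a, b: a + b,
            "mul": lambda a, b: a * b,
        }

    def load_program(self, configs: List[Dict[str, Handler]]) -> None:
        # Loading a program also configures the programmable units.
        for unit, config in zip(self.units, configs):
            unit.configure(config)

    def execute(self, op: str, dst: int, a: int, b: int) -> str:
        """Decode one instruction, dispatch it, and report which unit ran it."""
        x, y = self.regs[a], self.regs[b]
        if op in self.fixed:
            self.regs[dst] = self.fixed[op](x, y)
            return "fixed"
        for i, unit in enumerate(self.units):
            if op in unit.handlers:
                self.regs[dst] = unit.handlers[op](x, y)
                return f"pu{i}"
        raise ValueError(f"illegal instruction: {op}")

core = ToyCore(n_units=2)
core.load_program([
    {"absdiff": lambda a, b: abs(a - b)},  # unit 0: one custom instruction
    {"avg": lambda a, b: (a + b) / 2},     # unit 1: a different one
])
core.regs[0], core.regs[1] = 3.0, 7.0
ran_on = [
    core.execute("add", 2, 0, 1),
    core.execute("absdiff", 3, 0, 1),
    core.execute("avg", 4, 0, 1),
]
```

Because every unit reads and writes the same `regs` list, a custom instruction's result is immediately visible to ordinary instructions, which mirrors the register sharing the patent describes. Calling `core.units[0].configure(...)` again with a different table models on-the-fly reprogramming.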

AMD has been working for years on different ways to speed up AI calculations. First, the company announced and released the Radeon Instinct series of AI accelerators, which were just large, headless Radeon GPUs with custom drivers. The company doubled down on that in 2018 with the release of the MI60, its first 7nm GPU, ahead of the launch of the Radeon RX 5000 series. A focus on AI through FPGAs makes sense after the acquisition of Xilinx, and we are excited to see what the company comes up with.
