
The world is inundated with Covid-19 data. Did this information help?

A new study examines how public knowledge, risk perception and behavioral intentions were influenced by six different maps showing the prevalence of Covid-19 in the United States on May 11, 2020.

The result.

Having data on the number and distribution of Covid-19 cases had very little effect overall. In fact, respondents in a control group, who received no data at all, performed better on a test of knowledge about the current state of the epidemic than respondents who were provided with data.

The idea for this study was great, capitalizing on a variety of data visualizations provided by a range of credible sources at a time when the public was showing a lot of interest.

The maps.

To measure the association of different ways of visualizing spatial data with viewers’ knowledge, risk perceptions, and behavioral intentions, the researchers selected six different maps that varied based on their attributes. Some reported data at the state level while others were at the county level.

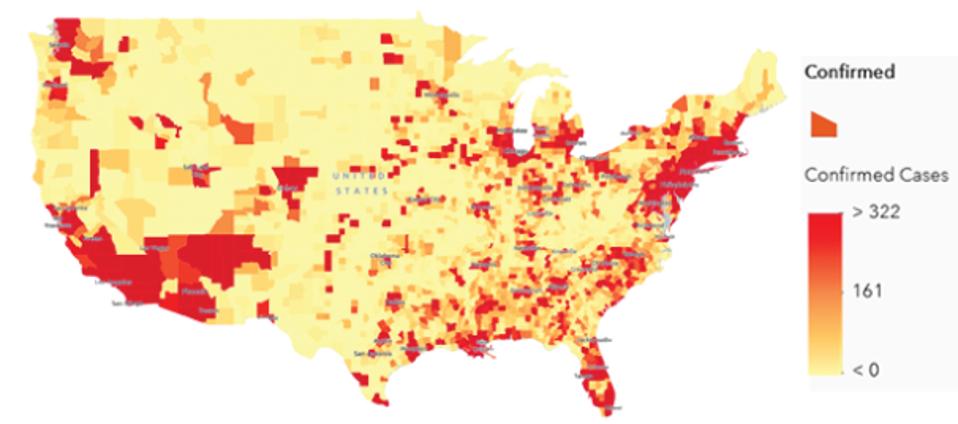

Map produced by researchers at Johns Hopkins University shows prevalence of Covid-19 in United States …

Modified from https://jamanetwork.com/journals/jamanetworkopen/fullarticle/2774639.

Some used “bubbles” to illustrate the scale of the outbreak, while others used a color gradient, a format known as a “heat map”.

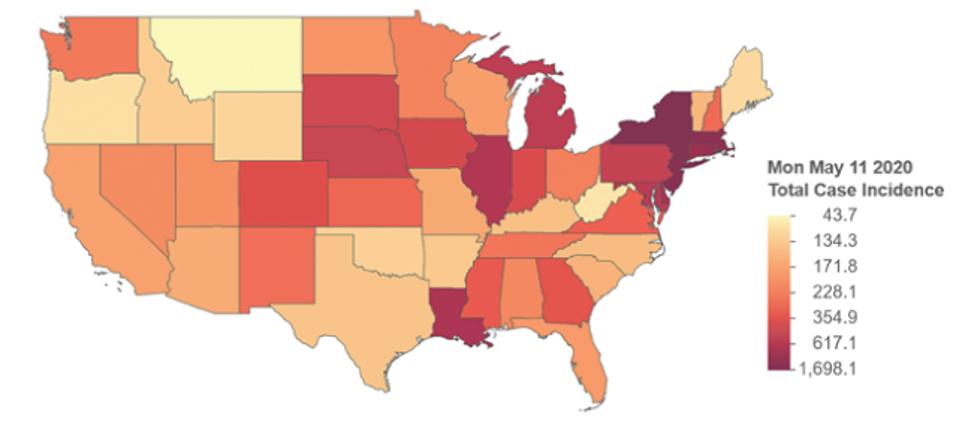

A map produced by the Kaiser Family Foundation shows the prevalence of Covid-19 in the United States using a …

Modified from https://jamanetwork.com/journals/jamanetworkopen/fullarticle/2774639.

Finally, some maps were expressed in terms of the total number of cases and others in terms of the number of cases per capita.

Map produced by researchers at the University of California at Davis shows incidence of Covid-19 …

Modified from https://jamanetwork.com/journals/jamanetworkopen/fullarticle/2774639.

Respondents to the survey received only one of these maps and were tested on their knowledge of the total number of cases and cases per capita, their perception of individual and societal risk, and their intentions regarding compliance with Covid-19 guidelines.

The results.

Prevalence maps (cases per capita) translate poorly into knowledge of the total number of cases. Knowledge of the total number of cases was greater among respondents who had viewed maps showing the total number of cases than among those who had viewed maps showing cases per capita. This is not surprising. What is surprising is the magnitude of the difference.

Certain types of maps significantly improved knowledge of prevalence:

- Heat maps (also called choropleths) work better than bubble maps. This is interesting because choropleths tend to downplay the importance of large cities relative to rural areas. Small areas with high prevalence can be difficult to see on the map.

- State level maps work better than county level maps. Maybe it’s because there are fewer states than counties. To grasp the overall picture, you have to “add” the different states, or apply another mental process that approximates a sum. Without a doubt, this is easier to do with 48 states displayed than with 3,108 counties and county equivalents. (Alaska and Hawaii are excluded from the maps.) This is particularly interesting because the county data is more accurate, at least in the sense that it provides a more accurate description of where cases are concentrated. (So the usual advice on using the smallest area units for choropleths may not be the right advice in this case. Perhaps the state level maps in this case overcome the disadvantage that choropleths have with small areas such as urban counties.)

- Per capita maps work better than total case counts. (Not surprising.)

Viewing maps of any kind had no impact on individual risk perception. There was no indication that any aspect of the presentation of the data, or even the presentation of the data itself, had an effect on the individual perception of risk. This is discouraging. Since there was nothing wrong with the data itself (but see more on that below), the reason is something that needs to be explored further.

Viewing the maps made respondents slightly more optimistic about the societal risk. Perception of societal risk – measured in terms of optimism or pessimism about improving epidemic conditions within two weeks – was weakly influenced by the presentation of a map. What kind of map didn’t matter. Interestingly, respondents who viewed a map were more optimistic than respondents who were not provided with any data.

Viewing the maps had no influence on behavior. There was no evidence of any effect of the data on intention to comply with protection guidelines.

Viewing the maps led to less precise knowledge of the total number of cases than no information at all.

These results are striking. In particular, they suggest that access to information of a type widely disseminated during the first months of the Covid-19 pandemic had virtually no impact on public understanding of the epidemic. Even more striking is the counterintuitive result that sometimes being presented with data was worse than not being presented with data at all.

What explains this and is it important?

The explanation.

The most surprising finding was the last one, that viewing maps seemed to lead to a reduction in knowledge. My guess is that this is not a general pattern, but an outcome of the study design. We know from the details reported in the study (the first major finding above) that respondents presented with prevalence maps (i.e. maps of the number of cases per capita) answered questions about the total number of cases worse than those whose maps showed the total number of cases. This makes sense. Even if you fully understand what information is presented in prevalence maps, unless you know where the population is concentrated (either because you know enough about the geography of the United States, or because maps of the U.S. population are also presented to you), there is no way to infer the total number of cases. One could even assume that the information presented by the prevalence maps is damaging to knowledge of the total number of cases, because it is misleading: it is a measure of something very different.
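To make the per capita versus total distinction concrete, here is a minimal Python sketch. The two regions, their populations and their case counts are invented for illustration and are not taken from the study. It shows that two places can look identical on a prevalence map while differing twentyfold in total cases.

```python
# Hypothetical regions: the names, populations and case counts below are
# made up for illustration and do not come from the study.
regions = {
    "Region A (large)": {"population": 10_000_000, "cases": 20_000},
    "Region B (small)": {"population": 500_000, "cases": 1_000},
}


def cases_per_100k(cases, population):
    """Prevalence expressed as cases per 100,000 residents."""
    return cases * 100_000 / population


# What a per capita map would encode for each region.
prevalence = {
    name: cases_per_100k(r["cases"], r["population"])
    for name, r in regions.items()
}

# What a total-count map would encode for each region.
totals = {name: r["cases"] for name, r in regions.items()}

for name in regions:
    print(f"{name}: {prevalence[name]:.0f} per 100k, {totals[name]:,} total cases")
```

Both regions would get the same color on a per capita map, so a viewer who does not already know the populations has no way to recover the totals from that map alone.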

Respondents who received prevalence maps are therefore expected to demonstrate less knowledge of total cases. These prevalence maps made up two of the six maps presented. Thus, about a third of the respondents who were presented with maps received harmful information. If the difference between no information (no map presented) and good information (a map of the total number of cases) is negligible, then the difference between the group without information (no map presented) and a group with contaminated information (two-thirds with good information and one-third with harmful information) would be expected to be marginal, but negative. This is in fact what the researchers found.
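The arithmetic behind this argument can be sketched in a few lines of Python. Only the two-of-six share comes from the article. The effect sizes are hypothetical placeholders in arbitrary units, and the sketch assumes that respondents were spread evenly across the six maps.

```python
from fractions import Fraction

# From the article: two of the six maps showed prevalence (cases per capita).
# Assumption: respondents were split evenly across the six maps.
share_prevalence = Fraction(2, 6)
share_total = 1 - share_prevalence

# Hypothetical effects on a total-cases knowledge score, relative to the
# no-map control group. These numbers are illustrative, not study results.
effect_total_map = 0.0        # "negligible" benefit, assumed to be exactly zero
effect_prevalence_map = -3.0  # assumed harm, in arbitrary score units


def pooled_effect(share_a, effect_a, share_b, effect_b):
    """Share-weighted average effect across two subgroups."""
    return float(share_a) * effect_a + float(share_b) * effect_b


pooled = pooled_effect(
    share_total, effect_total_map,
    share_prevalence, effect_prevalence_map,
)
print(f"Pooled effect of seeing any map vs. no map: {pooled:+.2f}")
```

Under these assumptions the pooled effect is negative but smaller in magnitude than the harm in the prevalence-map subgroup alone, which matches the "marginal, but negative" pattern described above.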

What have we learned?

First, the raw data had minimal value for the average respondent. Second, scientists, data journalists, bloggers and others who store and present data to the public should be aware that the way data is presented has perceptual consequences (for example, heat maps versus bubbles). Last but not least, there is still considerable scope for improving general knowledge of how to interpret quantitative data and information, and how to design optimal visualizations. The differences between those with and without access to the data – in knowledge, risk perception and behavioral intention – were small. But they should be big. The data should be informative, especially with respect to a situation, such as the Covid-19 pandemic, where there is a lot of confusion and uncertainty. In a society that increasingly relies on data to make important decisions, it is essential that people know how to interpret charts and numbers.
