
Rob Blackie is a digital strategist based in London, England, who has contributed to The Guardian and The Independent newspapers.


Facebook has had a terrible couple of years. Fake news. Cambridge Analytica. Charges of anti-Semitism. Russian hacking of the 2016 election. Racist memes, murders and lynchings in India, Myanmar and Sri Lanka.

And Facebook is just the tech company with the longest list of scandals. We must also take into account the well-documented roles of Google, YouTube and Twitter in radicalization, not to mention the growing global health crises caused by medical misinformation spread on all the major platforms.

Investors are rightly starting to worry. If tech companies and their investors can neither anticipate nor stop these problems, the likely result is damaging regulation that could cost them billions.

The rest of us are increasingly frustrated that the internet giants refuse to take responsibility. The argument that the problem lies in third parties misusing their tools carries little weight, not only with the media and politicians but, more and more, with the public.

If tech giants don't want regulators stepping in to police them, they must do much more to anticipate and stop abuses before they happen.

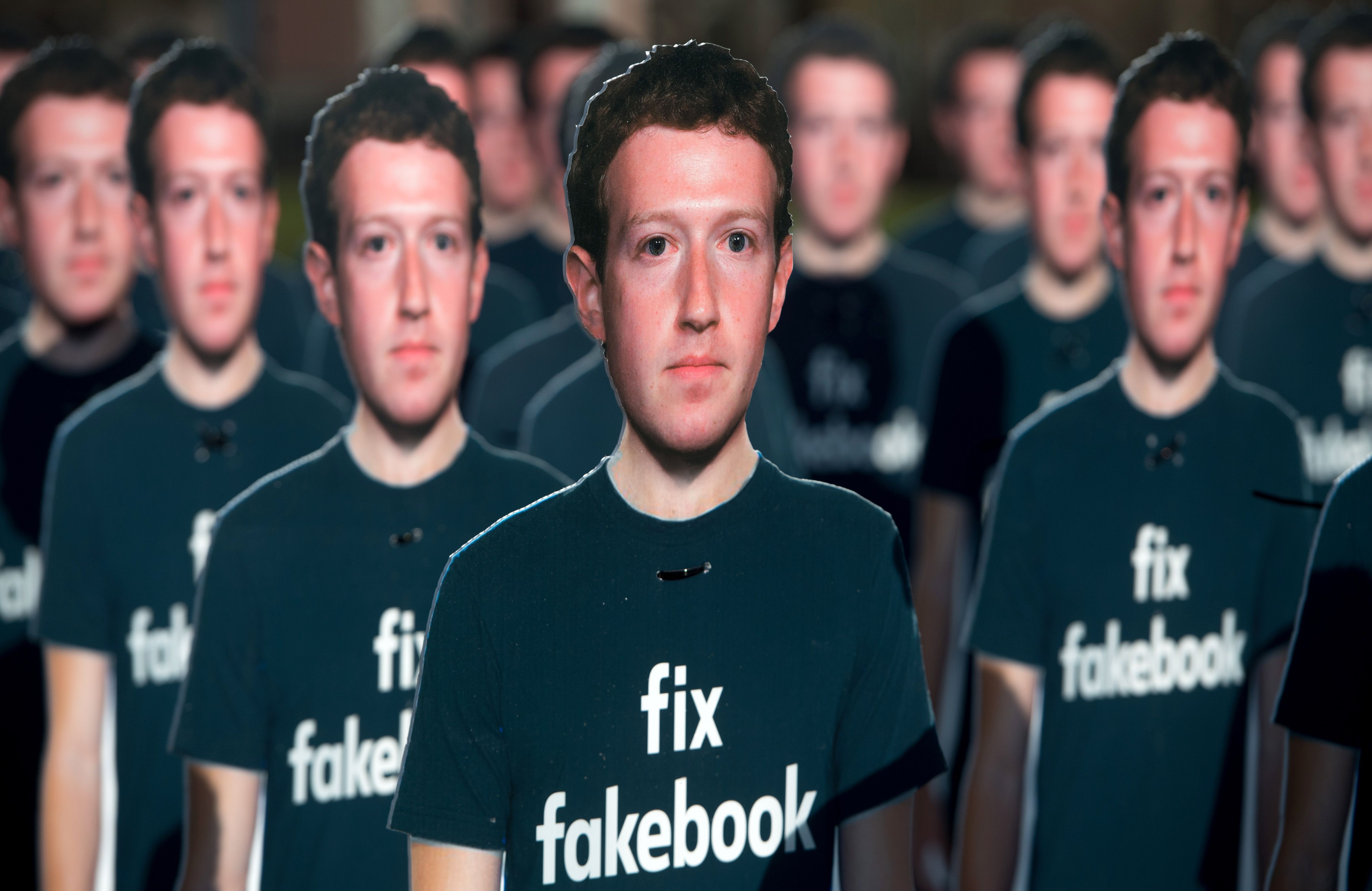

Mark Zuckerberg, founder and CEO of Facebook, at the US Capitol on April 10, 2018.

The Avaaz advocacy group has drawn attention to what the group claims are hundreds of millions of fake accounts still spreading misinformation on Facebook. (Photo: SAUL LOEB / AFP / Getty Images)

The common factor in social media scandals

The issues above were not caused by anyone breaking the social networks' existing rules. Nor did they involve hacking in the true sense of the word, meaning data theft.

These scandals are best understood as political smear campaigns. If you have ever seen a good smear campaign in action, you will know that smears are fun, interesting and shareable, just like any good viral content.

US President Lyndon Johnson was a master of the smear. As Hunter S. Thompson recounted:

"The race was close and Johnson was getting worried. Finally, he told his campaign manager to start a massive rumor campaign about his opponent's lifelong habit of enjoying carnal knowledge with his barnyard sows.

"My God, we can't get away with calling him a pig," the campaign manager protested. "Nobody's going to believe a thing like that."

"I know," Johnson replied. "But let's make the sonofabitch deny it."

The principles of a good smear campaign lie at the root of many of the abuses we see on Facebook today. Sometimes the damaging story has a kernel of truth. Sometimes it is entirely made up. But that's beside the point.

For a smear to work, it simply needs to be shared. Facebook, YouTube and Twitter reward shareable content.

More shares mean more people reached directly: that's the very nature of social media.

That's where the problem lies. A fun, shocking (and therefore shareable) story is more likely to be recommended by YouTube, cheaper to promote with advertising, and better ranked on Google.

Of course, this has been understood for years. You can even buy guides on how to exploit it.

Ryan Holiday's hilarious and disturbing book, Trust Me, I'm Lying, explains how he would deface his own client's billboards at night to create controversy and make them more shareable. Holiday would then anonymously share photos of the defaced billboards in Facebook groups, forums and on Twitter to stir up fights, egging on both sides.

The resulting outrage generated a lot of sharing on social networks and could even serve as a springboard into the national media.

Nothing Holiday did was illegal, or even against the social networks' rules. But it was clearly an abuse of social networks, and ultimately harmful to society, because it created controversy where none had existed. What would you rather society focused on? Schools, jobs and hospitals, or the latest social media flare-up?

Beyond generating controversy, there are many technical tricks to help your smears take off. Social networks are good at catching some of them, such as fake profiles, data scraping and traditional hacking.

But new problems keep emerging, and to regulators it looks as though tech companies are not learning from their mistakes.

Image courtesy of TechCrunch / Bryce Durbin

Why is that?

Tech companies simply have the wrong culture to solve this type of problem. Technologists inevitably focus on technical abuse. Hiring armies of fact-checkers is an important response to fake news, but ask any political strategist how to fight a smear. They will not say "counter it with the truth," because that can amplify the lie rather than stop it. Remember the politician and the pigs.

This is a different kind of abuse. Almost all the major abuses mentioned here involve someone using Facebook's tools in ways Facebook did not anticipate.

Facebook has built an incredible engine that uses what it knows about people to improve ad targeting. But the same engine can also be used by companies more commonly associated with military-style psychological warfare against populations and armies.

Tech companies will always struggle with social problems. Engineers have a different kind of devious mind from that of political strategists and online scammers.

Fortunately, we already have a model for this.

White hat hackers have been around for decades. Internet giants reward these "friendly" hackers, paying them to find security holes and then help fix them.


Our proposal: "White hat" Cambridge Analytica

All social networks need to do is start paying bounties to the most devious social and political strategists in the world: people who would otherwise be using social networks on behalf of shady clients.

Pay them to find weaknesses in Facebook, just as tech companies already pay bug bounties for disclosing technical flaws in software.

Everybody wins. Facebook could solve problems before they are widely exploited. As a bonus, it would steer some consultants away from borderline-criminal careers. And Facebook's investors would be reassured by fewer scandals and a lower risk of costly regulation.

And the rest of us would benefit from Facebook finally starting to act its age.
