
Encoding biases into machine learning models, and more generally into the constructs we call AI, is almost inevitable, but we can certainly do better than we have in previous years. IBM hopes that a new database of one million faces, more representative of those in the real world, will help.

Face recognition is used for everything from unlocking your phone to unlocking your front door, and for estimating your mood or your likelihood of committing crimes, and we may as well admit that many of these applications are superfluous. But even the good ones often fail simple tests, like working properly for people of certain skin tones or ages.

This is a multi-layered problem, and one of its most important layers is that many developers and creators of these systems do not think about, let alone audit for, a failure of representation in their data.

That is something everyone needs to work harder on, but the actual data matters too. How can you train a computer vision algorithm to work well with everyone if there is no dataset that contains everyone?

Every set will necessarily be limited, but building one that includes enough of everyone that no one is systematically excluded is a laudable goal. With its new Diversity in Faces (DiF) set, that is what IBM has tried to create. As the paper introducing the set puts it:

For facial recognition to work the way you want – to be both accurate and fair – the training data must provide sufficient balance and coverage. The training datasets must be large enough and diverse enough to understand the many differences between faces. The images must reflect the diversity of the features of the faces we see in the world.

The faces come from a huge dataset of 100 million images (Flickr Creative Commons), which another machine learning system combed through to find as many faces as it could. These were then isolated and cropped, and that's when the real work began.

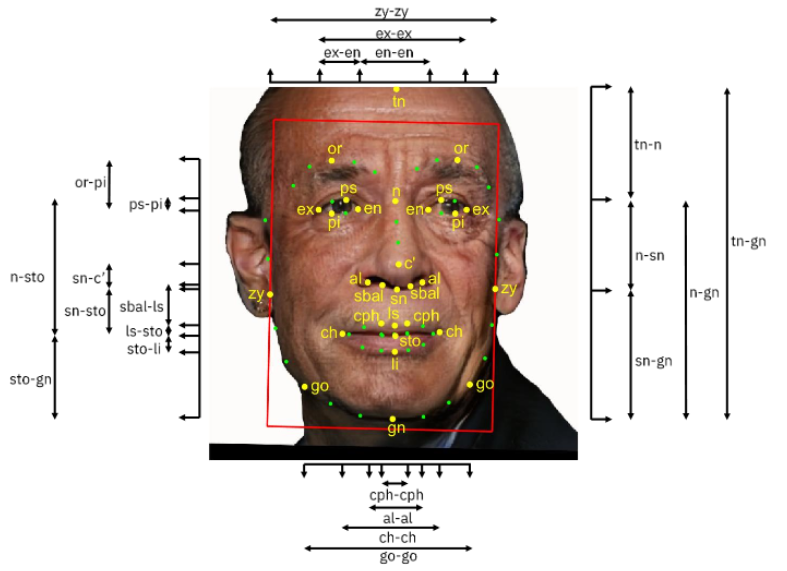

These sets are meant to be ingested by other machine learning algorithms, so they must be both diverse and accurately labeled. The DiF set therefore has a million faces, each accompanied by metadata describing things like the distance between the eyes, the size of the forehead, and so on. All these measures combined create the "mask" that a system would use, for example, to match one image to another of the same person.

But any one of these measures may or may not be good for identifying people, or accurate for a given ethnic group, or what have you. The IBM team has therefore developed a revised set that includes not only simple elements, such as the distances between features, but also the relationships between these measurements, for example the ratio of the area above the eyes to the one under the nose. Skin color, as well as contrast and types of coloration, is also included.
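To make the idea of distances and ratios concrete, here is a minimal sketch in Python. The landmark names, the coordinates and the particular measures are hypothetical and chosen only for illustration; they are not the coding schemes the DiF paper actually uses.

```python
import math

# Hypothetical 2D landmark positions in pixels (illustrative only,
# not the DiF metadata format).
EXAMPLE_LANDMARKS = {
    "left_eye": (30.0, 40.0),
    "right_eye": (70.0, 40.0),
    "forehead_top": (50.0, 10.0),
    "nose_tip": (50.0, 60.0),
    "chin": (50.0, 100.0),
}


def face_measures(landmarks):
    """Return a few simple distances and one ratio between them."""
    left, right = landmarks["left_eye"], landmarks["right_eye"]
    # Midpoint between the eyes, used as a reference for the forehead height.
    eye_mid = ((left[0] + right[0]) / 2, (left[1] + right[1]) / 2)

    eye_distance = math.dist(left, right)
    forehead_height = math.dist(landmarks["forehead_top"], eye_mid)
    lower_face_height = math.dist(landmarks["nose_tip"], landmarks["chin"])

    return {
        "eye_distance": eye_distance,
        "forehead_height": forehead_height,
        "lower_face_height": lower_face_height,
        # A ratio does not change when the whole image is resized,
        # unlike the raw pixel distances above.
        "forehead_to_lower_face": forehead_height / lower_face_height,
    }


def scale(landmarks, factor):
    """Resize every landmark by the same factor (a simulated zoom)."""
    return {name: (x * factor, y * factor) for name, (x, y) in landmarks.items()}


original = face_measures(EXAMPLE_LANDMARKS)
zoomed = face_measures(scale(EXAMPLE_LANDMARKS, 2.0))
```

In this toy example the raw distances double when the image is enlarged, while the ratio stays the same, which is one reason a relationship between measurements can be a more stable descriptor than a single distance.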

In a long-awaited move, gender is detected and coded along a spectrum, not as a binary. Since gender itself is non-binary, it makes sense to represent it as any fraction between 0 and 1. So you have a metric describing where individuals appear on a scale from female to male.

Age is also automatically estimated, but for these last two values a kind of "reality check" is also included, in the form of a "subjective annotation" field, for which people were asked to label faces as male or female and to guess their age. Bias may be re-encoded here, since human-sourced labels tend to introduce it. All of these elements add up to a considerably larger set of measures than in any other publicly available face recognition training set.

You may be wondering why race or ethnicity is not a category. IBM's John R. Smith, who led the creation of the set, explained to me in an email:

Ethnicity and race are often used interchangeably, although the former is more related to culture and the latter to biology. The boundaries between the two are not distinct, and the labeling is highly subjective and noisy, as previous work has found. Instead, we have chosen to focus on coding schemes that can be reliably determined and that have a kind of continuous scale that can feed into the analysis of diversity. We may come back to some of these subjective categories.
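As a rough illustration of how a continuous scale can feed into an analysis of diversity, the sketch below bins scores between 0 and 1 and computes Shannon evenness, a common measure of how evenly a set is spread across categories. The function name, the number of bins and the sample values are assumptions made for this example, not IBM's actual procedure.

```python
import math
from collections import Counter


def shannon_evenness(values, bins=4):
    """Bin scores in [0, 1] and return Shannon evenness.

    The result is near 1 when the bins are equally populated and
    0 when every score falls into the same bin.
    """
    if bins < 2:
        raise ValueError("bins must be at least 2")
    if not values:
        raise ValueError("values must not be empty")
    if any(v < 0.0 or v > 1.0 for v in values):
        raise ValueError("values must lie in [0, 1]")

    # Map each score to a bin index; a score of exactly 1.0 goes in the last bin.
    counts = Counter(min(int(v * bins), bins - 1) for v in values)
    total = sum(counts.values())

    # Shannon entropy of the bin proportions (empty bins contribute nothing).
    entropy = -sum((c / total) * math.log(c / total) for c in counts.values())

    # Divide by the maximum possible entropy, log(bins), to normalize.
    return entropy / math.log(bins)


# Made-up scores for illustration: one balanced sample, one skewed sample.
balanced = shannon_evenness([0.1, 0.3, 0.6, 0.9])
skewed = shannon_evenness([0.1, 0.1, 0.1, 0.9])
```

A low score on any one attribute would suggest that some part of its range is thinly covered, though a high score alone does not show that a set is representative.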

However, even with a million faces, there is no guarantee that this set is sufficiently representative, that is, that enough of every group and subgroup is present to avoid bias. In fact, Smith seems certain that this is not the case, which is really the only logical position.

We could not be sure in this first version of the dataset. But that's the goal. First, we must determine the dimensions of diversity. We do this by starting with the data and coding schemes as in this version. Then we'll iterate. We hope to bring the wider research community and the industry into the process.

In other words, it's a work in progress. But the same goes for all science and, despite frequent missteps and broken promises, facial recognition is without a doubt a technology that we will all be engaging with in the future, whether we like it or not.

Any AI system is only as good as the data it was built on, so improvements to the data will be felt for a long time. Like any other set, DiF will likely go through iterations to correct gaps, add more content, and incorporate suggestions or requests from the researchers who use it. You can request access here.
