Making the VR experience simple and portable was the main goal of the Oculus Quest, and it definitely accomplishes that. But going from things in the room tracking your headset to your headset tracking things in the room was a complex process. I talked with Facebook CTO Mike Schroepfer ("Schrep") about the journey from "outside-in" to "inside-out."

When you move your head and hands around with a VR headset and controllers, some part of the system has to track exactly where those things are at all times. There are generally two ways this is attempted.

One is to have sensors in the room that closely watch the devices and their embedded LEDs, looking from the outside in. The other is to have the sensors on the headset itself, which watches for signals in the room, looking from the inside out.

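Both have their merits, but if you want a system to be wireless, your best bet is inside-out, since you don't have to send signals wirelessly between the headset and a separate computer doing the position tracking, which can add latency to the experience.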

Facebook and Oculus set a goal a few years back to achieve not just inside-out tracking, but to make it as good as or better than the wired systems that run on high-end PCs. And it would have to run anywhere, not just in a prepared space with set boundaries, and do so within seconds of putting it on. The result is the impressive Quest headset, which succeeds with flying colors at this task (though it is not much of a leap in others).

What's impressive about it isn't just that it can work out its own precise position in 3D from what its cameras see, but that it can do so in real time on a chip with a fraction of the power of an ordinary computer.

"I'm unaware of any system that's anywhere near this level of performance," said Schroepfer. "In the early days there were a lot of debates about whether it would work or not."

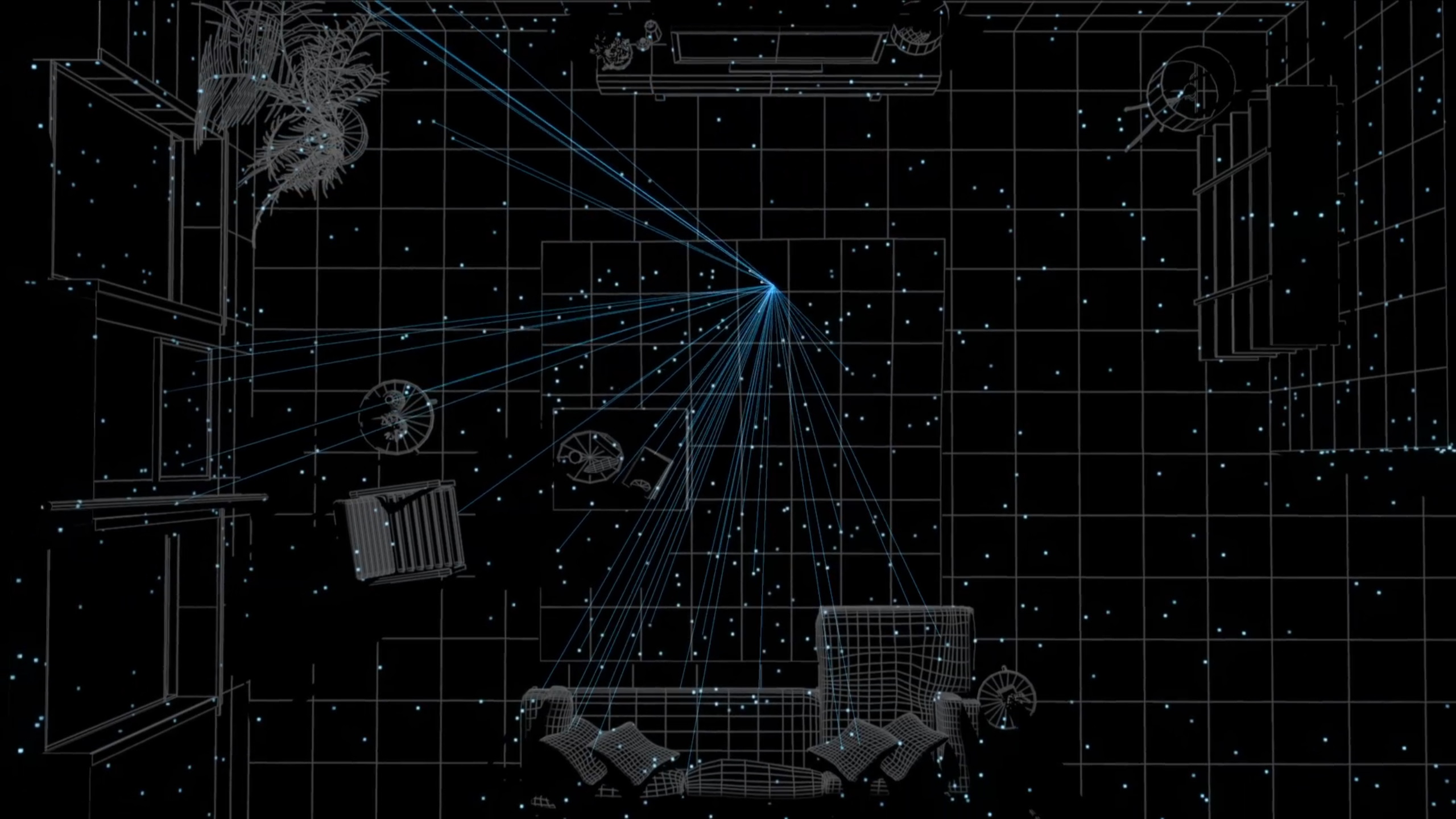

The term for the general technique is simultaneous localization and mapping, or SLAM. It means building a 3D map of your surroundings while also figuring out where you are within that map. Naturally robots have been doing this for some time, but they typically rely on specialized sensors such as lidar and on more powerful processors. All the new headsets would have are ordinary cameras.

"In a warehouse, I can make sure my lighting is right, I can put fiducials on the wall, which are markers that can help reset things if I get errors. That's like a dramatic simplification of the problem, you know?" Schroepfer pointed out. "I'm not asking you to put fiducials up on your walls. We don't make you put QR codes or GPS coordinates around your house.

"It's never seen your room before, and it just has to work. And in a relatively constrained computing environment: we've got a mobile CPU in this thing. And most of that mobile CPU is going to the content, too. The robot isn't playing Beat Saber at the same time it's cruising through the warehouse."

It's a difficult problem in many dimensions, then, which is why the team has been working on it for years. Ultimately several factors came together. One was simply that mobile chips became powerful enough that something like this is even possible. But Facebook can't really take credit for that.

More important was the computer vision work done by Facebook's AI researchers under Yann LeCun and others there. Machine learning models frontload a lot of the processing necessary for computer vision problems, so the resulting inference engines are comparatively lightweight. Putting efficient, edge-oriented machine learning to work brought this problem closer to a possible solution.

Most of the labor, however, went into the complex interplay of the multiple systems that run in real time to do the SLAM work.

"I wish I could tell you it's just this really clever formula, but there's lots of bits to get this to work," Schroepfer said. "For example, you have an IMU on the system, an inertial measurement unit, and that runs at a very high frequency, maybe 1000 Hz, much higher than the rest of the system [i.e. the sensors, not the processor]. But it has a lot of error. And then we run the tracker and mapper on separate threads. And actually we multi-threaded the mapper, because it's the most expensive part [i.e. computationally]. Multi-threaded programming is a pain to begin with, but you do it across these three, and then they share data in interesting ways to make it quick."
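To make the IMU point concrete: integrating a slightly biased accelerometer twice makes position error grow with the square of time, which is why a fast IMU still needs slower, drift-free corrections from the cameras. The 1D simulation below is a minimal sketch under stated assumptions. The 30 Hz camera rate, the bias value, and the fixed correction gain are hypothetical, and the blend is a deliberately crude fixed-gain correction, not the filter Oculus actually uses (real visual-inertial systems typically use something like an extended Kalman filter or nonlinear optimization).

```python
"""Toy 1D illustration: a fast but biased IMU drifts unless camera fixes correct it.

All rates, gains, and bias values are hypothetical; this is not Oculus's tracker.
"""

IMU_HZ = 1000       # IMU sample rate (the ~1000 Hz figure quoted in the article)
CAMERA_HZ = 30      # hypothetical camera/tracker update rate
ACCEL_BIAS = 0.05   # hypothetical constant accelerometer bias, m/s^2
GAIN = 0.5          # hypothetical weight pulled toward each camera fix


def simulate(duration_s: float, use_camera: bool) -> float:
    """Return the absolute position error (m) after duration_s seconds.

    The true headset sits still at x = 0, so any estimated motion is pure drift.
    """
    dt = 1.0 / IMU_HZ
    steps_per_frame = IMU_HZ // CAMERA_HZ  # IMU samples between camera frames
    pos, vel = 0.0, 0.0
    for step in range(int(duration_s * IMU_HZ)):
        # Dead reckoning: integrate the (biased) acceleration twice.
        accel = 0.0 + ACCEL_BIAS  # true acceleration is zero
        vel += accel * dt
        pos += vel * dt
        if use_camera and step % steps_per_frame == 0:
            # Assumption: the camera-based tracker observes the true position.
            camera_pos = 0.0
            pos += GAIN * (camera_pos - pos)
            vel *= 1.0 - GAIN  # crude damping of the drifting velocity estimate
    return abs(pos)


if __name__ == "__main__":
    print("IMU only:     ", simulate(10.0, use_camera=False))
    print("IMU + camera: ", simulate(10.0, use_camera=True))
```

With these numbers, IMU-only dead reckoning drifts about 2.5 m over 10 seconds while the headset sits still, whereas the camera-corrected estimate stays within a fraction of a millimeter. The high-rate IMU is still useful between camera frames; it just can't be trusted on its own.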

Schroepfer caught himself here: "I'd have to spend hours taking you through all the grungy bits."
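The tracker/mapper split Schroepfer mentions is a common structure in SLAM systems: a lightweight tracker keeps up with every incoming frame, while a more expensive mapper updates the map in the background. The sketch below shows only that producer/consumer shape, using Python's standard `threading` and `queue` modules. The keyframe rule (every fifth frame), the sentinel object, and the plain list standing in for the map are all hypothetical, and it does not attempt the further multi-threading of the mapper itself.

```python
import queue
import threading

# Hypothetical sketch: a fast "tracker" thread hands keyframes to a slower
# "mapper" thread through a thread-safe queue. Not Oculus's implementation.

STOP = object()  # sentinel telling the mapper to exit


def tracker(frames, keyframe_queue, every_n=5):
    """Handle every frame; forward every n-th frame to the mapper as a keyframe."""
    for i, frame in enumerate(frames):
        # Per-frame pose estimation would happen here in a real tracker.
        if i % every_n == 0:
            keyframe_queue.put(frame)
    keyframe_queue.put(STOP)


def mapper(keyframe_queue, shared_map, map_lock):
    """Consume keyframes and add them to the shared map under a lock."""
    while True:
        keyframe = keyframe_queue.get()
        if keyframe is STOP:
            break
        with map_lock:
            shared_map.append(keyframe)  # stand-in for expensive map optimization


def run(num_frames=100):
    """Run tracker and mapper concurrently and return the finished map."""
    keyframe_queue = queue.Queue()
    shared_map = []
    map_lock = threading.Lock()
    tracker_thread = threading.Thread(
        target=tracker, args=(range(num_frames), keyframe_queue)
    )
    mapper_thread = threading.Thread(
        target=mapper, args=(keyframe_queue, shared_map, map_lock)
    )
    mapper_thread.start()
    tracker_thread.start()
    tracker_thread.join()
    mapper_thread.join()
    return shared_map


if __name__ == "__main__":
    print(run(100))
```

Because the queue is first-in, first-out and there is a single producer, the mapper receives keyframes in order. In a real system the tracker would also read the map while the mapper writes it, which is why access goes through a lock even in this small example.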

Part of the process was also extensive testing, for which they used a commercial motion tracking rig as ground truth. They'd track a user playing with the headset and controllers, using the OptiTrack setup to measure the precise motions made.

To see how the algorithms and sensing system performed, they'd basically play back the data from that session to a simulated version of the system: video of what the cameras saw, data from the IMU, and any other relevant metrics. If the simulation was close to the ground truth they'd collected externally, good. If it wasn't, the machine learning system would adjust its parameters and they'd run the simulation again. Over time, the system's output drew closer to the tracking data the OptiTrack rig had recorded.
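The replay-and-compare loop can be sketched in a few lines. Everything here is hypothetical: the exponential-smoothing "tracker", its single `alpha` parameter, the synthetic recorded session, and the plain grid search, which stands in for the machine learning system the article mentions. The score is root-mean-square error (RMSE) between the replayed trajectory and the ground-truth trajectory.

```python
import math
from typing import Optional, Sequence


def rmse(estimate: Sequence[float], truth: Sequence[float]) -> float:
    """Root-mean-square error between two equal-length trajectories."""
    if len(estimate) != len(truth) or not truth:
        raise ValueError("trajectories must be non-empty and the same length")
    return math.sqrt(sum((e - t) ** 2 for e, t in zip(estimate, truth)) / len(truth))


def replay(measurements: Sequence[float], alpha: float) -> list:
    """Hypothetical tracker: exponential smoothing of noisy position readings."""
    estimates = [measurements[0]]
    for m in measurements[1:]:
        estimates.append(alpha * m + (1.0 - alpha) * estimates[-1])
    return estimates


def tune(measurements, truth, candidates):
    """Replay the session for each parameter; keep the one closest to ground truth."""
    best_alpha: Optional[float] = None
    best_err = math.inf
    for alpha in candidates:
        err = rmse(replay(measurements, alpha), truth)
        if err < best_err:
            best_alpha, best_err = alpha, err
    return best_alpha, best_err


# Synthetic session (hypothetical): slow linear motion recorded by the
# ground-truth rig, plus alternating +/-5 cm noise in the headset's readings.
truth = [0.01 * i for i in range(200)]
measurements = [t + (0.05 if i % 2 == 0 else -0.05) for i, t in enumerate(truth)]
CANDIDATES = [a / 10 for a in range(1, 11)]

best_alpha, best_err = tune(measurements, truth, CANDIDATES)

if __name__ == "__main__":
    print("raw readings RMSE:", rmse(measurements, truth))
    print("best alpha:", best_alpha, "RMSE:", best_err)
```

Heavy smoothing (small `alpha`) suppresses noise but lags behind real motion, while light smoothing tracks motion but passes noise through; scoring each replay against ground truth is what lets the search find a balance between the two.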

Ultimately, it needed to be as good as or better than the standard Rift headset. Years after the original, no one would buy a headset that was a step down in any way, no matter how much cheaper it was.

"It's one thing to say…" said Schroepfer. "As we got towards the end of development, we actually had a couple of passionate Beat Saber players on the team, and they would play on the Rift and on the Quest. And the goal was, the same person should be able to get the same high score or better. That was a good way to reset our micro-metrics and say, well, this is what we actually need to achieve."

It doesn't hurt that it's cheaper, too. Lidar is expensive, and time-of-flight or structured-light approaches like Kinect's also bring the cost up. Yet those sensors massively simplify the problem, being 3D sensing tools to begin with.

"What we said was, can we get just as good without that? Because it will dramatically reduce the cost of this product," he said. "When you're talking to the computer vision team here, they're pretty bullish on cameras with really powerful algorithms behind them being the solution to many problems. So our hope is that in the long run, for most consumer applications, it's going to be inside-out tracking."

I pointed out that VR is not universally considered a healthy industry, and that technological solutions may not do much to solve a more multi-layered problem.

Schroepfer replied that there are basically three problems facing VR adoption: cost, friction, and content. Cost is self-explanatory, but it would be wrong to say it's gotten a lot cheaper over the years. PlayStation VR established a low-cost entry early on, but "real" VR has remained expensive. Friction is how difficult it is to get from "open the box" to "play a game," and it has historically been a sticking point for VR. Oculus Quest addresses both of these issues quite well, being priced at $400 and, as our review noted, very easy to just pick up and use. All that computer vision work wasn't for nothing.

Content is still thin on the ground, though. There have been some hits, like Superhot and Beat Saber, but nothing to really draw crowds to the platform (if it can be called that).

"What we're seeing is that as we get these headsets out, people come up with all sorts of creative ideas. I think we're in the early stages; these platforms take some time to marinate," Schroepfer admitted. "I think everyone should be patient, it's going to take a while. But this is the way we're approaching it, we're just going to keep plugging away, building better content, better experiences, better headsets as fast as we can."
