There is a point in a foray into new technological territory where you realize that you may have embarked on a Sisyphean task. Looking at the multitude of options available to support the project, you research them, read the documentation, and start working, only to find that just defining the problem may be more work than finding the actual solution.

Reader, this is where I found myself after two weeks on this machine learning adventure. I became familiar with the data, the tools, and the known approaches to problems with this kind of data, and I tried several approaches to solve what on the surface seemed like a simple machine learning problem: based on past performance, could we predict whether a given Ars title will be a winner in an A/B test?

Things did not go particularly well. In fact, as I was finishing this article, my latest attempt showed our algorithm to be about as accurate as a coin flip.

But at least it was a start. And in the process of getting there, I learned a lot about cleaning up and preprocessing the data that goes into any machine learning project.

Preparing the battlefield

Our data source is a log of the results of more than 5,500 headline A/B tests over the past five years, which is about as long as Ars has been running this kind of head-to-head comparison for every article that gets published. Since we have labels for all of this data (i.e., we know whether each title won or lost its A/B test), this would appear to be a supervised learning problem. All I really needed to do to prepare the data was make sure it was formatted correctly for the model I chose to use to build our algorithm.

I’m not a data scientist, so I wasn’t going to be building my own model anytime this decade. Fortunately, AWS provides a number of pre-built models suited to the task of processing text and designed specifically to operate within the confines of the Amazon cloud. There are also third-party models, such as those from Hugging Face, that can be used in the SageMaker universe. Each model seems to need data supplied to it in a particular way.

The choice of model in this case depends largely on the approach we are going to take to the problem. Initially, I saw two possible approaches to train an algorithm to get a probability of success for a given title:

- Binary classification: we simply determine the probability of a headline falling in the “winner” or “loser” column based on previous winners and losers. We can compare the probabilities for two headlines and pick the stronger candidate.

- Classification into multiple categories: We try to classify titles according to their click-through rate in several categories, ranking them from 1 to 5 stars, for example. We could then compare the scores of the leading candidates.

The second approach is much more difficult, and there is a major concern with either method that makes the second even less tenable: 5,500 tests, with 11,000 titles, is not a lot of data to work with in the grand AI/ML scheme of things.

So I went with binary classification for my first try, as it seemed the most likely to succeed. It also meant that the only data point I needed for each title (besides the title itself) was whether it won or lost the A/B test. I took my source data and reformatted it into a comma-separated values file with two columns: titles in one and “yes” or “no” in the other. I also used a script to remove all HTML markup from the titles (there were only a handful of tags). With the data almost entirely stripped down to the essentials, I uploaded it to SageMaker Studio so that I could use Python tools for the rest of the prep.
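The cleanup script itself is not shown in the article, so the following is only a minimal sketch of that step using the Python standard library. The column names `title` and `label`, the helper names, and the sample titles are all assumptions made for illustration.

```python
import csv
import io
from html.parser import HTMLParser


class _TagStripper(HTMLParser):
    """Collects only text content and discards any HTML tags."""

    def __init__(self):
        super().__init__(convert_charrefs=True)
        self.parts = []

    def handle_data(self, data):
        self.parts.append(data)


def strip_html(text):
    """Return text with HTML tags removed and whitespace normalized."""
    stripper = _TagStripper()
    stripper.feed(text)
    stripper.close()
    return " ".join("".join(stripper.parts).split())


def to_two_column_csv(records):
    """Build CSV text from (title, won) pairs, where won is a bool."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["title", "label"])  # hypothetical column names
    for title, won in records:
        writer.writerow([strip_html(title), "yes" if won else "no"])
    return buffer.getvalue()


# Hypothetical sample rows, not real Ars data.
sample = [
    ("A <em>hypothetical</em> winning title", True),
    ("A hypothetical losing title", False),
]
csv_text = to_two_column_csv(sample)
print(csv_text)
```

Using the `csv` module rather than joining strings by hand means titles that contain commas or quotation marks are quoted correctly.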

Then I had to choose the type of model and prepare the data. Again, much of the data preparation depends on the type of model the data will be fed into. Different types of natural language processing models (and problems) require different levels of data preparation.

After that comes “tokenization.” AWS tech evangelist Julien Simon explains it this way: “Data processing needs to first replace words with tokens, individual tokens.” A token is a machine-readable number that stands in for a string of characters. “So ‘ransomware’ would be word one,” he said, “‘crooks’ would be word two, ‘configuration’ would be word three … so a sentence then becomes a sequence of tokens, and you can feed that into a deep learning model and let it learn which ones are the good ones, which ones are the bad ones.”
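As a rough illustration of what Simon describes, here is a minimal sketch of word-level tokenization. It is a toy, not what any AWS model does internally: the whitespace splitting, the ID numbering, and the function names are assumptions for illustration.

```python
def build_vocab(sentences):
    """Assign each distinct word an integer ID in order of first appearance."""
    vocab = {}
    for sentence in sentences:
        for word in sentence.lower().split():
            if word not in vocab:
                vocab[word] = len(vocab) + 1  # IDs start at 1; 0 means "unknown"
    return vocab


def encode(sentence, vocab, unknown_id=0):
    """Turn a sentence into a sequence of token IDs."""
    return [vocab.get(word, unknown_id) for word in sentence.lower().split()]


# Hypothetical example text echoing the words in the quote.
titles = ["ransomware crooks configuration", "crooks love ransomware"]
vocab = build_vocab(titles)

print(vocab)  # {'ransomware': 1, 'crooks': 2, 'configuration': 3, 'love': 4}
print(encode("crooks love ransomware", vocab))  # [2, 4, 1]
```

Words the vocabulary has never seen map to the reserved ID 0, which is one common convention among several.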

Depending on the particular problem, you might want to delete some data. For example, if we were trying to do something like sentiment analysis (i.e. determining whether a given Ars title was of a positive or negative tone) or grouping the titles based on their topic, I would probably want to narrow the data down to the most relevant content by removing “stop words”, common words that are important for grammatical structure but don’t tell you what the text actually says (like most articles).
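Stop-word filtering can be sketched in a few lines. The article's tooling uses nltk, which ships its own stop-word lists; to stay self-contained, this sketch substitutes a small hand-picked set, which is an assumption and far shorter than a real list.

```python
# A tiny, hand-picked stop-word set for illustration only.
STOP_WORDS = {"a", "an", "the", "of", "to", "in", "and", "is", "for", "on"}


def remove_stop_words(title):
    """Lowercase a title, split on whitespace, and drop stop words."""
    return [word for word in title.lower().split() if word not in STOP_WORDS]


# Hypothetical title, not real Ars data.
print(remove_stop_words("The rise of a hypothetical ransomware gang"))
# ['rise', 'hypothetical', 'ransomware', 'gang']
```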

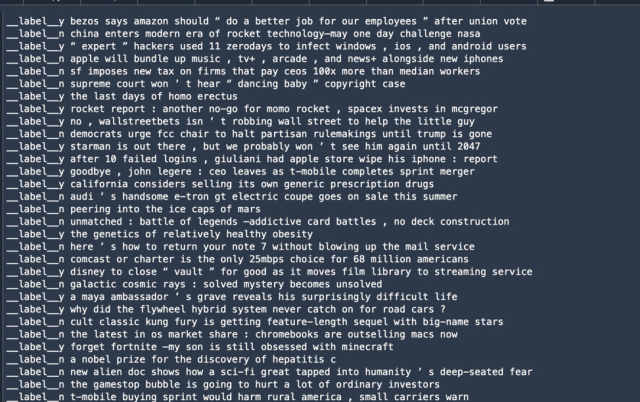

Above: stop-word filtering with nltk. Notice that punctuation sometimes gets bundled with a word into a single token; this would need to be cleaned up for some use cases.

However, in this case, stop words were potentially important parts of the data. After all, we’re looking for attention-grabbing title structures. So I chose to keep all the words. And on my first attempt at training, I decided to use BlazingText, a text processing model that AWS demonstrates on a classification problem similar to the one we are attempting. BlazingText requires that the “label” data (the data that calls out a particular piece of text’s classification) be prefixed with “__label__”. And instead of a comma-delimited file, the label data and the text to be processed are put on a single line in a text file, with the prefixed label first and the text after it.
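A minimal sketch of that line format follows. The `__label__` prefix comes from the text above; the helper name and the sample title are hypothetical, and the lowercasing reflects a preprocessing choice rather than a requirement of the format.

```python
def to_blazingtext_line(label, title):
    """Format one training example as '__label__<label> <text>'."""
    return f"__label__{label} {title.lower()}"


# Hypothetical example, not real Ars data.
print(to_blazingtext_line("yes", "A Hypothetical Winning Title"))
# __label__yes a hypothetical winning title
```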

Another part of preprocessing data for supervised machine learning involves dividing the data into two sets: one for training the algorithm and one for validating its results. The training set is usually the larger of the two. Validation data is typically about 10 to 20 percent of the total data.

There has been a lot of research into what the right amount of validation data actually is. Some of this research suggests that the sweet spot depends more on the number of parameters in the model used to create the algorithm than on the overall size of the data. In this case, since there was relatively little data for the model to process, I decided my validation data would be 10 percent.

In some cases, you may want to hold back another small pool of data to test the algorithm after it’s validated. But our plan here is to eventually use live Ars titles for testing, so I skipped that step.
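To make the proportions concrete, here is a minimal standard-library sketch of a shuffle-and-split with an optional held-out test pool. The function name, the seed, and the use of 11,000 placeholder rows (the title count mentioned earlier) are assumptions for illustration; the article's own split was done with sklearn.

```python
import random


def split_dataset(rows, validation_fraction=0.10, test_fraction=0.0, seed=42):
    """Shuffle rows and split them into train, validation, and test lists."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)  # seeded so the split is repeatable
    n_validation = int(round(len(shuffled) * validation_fraction))
    n_test = int(round(len(shuffled) * test_fraction))
    validation = shuffled[:n_validation]
    test = shuffled[n_validation:n_validation + n_test]
    train = shuffled[n_validation + n_test:]
    return train, validation, test


# Placeholder rows standing in for 11,000 labeled titles.
rows = list(range(11000))
train, validation, test = split_dataset(rows)
print(len(train), len(validation), len(test))  # 9900 1100 0
```

Passing a nonzero `test_fraction` would carve out the extra pool described above; leaving it at zero mirrors the decision to skip that step.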

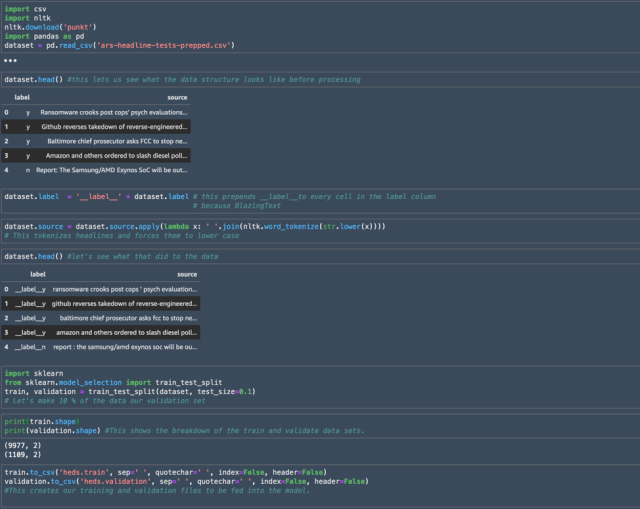

To do my final data preparation, I used a Jupyter notebook (an interactive web interface to a Python instance) to turn my two-column CSV into a data structure and process it. Python has specific data manipulation and data science toolkits that make these tasks fairly straightforward, and I used several of them here:

- pandas, a popular data analysis and manipulation module that does wonders for slicing and dicing CSV files and other common data formats.

- sklearn (or scikit-learn), a data science module that eliminates much of the work of preprocessing machine learning data.

- nltk, the Natural Language Toolkit, and more specifically the Punkt sentence tokenizer, to process the text of our titles.

- the csv module for reading and writing CSV files.

Here is how I used the notebook to create my training and validation sets from our CSV data:

I started by using pandas to import the data from the CSV file created from the initially cleansed and formatted data, calling the resulting object “dataset”. Running the dataset.head() command gave me a look at the headers of each column imported from the CSV file, as well as a preview of some of the data.

The pandas module allowed me to add the string “__label__” to all values in the label column, as required by BlazingText, and I used a lambda function to process the titles and force all words to lowercase. Finally, I used the sklearn module to split the data into two files that I would provide to BlazingText.
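The notebook code itself is not reproduced here, so the following is a minimal sketch of the steps just described, not the original cells. The column names `title` and `label`, the generated sample rows, the 10 percent split, and the `random_state` value are assumptions for illustration.

```python
import io

import pandas as pd
from sklearn.model_selection import train_test_split

# Hypothetical stand-in for the two-column CSV (ten made-up rows).
csv_text = "title,label\n" + "".join(
    f"Hypothetical Title Number {i},{'yes' if i % 2 == 0 else 'no'}\n"
    for i in range(10)
)

dataset = pd.read_csv(io.StringIO(csv_text))
print(dataset.head())  # column headers plus the first few rows

# Prefix every label with "__label__", as BlazingText expects.
dataset["label"] = "__label__" + dataset["label"].astype(str)

# Force every title to lowercase with a lambda.
dataset["title"] = dataset["title"].apply(lambda title: title.lower())

# One line per example: prefixed label, a space, then the text.
lines = dataset["label"] + " " + dataset["title"]

# Hold out 10 percent of the lines for validation.
train, validation = train_test_split(lines, test_size=0.10, random_state=42)

# These strings are what would be written to the two text files.
train_text = "\n".join(train) + "\n"
validation_text = "\n".join(validation) + "\n"
print(len(train), len(validation))
```

Writing `train_text` and `validation_text` to disk with ordinary file writes would produce the two files for the training job; the file names are left out because the article does not give them.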
