
A hot potato: Would you hand over years of your social media activity if it increased your chances of getting a job? That is the basic premise of Predictim, an artificial intelligence service that analyzes a babysitter's Facebook, Instagram and Twitter accounts to produce a "risk assessment". Unsurprisingly, the sites it relies on are not happy about it.

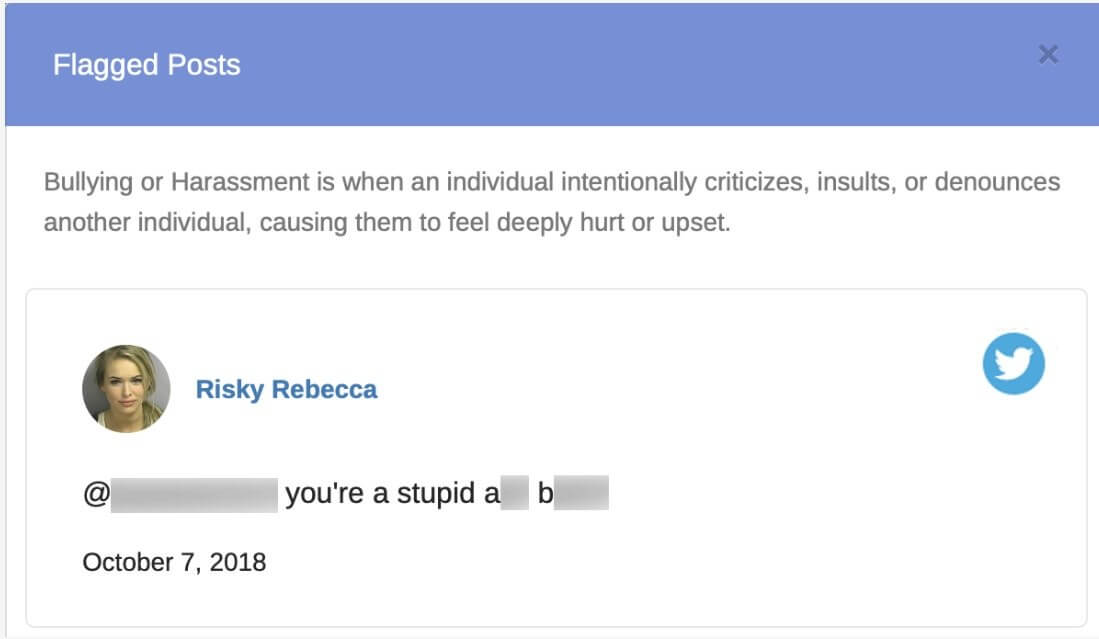

Predictim, funded by the University of California's Berkeley SkyDeck accelerator, uses natural language processing and computer vision algorithms to comb through years of social media posts. The system then generates a report containing a risk assessment score, flagged posts and a rating in four different categories: substance abuse, bullying and harassment, explicit content and disrespectful attitude.

Babysitters must agree to a parent's request for Predictim to access their social media accounts, but the system has drawn heavy criticism since its launch last month. It is not only invasive; letting an algorithm decide whether a person is employable is worrying. A few tweets showing a "disrespectful attitude" should not be enough to potentially blacklist someone from an entire industry. In addition, a post can always be taken out of context, and even if a person is a dangerous caregiver, she is unlikely to boast about her heroin use and major crimes on Facebook.

As reported by the BBC, Facebook revoked most of Predictim's access to its users last month after finding that the company violated its policy on the use of personal data. The social network is now deciding whether to block the company from its platform entirely, as Predictim says it continues to scrape public Facebook data for its reports.

Twitter went one step further, telling the BBC that it had recently blocked Predictim's access to the site's users. "We strictly prohibit the use of Twitter data and APIs for surveillance purposes, including for background checks," a spokeswoman said. "When we learned about Predictim's services, we conducted an investigation and revoked their access to Twitter's public APIs."

Predictim's black box algorithm analyzes babysitters' social media accounts and reduces them to a single fit score. Beyond gross. Downright evil.

Social media algorithms push you to be your worst, most outrageous self; hiring algorithms then reject you. https://t.co/fi0NlZsTBV

– DHH (@dhh) November 24, 2018

Predictim said that it uses human reviewers to check flagged posts and prevent false positives. It charges $25 for a single scan, $40 for two and $50 for three. The company said it is in talks with major "sharing economy" companies to provide vetting for ride-share drivers or accommodation hosts.

The scraping of profile data is a controversial subject. LinkedIn remains in a legal battle with hiQ Labs, which used the public information of the network's members to let companies monitor their employees, identifying "skill gaps or turnover risks months ahead of time".

Image Credit: Shutterstock / Pindyourine Vasily
