

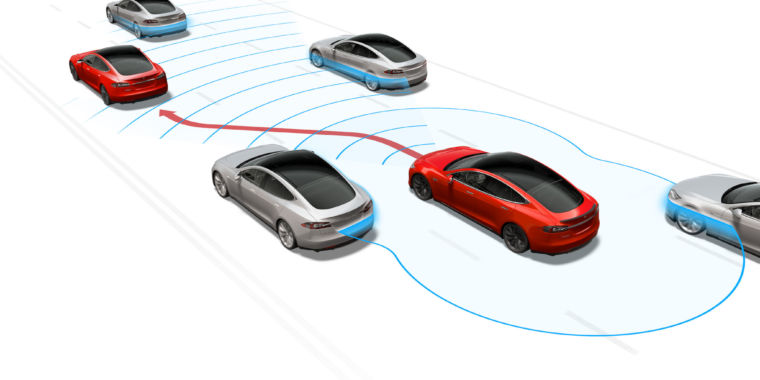

Over the weekend, Tesla expanded access to the latest version of the company’s highly controversial $10,000 automated driving feature. As is the Tesla way, CEO Elon Musk took to Twitter to break the news, saying owners could start requesting beta access as early as Saturday. However, Musk noted that “FSD 10.1 needs an additional 24 hours of testing, so tomorrow night.”

For the moment, access to the latest version of the software is in no way guaranteed. Instead, drivers must agree to have their driving monitored by Tesla for seven days. If they are judged to be safe drivers, they can gain access to the experimental software. In contrast, autonomous vehicle developers like Argo AI give their test drivers extensive training to ensure they can safely oversee experimental autonomous driving systems while those systems are being tested on public roads, which is a totally different task from driving a vehicle safely by hand.

Better not to brake

Tesla says five factors affect whether you’re a safe enough driver to then perform the task of overseeing an unfinished automated driving system that is currently under investigation by the National Highway Traffic Safety Administration for a dozen crashes into parked emergency vehicles, including one fatality.

Specifically, Tesla is leveraging the connected nature of its cars and its insurance product. This involves tracking the number of forward collision warnings per 1,000 miles, hard braking events (above 0.3 g), aggressive turns (above 0.4 g), whether the driver follows other vehicles too closely (less than a second behind), and any forced disengagement of Autopilot. Tesla then combines this information with actuarial data to calculate a predicted collision frequency, and that number is in turn converted into a safety score from 0 to 100.
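To make that pipeline concrete, here is a minimal sketch of how five such factors could be folded into a predicted collision frequency and then into a 0 to 100 score. It is not Tesla’s formula: the multiplicative form, the baseline, every multiplier, and all of the names (`DrivingSummary`, `predicted_collision_frequency`, `safety_score`) are assumptions made up for illustration. Only the five factors and the 0 to 100 range come from the description above.

```python
from dataclasses import dataclass


@dataclass
class DrivingSummary:
    """Hypothetical seven-day summary of the five factors described above."""
    fcw_per_1000_miles: float      # forward collision warnings per 1,000 miles
    hard_braking_share: float      # share of braking above 0.3 g (0.0 to 1.0)
    aggressive_turn_share: float   # share of turning above 0.4 g (0.0 to 1.0)
    close_following_share: float   # share of following under one second (0.0 to 1.0)
    forced_disengagements: int     # forced Autopilot disengagements


# All numbers below are illustrative placeholders, NOT Tesla's actuarial values.
BASE_FREQUENCY = 0.5
FCW_MULTIPLIER = 1.01
HARD_BRAKING_MULTIPLIER = 1.10
AGGRESSIVE_TURN_MULTIPLIER = 1.02
CLOSE_FOLLOWING_MULTIPLIER = 1.001
DISENGAGEMENT_MULTIPLIER = 1.30


def predicted_collision_frequency(s: DrivingSummary) -> float:
    """Scale a baseline frequency up by one multiplier per risk factor."""
    pcf = BASE_FREQUENCY
    pcf *= FCW_MULTIPLIER ** s.fcw_per_1000_miles
    pcf *= HARD_BRAKING_MULTIPLIER ** (100 * s.hard_braking_share)
    pcf *= AGGRESSIVE_TURN_MULTIPLIER ** (100 * s.aggressive_turn_share)
    pcf *= CLOSE_FOLLOWING_MULTIPLIER ** (100 * s.close_following_share)
    pcf *= DISENGAGEMENT_MULTIPLIER ** s.forced_disengagements
    return pcf


def safety_score(s: DrivingSummary) -> int:
    """Map the predicted frequency linearly onto a score clamped to 0..100."""
    raw = 110.0 - 20.0 * predicted_collision_frequency(s)
    return int(max(0, min(100, round(raw))))


if __name__ == "__main__":
    cautious = DrivingSummary(0.0, 0.0, 0.0, 0.0, 0)
    risky = DrivingSummary(20.0, 0.05, 0.05, 0.30, 1)
    print(safety_score(cautious), safety_score(risky))
```

In a model shaped like this one, any recorded hard-braking event lowers the score regardless of why the driver braked, because the inputs count events without recording what caused them.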

Needless to say, the reactions to this plan have not been entirely positive. Last week, authorities in San Francisco, which has perhaps the highest concentration of Teslas in the world, raised concerns about the increased number of cars testing FSD 10.1 on their streets. California regulators are also investigating whether Tesla’s “Full Self-Driving” claim is misleading.


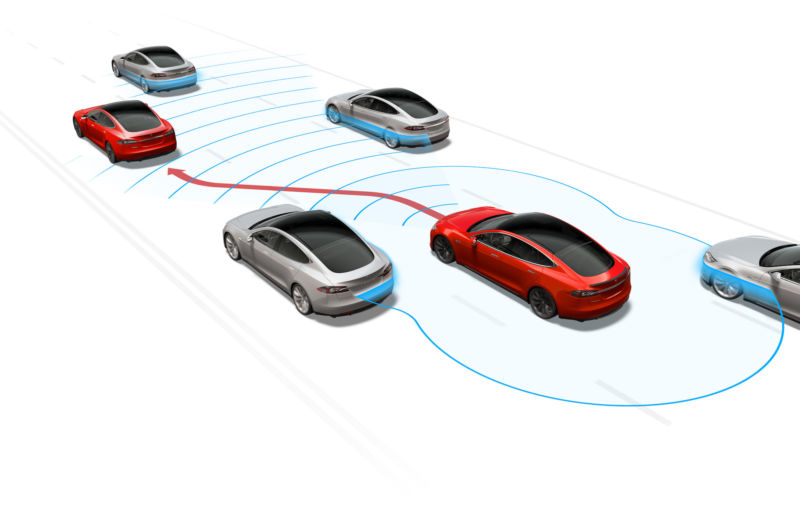

Twitter user and Tesla owner Stephen Pallotta posted videos of FSD Beta 10.1 in action, and they are not encouraging.

Twitter


Ignoring the fact that being a safe driver is by no means a guarantee that you are also a good supervisor of an experimental robot driving system, does this behavior make you feel safe?

Twitter

These concerns are well founded, based on early reports from Tesla owners. Podcaster Stephen Pallotta posted videos on Twitter showing how his car behaved with the latest version. One shows the car crossing a double yellow line into oncoming traffic; others show phantom braking events and even a failure to slow down for pedestrians in a crosswalk.

Perhaps more concerning are reports from an investor named Gary Black, whose tweets said he was able to raise his safety score from 91 to 95 by running yellow lights, not braking for a cyclist who ran a red light at an intersection, and rolling through stop signs. We would ask Tesla for comment on those accounts, but the company disbanded its press office in late 2020, so there’s no one to ask.

So, as Sergeant Esterhaus said in Hill Street Blues, “Let’s be careful out there.”
