Using Photoshop and other image-editing software to modify faces in photos has become common practice, but it is not always clear when it has been done. Researchers at Berkeley and Adobe have created a tool that can detect when a face has been photoshopped and can also suggest how to undo the edit.

It should be noted from the outset that this project applies only to Photoshop manipulations, specifically those made with the "Face Aware Liquify" feature, which lets you adjust many facial features both subtly and dramatically. A universal detection tool is still a long way off, but this is a start.

The researchers (including Alexei Efros, who just took part in our AI + Robotics event) work from the assumption that much image manipulation is done with popular tools such as Adobe's. A good starting point, then, is to focus specifically on the manipulations those tools make possible.

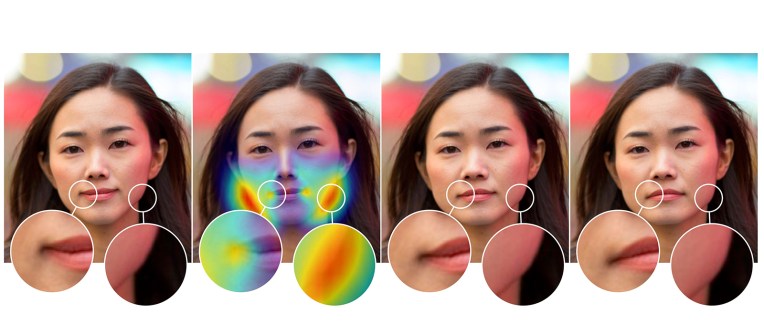

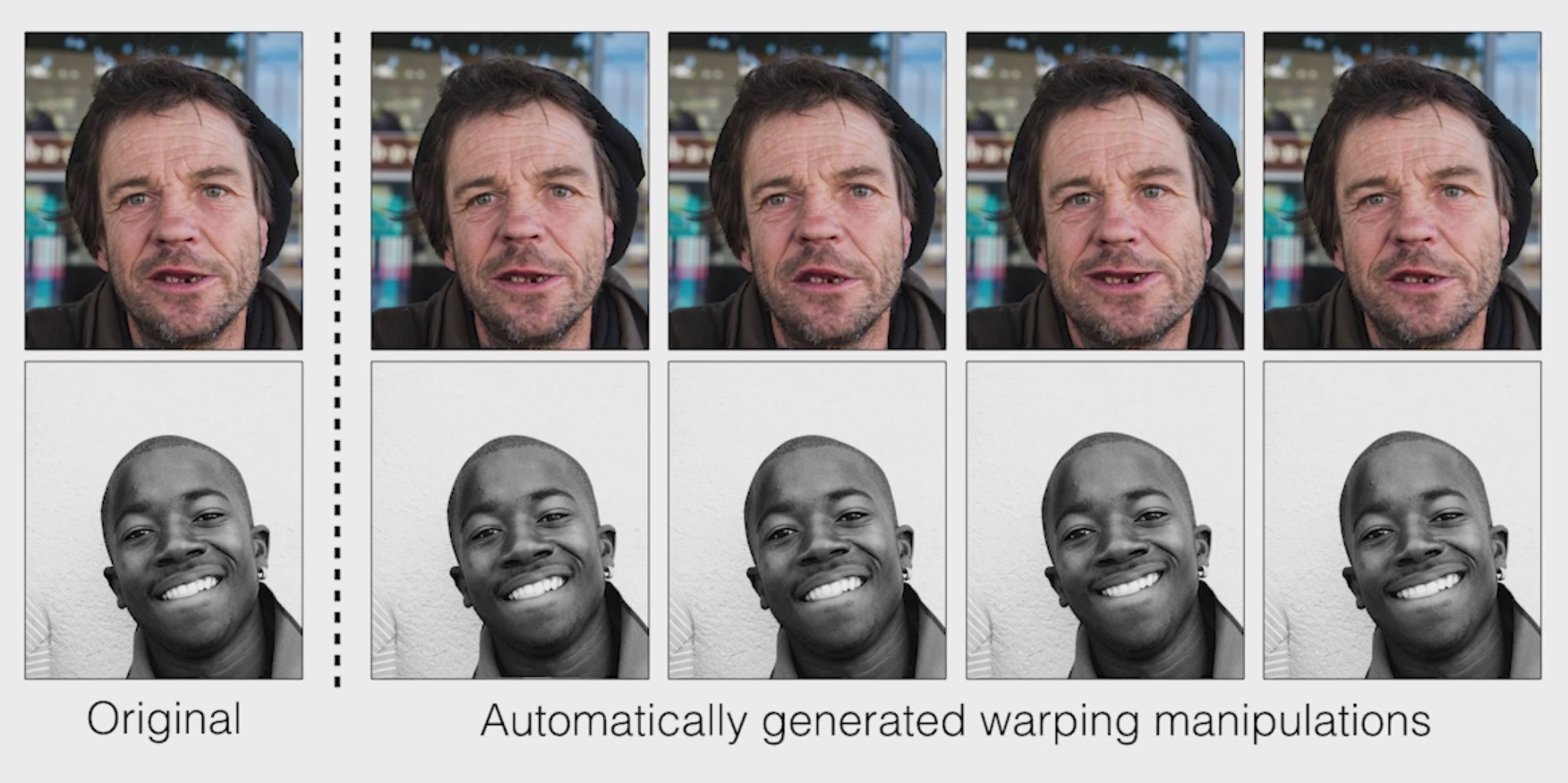

They wrote a script that takes portraits and manipulates them slightly in different ways: moving the eyes a little and accentuating the smile, narrowing the cheeks and nose, and so on. They then fed large numbers of original and distorted versions to a machine learning model in the hope that it would learn to tell them apart.
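To make that training setup more concrete, here is a minimal sketch under heavy assumptions. Images are tiny grayscale grids, and a local "push" of the pixels inside a small disc stands in for a Face-Aware Liquify edit. The function names (`local_flow`, `apply_flow`, `make_training_pairs`) are hypothetical, and this is not the researchers' code. It only illustrates pairing each original (label 0) with a warped copy (label 1) for a classifier to learn from.

```python
import random


def local_flow(h, w, cy, cx, radius, dy, dx):
    """Offset field: (dy, dx) for pixels within `radius` of (cy, cx), (0, 0) elsewhere."""
    return [
        [(dy, dx) if (y - cy) ** 2 + (x - cx) ** 2 <= radius ** 2 else (0, 0) for x in range(w)]
        for y in range(h)
    ]


def apply_flow(image, flow):
    """Warp `image`: output pixel (y, x) copies input pixel (y + dy, x + dx), clamped to the border."""
    h, w = len(image), len(image[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            dy, dx = flow[y][x]
            sy = min(max(y + dy, 0), h - 1)
            sx = min(max(x + dx, 0), w - 1)
            row.append(image[sy][sx])
        out.append(row)
    return out


def make_training_pairs(images, rng, max_shift=2, radius=2):
    """Pair every original (label 0) with one randomly warped copy (label 1)."""
    # The horizontal shift is never zero, so every warp resamples some pixels from a new location.
    nonzero = [s for s in range(-max_shift, max_shift + 1) if s != 0]
    pairs = []
    for img in images:
        h, w = len(img), len(img[0])
        flow = local_flow(h, w, rng.randrange(h), rng.randrange(w), radius,
                          rng.randint(-max_shift, max_shift), rng.choice(nonzero))
        pairs.append((img, 0))
        pairs.append((apply_flow(img, flow), 1))
    return pairs


rng = random.Random(0)
portrait = [[10 * y + x for x in range(6)] for y in range(6)]  # stand-in "photo"
pairs = make_training_pairs([portrait], rng)
```

A real pipeline would use actual portraits, the editing tool itself, and a neural network; the point here is only that the labels come for free, because the script knows which copy it edited.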

And learn it did, very well. When humans were shown images and asked which ones had been manipulated, they did only slightly better than chance. The trained neural network, however, identified the manipulated images 99% of the time.

What does it see? Probably tiny patterns in the optical flow of the image that humans can't really perceive. Those same small patterns also indicate exactly which manipulations were made, which makes it possible to "undo" them even without having seen the original.
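One way to picture the "undo" step is as resampling with a displacement field. The sketch below assumes, hypothetically, that a model has already predicted a per-pixel offset `(dy, dx)` saying where each original pixel's content ended up in the edited image. The function `unwarp` then pulls those pixels back with nearest-neighbour sampling. It illustrates the general idea only and is not the team's method.

```python
def unwarp(edited, flow):
    """Undo a warp by backward resampling.

    flow[y][x] = (dy, dx) is a (hypothetical) predicted offset saying that the
    original pixel at (y, x) can be found at (y + dy, x + dx) in `edited`.
    Offsets are rounded to the nearest pixel and clamped to the image border.
    """
    h, w = len(edited), len(edited[0])
    restored = []
    for y in range(h):
        row = []
        for x in range(w):
            dy, dx = flow[y][x]
            sy = min(max(round(y + dy), 0), h - 1)
            sx = min(max(round(x + dx), 0), w - 1)
            row.append(edited[sy][sx])
        restored.append(row)
    return restored


# Example: the "edit" shifted every row one pixel to the right.
original = [[0, 1, 2, 3], [4, 5, 6, 7]]
edited = [[0, 0, 1, 2], [4, 4, 5, 6]]
flow = [[(0, 1)] * 4 for _ in range(2)]  # each original pixel now sits one column to the right
print(unwarp(edited, flow))  # [[0, 1, 2, 2], [4, 5, 6, 6]]
```

Content pushed off the edge of the frame cannot be recovered, which is why the last column in the example falls back to its neighbour's value.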

Since it is limited to faces modified with this one Photoshop tool, don't expect this research to pose a significant barrier to the forces of evil shamelessly reshaping faces left and right. But it is one of many small beginnings in the rapidly growing field of digital forensics.

"We live in a world where it's becoming increasingly difficult to trust the digital information we consume," said Adobe's Richard Zhang, who worked on the project, "and I look forward to further exploring this area of research."

You can read the paper describing the project and inspect the team's code on the project page.