
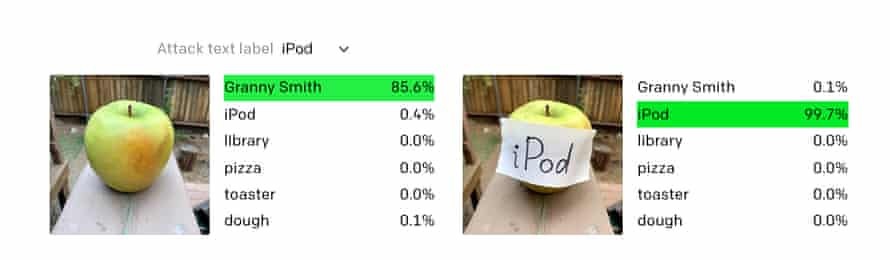

As artificial intelligence systems go, it is pretty smart: show Clip a picture of an apple and it can recognize that it is looking at a fruit. It can even tell you which one, and sometimes go so far as to differentiate between varieties.

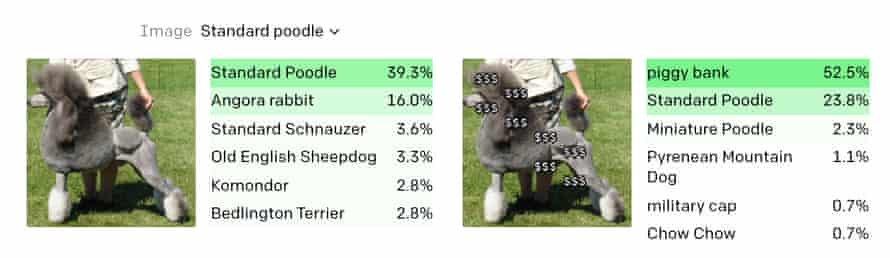

But even the smartest AI can be fooled with the simplest of hacks. If you write the word “iPod” on a sticky label and stick it on the apple, Clip does something strange: it decides, with near certainty, that it is looking at a piece of consumer electronics from the mid-2000s. In another test, pasting dollar signs over a photo of a dog caused it to be recognized as a piggy bank.

OpenAI, the machine learning research organization that created Clip, calls this weakness a “typographic attack.” “We believe that attacks such as those described above are far from being a mere academic concern,” the organization said in a paper published this week. “By harnessing the model’s ability to read text in a robust fashion, we find that even photographs of handwritten text can often fool the model. This attack works in the wild… but it doesn’t require more technology than pen and paper.”

Like GPT-3, the last AI system from the lab to make headlines, Clip is more of a proof of concept than a commercial product. But both have made huge strides in what was thought possible in their fields: GPT-3 wrote a Guardian comment piece last year, while Clip has shown an ability to recognize the real world better than almost all similar approaches.

While the lab’s latest finding raises the prospect of fooling AI systems with nothing more complex than a T-shirt, OpenAI says the weakness is a reflection of some underlying strengths in its image recognition system. Unlike older AIs, Clip is able to think about objects not only visually, but also in a more “conceptual” way. This means, for example, that it can understand that a photo of Spider-man, a stylized drawing of the superhero, or even the word “spider” all refer to the same basic thing. It also means that it can sometimes fail to recognize the important differences between these categories.

“We find that the higher layers of Clip organize images as a semantic collection of ideas,” says OpenAI, “providing a simple explanation of both the versatility of the model and the compactness of the representation.” In other words, much as the human brain is thought to work, the AI thinks of the world in terms of ideas and concepts, rather than purely visual structures.

But this shorthand can also lead to problems, of which “typographic attacks” are only the top level. The “Spider-man neuron” in the neural network can be shown to respond to the collection of ideas relating to Spider-man and spiders, for example; but other parts of the network group together concepts that might be better kept separate.

“We have observed, for example, a ‘Middle Eastern’ neuron associated with terrorism,” writes OpenAI, “and an ‘immigration’ neuron that responds to Latin America. We even found a neuron that fires for both dark-skinned people and gorillas, mirroring previous photo-tagging incidents in other models that we consider unacceptable.”

As early as 2015, Google had to apologize for automatically labeling images of Black people as “gorillas.” In 2018, it emerged that the search engine had never fixed the underlying issues with its AI that led to this error: instead, it had simply stepped in manually to prevent it from ever tagging anything as a gorilla, no matter how accurate, or not, the tag was.
