Content moderators are responsible for determining what we see and what we do not see on social media.

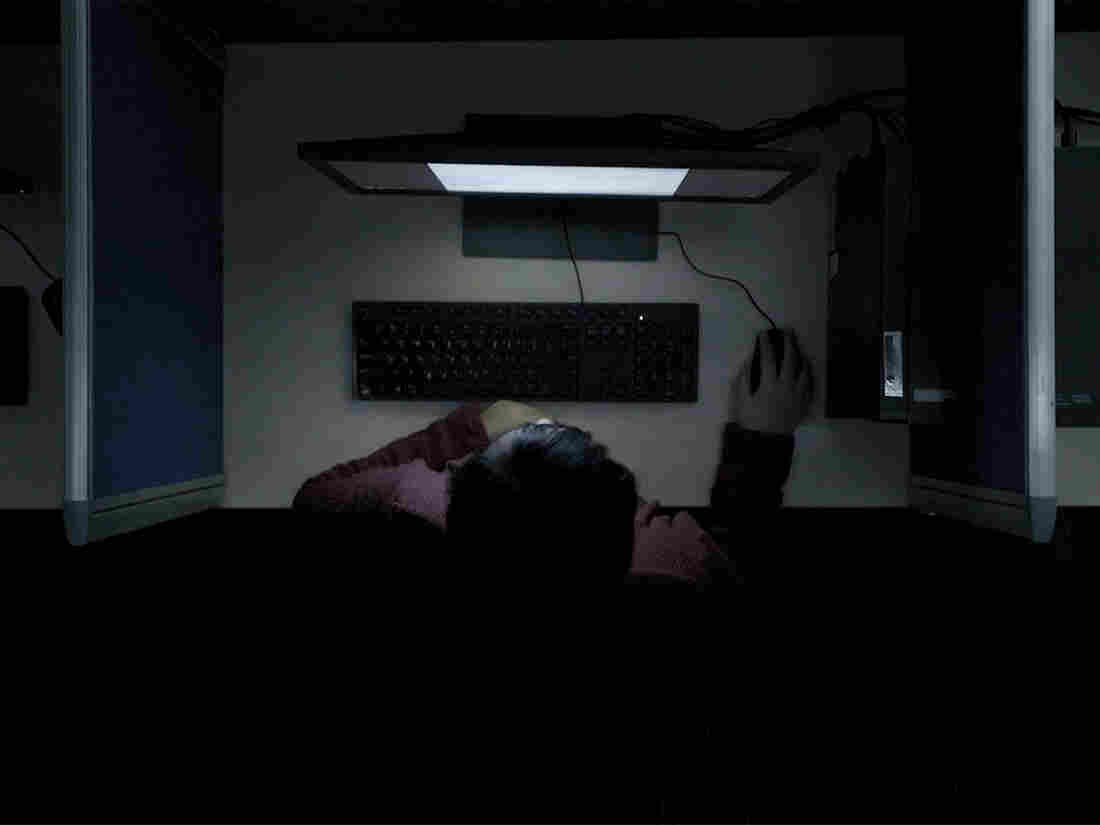

Courtesy of gebrueder beetz filmproduktion

Thousands of content moderators work tirelessly to ensure that Facebook, YouTube, Google and other online platforms remain free of toxic content. This can include trolling, sexually explicit photos or videos, threats of violence, etc.

These efforts – led by humans and algorithms – have been hotly contested in recent years. In April, Mark Zuckerberg explained before a congressional committee how Facebook would work to reduce the prevalence of propaganda, hate speech and other harmful content on the platform.

"By the end of this year, more than 20,000 people will work on security and content review," Zuckerberg said.

The Cleaners, a documentary by directors Hans Block and Moritz Riesewieck, seeks to shed light on how this work is done. The film follows five content moderators and reveals what their jobs entail.

"I've seen hundreds of beheadings, sometimes they're lucky because it's a very sharp blade to which they are accustomed," says one content moderator in a clip from the film.

Block and Riesewieck went further into the harsh realities of working as a content moderator in an interview with All Things Considered.

Highlights of the interview

On a typical day for a Facebook content moderator

They see all the things that we do not want to see online, on social media. It could be terror, it could be beheading videos like the ones the voice described earlier. It could be pornography, sexual abuse, necrophilia, on the one hand.

On the other hand, there could be content that is useful for political debates, or for raising awareness about war crimes, and so on. So they have to moderate thousands of photos every day, and they have to be quick to reach the score for the day. … It's sometimes so many photos a day. And then they have to decide whether to delete it or let it stay up.

On Facebook's decision to remove the Pulitzer Prize-winning "napalm girl" photo

This content moderator decides that he would rather delete it because it depicts a young, naked child. So he applies the rule against child nudity, which is strictly prohibited.

So there is always a need to distinguish between so many different cases. … There are still so many gray areas in which, content moderators told us, they sometimes had to decide by gut instinct.

On the burden of distinguishing harmful content from news or art

It's a huge task – it's so complex to distinguish between all these different kinds of rules. … These young Filipino workers get three to five days of training, which is not enough to do a job like this.

On the impact of daily exposure to toxic content

Many of these young people are severely traumatized by the work.

The symptoms vary widely. Some people told us they are afraid to go to public places because they see terrorist attacks every day. Or they are afraid to have an intimate relationship with a boyfriend or girlfriend because they watch videos of sexual abuse every day. So this is the kind of effect this work has ….

Manila [capital of the Philippines] is a place where analog toxic waste from the Western world was sent for years on container ships. And today, digital waste is brought there. Now, thousands of young content moderators in air-conditioned office towers are making their way through an infinite toxic sea of images and tons of intellectual garbage.

Emily Kopp and Art Silverman edited and produced this story for broadcast. Cameron Jenkins produced this story for digital.