In 2014, researchers at the MIT Media Lab designed an experiment called Moral Machine. The idea was to create a game-like platform that would crowdsource people's decisions on how self-driving cars should prioritize lives in different variations of the "trolley problem." In the process, the data generated would provide insight into the collective ethical priorities of different cultures.

The researchers never predicted the experiment's viral reception. Four years after the platform went live, millions of people in 233 countries and territories have logged 40 million decisions, making it one of the largest studies ever done on global moral preferences.

A new paper published in Nature presents the analysis of that data and reveals how much ethics diverge across cultures on the basis of culture, economics and geographic location.

The classic trolley problem goes like this: you see a runaway trolley speeding down the tracks, about to hit and kill five people. You have access to a lever that could switch the trolley to a different track, where a different person would meet an untimely end. Should you pull the lever and end one life to spare five?

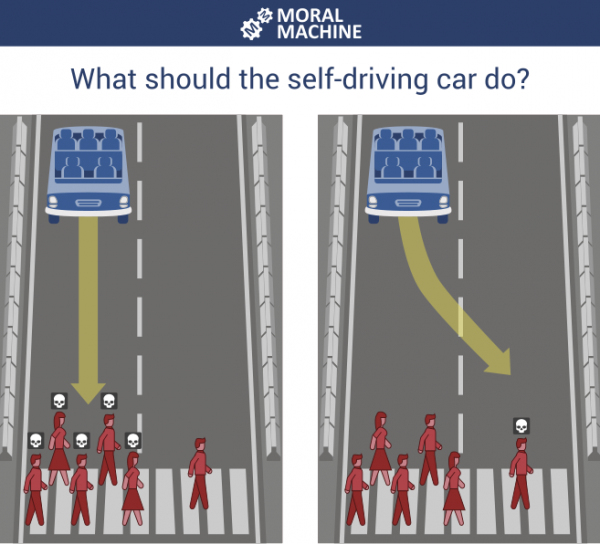

The Moral Machine took that idea to test nine different comparisons shown to polarize people: should a self-driving car prioritize humans over pets, passengers over pedestrians, more lives over fewer, women over men, young over old, fit over sickly, higher social status over lower, law-abiders over law-benders? And finally, should the car swerve (take action) or stay on course (inaction)?

An example of a question asked to participants of Moral Machine.

Moral Machine

Rather than pose one-to-one comparisons, however, the experiment presented participants with various combinations, such as whether a self-driving car should continue straight ahead and kill three elderly pedestrians or swerve into a barricade and kill three young passengers.

The researchers found that countries' preferences differ widely, but that they also correlate strongly with culture and economics. For example, participants from collectivist cultures, such as China and Japan, are less likely to spare the young over the old, perhaps, the researchers hypothesized, because of a greater emphasis on respecting the elderly.

Similarly, participants from poorer countries with weaker institutions are more tolerant of jaywalkers than of pedestrians who cross legally. And participants from countries with a high level of economic inequality show greater gaps between the treatment of individuals with high and low social status.

And, in what boils down to the essential question of the trolley problem, the researchers found that the sheer number of people in harm's way was not always the dominant factor in choosing which group should be spared. The results showed that participants from individualistic cultures, such as the United Kingdom and the United States, placed a stronger emphasis on sparing more lives given all the other choices – perhaps, in the authors' view, because of the greater importance given to the value of each individual.

Countries in close proximity to one another also showed closer moral preferences, with three dominant clusters in the West, East and South.

The researchers acknowledged that the results could be skewed, given that the study participants were self-selected and therefore more likely to be connected to the internet, of high social standing and tech-savvy. But those interested in riding in self-driving cars would likely have the same characteristics.

The study has interesting implications for countries currently testing self-driving cars, since these preferences could play a role in shaping the design and regulation of such vehicles. Carmakers may find, for example, that Chinese consumers would more readily enter a car that protected its occupants over pedestrians.

But the authors of the study emphasized that the results are not meant to dictate how different countries should act. In fact, in some cases, the authors felt that technologists and policymakers should override the collective public opinion. Edmond Awad, an author of the paper, gave the social status comparison as an example. "It seems concerning that people found it okay to a significant degree to spare higher status over lower status," he said. "It's important to say, 'Hey, we could quantify that' instead of saying, 'Oh, maybe we should use that.'" The results, he said, should be used by industry and government as a basis for understanding how the public would react to the ethics of different design and policy decisions.

Awad hopes the results will also help technologists think more deeply about the ethics of AI beyond self-driving cars. "We used the trolley problem because it's a very good way to collect this data, but we hope the discussion of ethics doesn't stay within that theme," he said. "The discussion should move to risk analysis – about who is at more risk or less risk – instead of saying who's going to die or not, and also about how bias is happening." How these results could translate into more ethical design and regulation of AI is something he hopes to study further in the future.

"In the last two years, more people have started talking about the ethics of AI," Awad said. "More people have started becoming aware that AI could have different ethical consequences for different groups of people. The fact that we see people engaged with this – I think that's something promising."