When you watch a modern blockbuster like The Avengers, it's hard to avoid the feeling that what you're seeing is almost entirely computer-generated imagery, from the scenery to the fantastical creatures. But if there is one thing you can count on to be 100% real, it's the actors. We may have virtual pop stars like Hatsune Miku, but there has never been a world-renowned virtual movie star.

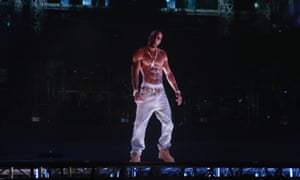

Even this connection with physical reality, however, is no longer absolute. You may have already seen examples of what is possible: Peter Cushing (or his likeness) appearing in Rogue One: A Star Wars Story more than 20 years after his death, or Tupac Shakur performing from beyond the grave at Coachella in 2012. We have also seen the terrifying potential of deepfakes: manipulated images that could play a dangerous role in the phenomenon of fake news. Jordan Peele's remarkable fake Obama video is a key example. Could technology soon make professional actors redundant?

Like most of the above examples, the virtual Tupac is a digital human, and it was produced by the special effects company Digital Domain. Such technology is becoming more and more advanced. Darren Hendler, director of the company's digital human group, explains that it is actually a "digital prosthesis", like a suit that a real human being must wear.

"The most important thing in creating a digital human is to get that performance. Someone must be behind all that," he explains. "There is usually [someone] playing the role of the deceased. Someone who will really study their movements, their twitches, their body language."

Digital and digitally modified humans are commonplace in modern cinema. Recent examples include the de-aging of Samuel L Jackson in Captain Marvel and Sean Young's likeness in Blade Runner 2049. And you can almost guarantee the use of digital humans in any modern story-driven video game: motion-captured actors give the characters realistic movements and facial expressions.

Hendler insists that the performer's skills will make or break any digital human, which is of little use without a real person wearing it like a second skin. Virtual humans, on the other hand, could function autonomously, with their speech and expressions dictated by AI. Their development and use is often limited to research projects and is therefore not visible to the public, but that may change soon.

With funding from the UK government's Audience of the Future program, Maze Theory will lead the development of a virtual reality game based on the BBC show Peaky Blinders. As CEO Ian Hambleton explains, the use of "narrative characters of artificial intelligence" promises a whole new experience. "For example, if you become really aggressive and confront a character, they will react; and they will change not only what they say, but also their body language, their facial expressions," he says. An AI "black box" will drive the performances.

Could we one day see aesthetically compelling digital humans combined with AI-driven virtual humans to produce fully artificial actors? This could theoretically lead to performances without the need for human actors. Yuri Lowenthal, an actor whose roles include the lead in Spider-Man on PS4, wonders: "Your everyday person isn't in the business of voluntarily having their data captured on a large scale, like I am. When people record all the data of my performances, as well as the details of my face and my voice, what does the future look like? How long will it take before you can create a performance from scratch?"

"It's still quite far off," says Hendler. "Artificial intelligence is growing so fast that it's very difficult for us to predict … I would say that in the next five to ten years, [we’ll see] things that are able to build semi-plausible versions of a complete facial performance."

The voice is also a factor, says Arno Hartholt, director of research and development at the Institute for Creative Technologies at the University of Southern California, because it would be very difficult to artificially generate a new performance from clips of a real actor's speech. "It would have to be like a library of performances," he says. "You need many examples, not only of how a voice sounds naturally, but also of how it sounds when it's angry. Or maybe it's both angry and hurt, or out of breath. The rhythm of the speech."

It's not even as simple as collecting a large amount of existing performance data because, as Hartholt points out, the many characters played by the same actor will not produce a consistent data set of their speech. The human actor's job is safe, at least for the moment.

Nevertheless, Hendler believes that advances in digital and virtual human technologies will become more visible to the public, literally getting closer to home, over the next 10 years or so: "You can have a big screen on your wall and your own personal virtual assistant with whom you can talk and interact: a virtual human who has a face and moves around the house with you. For your bed, your fridge, your stove and the games you play."

"It may sound scary, but some things have been coming for a while."
