
The advances in astronomical observation over the last century have allowed scientists to build a remarkably successful model of how the cosmos works. It makes sense – the better you can measure something, the more you learn.

But when it comes to how quickly our Universe is expanding, some new cosmological measurements are leaving us even more confused.

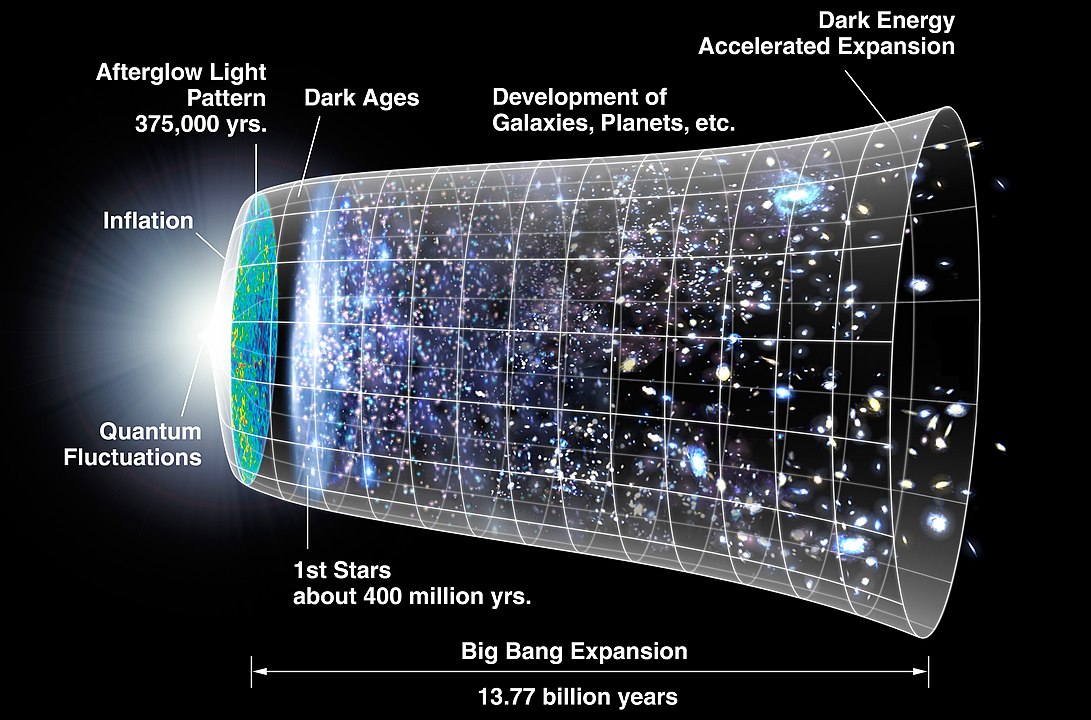

We have known since the 1920s that the Universe is expanding – the more distant a galaxy is, the faster it is moving away from us. In fact, in the 1990s, the rate of expansion was found to be accelerating.

The current rate of expansion is described by what is called the "Hubble constant" – a fundamental cosmological parameter.

Until recently, it seemed we were converging on an accepted value for the Hubble constant. But a mysterious discrepancy has appeared between the values measured using different techniques.

Now, a new study, published in Science, presents a method that can help solve the mystery.

The problem of precision

The Hubble constant can be estimated by combining measurements of the distances to other galaxies with the speed at which they are moving away from us.

At the turn of the century, scientists agreed that the value was about 70 kilometers per second per megaparsec – a megaparsec is a little more than 3 million light-years. But in recent years, new measurements have shown that this might not be the definitive answer.
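To make the units concrete, here is a minimal sketch of the arithmetic, assuming the simple linear relation (Hubble's law, speed = Hubble constant × distance), which only holds well for relatively nearby galaxies. The function names are illustrative and not taken from the study.

```python
# Standard conversion factors (SI units).
METRES_PER_PARSEC = 3.0857e16
METRES_PER_LIGHT_YEAR = 9.4607e15


def megaparsecs_to_light_years(distance_mpc):
    """Convert a distance in megaparsecs to light-years."""
    return distance_mpc * 1e6 * METRES_PER_PARSEC / METRES_PER_LIGHT_YEAR


def recession_velocity_km_s(hubble_constant, distance_mpc):
    """Hubble's law: speed (km/s) = H0 (km/s/Mpc) * distance (Mpc)."""
    return hubble_constant * distance_mpc


def hubble_constant_from(velocity_km_s, distance_mpc):
    """Invert Hubble's law for a single galaxy: H0 = speed / distance."""
    return velocity_km_s / distance_mpc


if __name__ == "__main__":
    # One megaparsec expressed in light-years.
    print(megaparsecs_to_light_years(1.0))
    # Speed of a galaxy 100 Mpc away if H0 is 70 km/s/Mpc.
    print(recession_velocity_km_s(70.0, 100.0))
```

In practice a single galaxy is never enough: real estimates combine many galaxies and account for their individual motions, which this sketch ignores.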

If we estimate the Hubble constant using observations of the local universe as it is today, we get a value of 73. But we can also use observations of the afterglow of the Big Bang – the "cosmic microwave background" – to estimate the Hubble constant.

This "early universe" measurement gives a lower value of about 67.

Worryingly, both measurements are reported to be precise enough that the discrepancy is a real problem. Astronomers euphemistically call this a "tension" in the exact value of the Hubble constant.
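A common way to put a number on such a tension is to divide the gap between two measurements by their combined uncertainty. The sketch below assumes the two measurements are independent with Gaussian errors. The error bars in the usage example are placeholders chosen for illustration, since the article does not quote any.

```python
import math


def tension_in_sigma(value_a, error_a, value_b, error_b):
    """Gap between two independent measurements, in units of their
    combined (quadrature-summed) one-sigma uncertainty."""
    combined_error = math.sqrt(error_a ** 2 + error_b ** 2)
    return abs(value_a - value_b) / combined_error


if __name__ == "__main__":
    # 73 and 67 are the values quoted in the text; the uncertainties
    # (1.5 and 0.5) are hypothetical placeholders, not published figures.
    print(tension_in_sigma(73.0, 1.5, 67.0, 0.5))
```

The smaller the error bars on each measurement, the more standard deviations the same gap represents, which is why improved precision has made the disagreement harder to dismiss.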

If you are the worrying type, the tension points to some unknown systematic problem with one or both of the measurements. If you are the excitable type, the discrepancy might be a clue to new physics that we did not know about before.

Although it has been very successful so far, our cosmological model may be wrong, or at least incomplete.

The expansion of the universe. (NASA / WMAP)


Distant versus local

To get to the bottom of the discrepancy, we need a better link between the distance scales of the very local and the very distant universe.

The new paper presents a neat approach to this challenge. Many estimates of the expansion rate rely on accurate measurements of the distances to objects. But this is really hard to do: we cannot just run a tape measure across the Universe.

A common approach is to use "type Ia" supernovae (exploding stars). These are incredibly bright, so we can see them at great distances. As we know how bright they should be, we can calculate their distance by comparing their apparent brightness with their known brightness.
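The comparison of apparent and known brightness is usually written as the "distance modulus", which follows from the inverse-square law for light. The sketch below is a simplified illustration that ignores dust and cosmological corrections. The absolute magnitude used in the example is a hypothetical round number, not a calibrated value.

```python
def distance_from_magnitudes_mpc(apparent_mag, absolute_mag):
    """Distance in megaparsecs from the distance modulus:
    m - M = 5 * log10(d / 10 parsecs)."""
    distance_parsecs = 10 ** ((apparent_mag - absolute_mag) / 5 + 1)
    return distance_parsecs / 1e6


if __name__ == "__main__":
    # Hypothetical supernova: observed magnitude 16, assumed absolute
    # magnitude -19 (illustrative values only).
    print(distance_from_magnitudes_mpc(16.0, -19.0))
```

Because the inferred distance depends directly on the assumed absolute magnitude, any error in that "known" brightness shifts every supernova distance by the same factor.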

To derive the Hubble constant from supernova observations, the supernovae must be calibrated against an absolute distance scale, because there is still a fairly large uncertainty in their intrinsic brightness.

Currently, these "anchors" are very nearby (and therefore very accurate) distance markers, such as Cepheid variable stars, which brighten and dim periodically.

If we had absolute distance anchors further out in the cosmos, the supernova distances could be calibrated more precisely over a wider cosmic range.

Remote anchors

The new work has dropped a couple of new anchors by exploiting a phenomenon called gravitational lensing.

By looking at how light from a background source (such as a galaxy) bends under the gravity of a massive object in front of it, we can work out the properties of that foreground object.

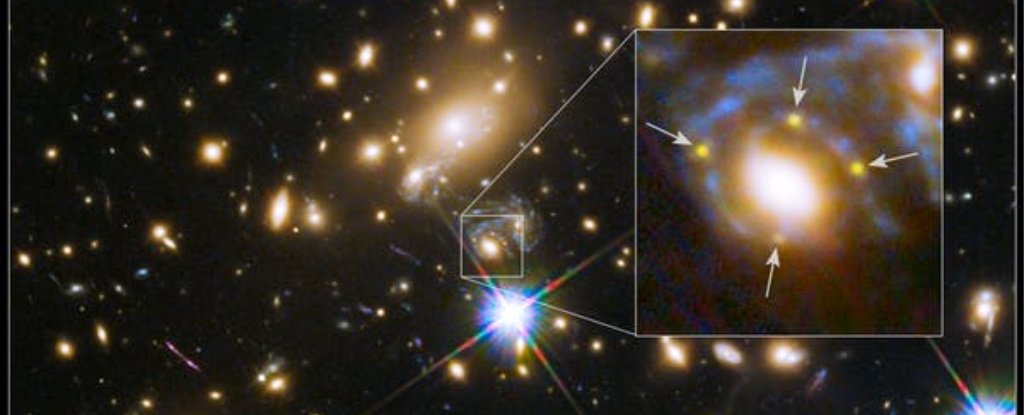

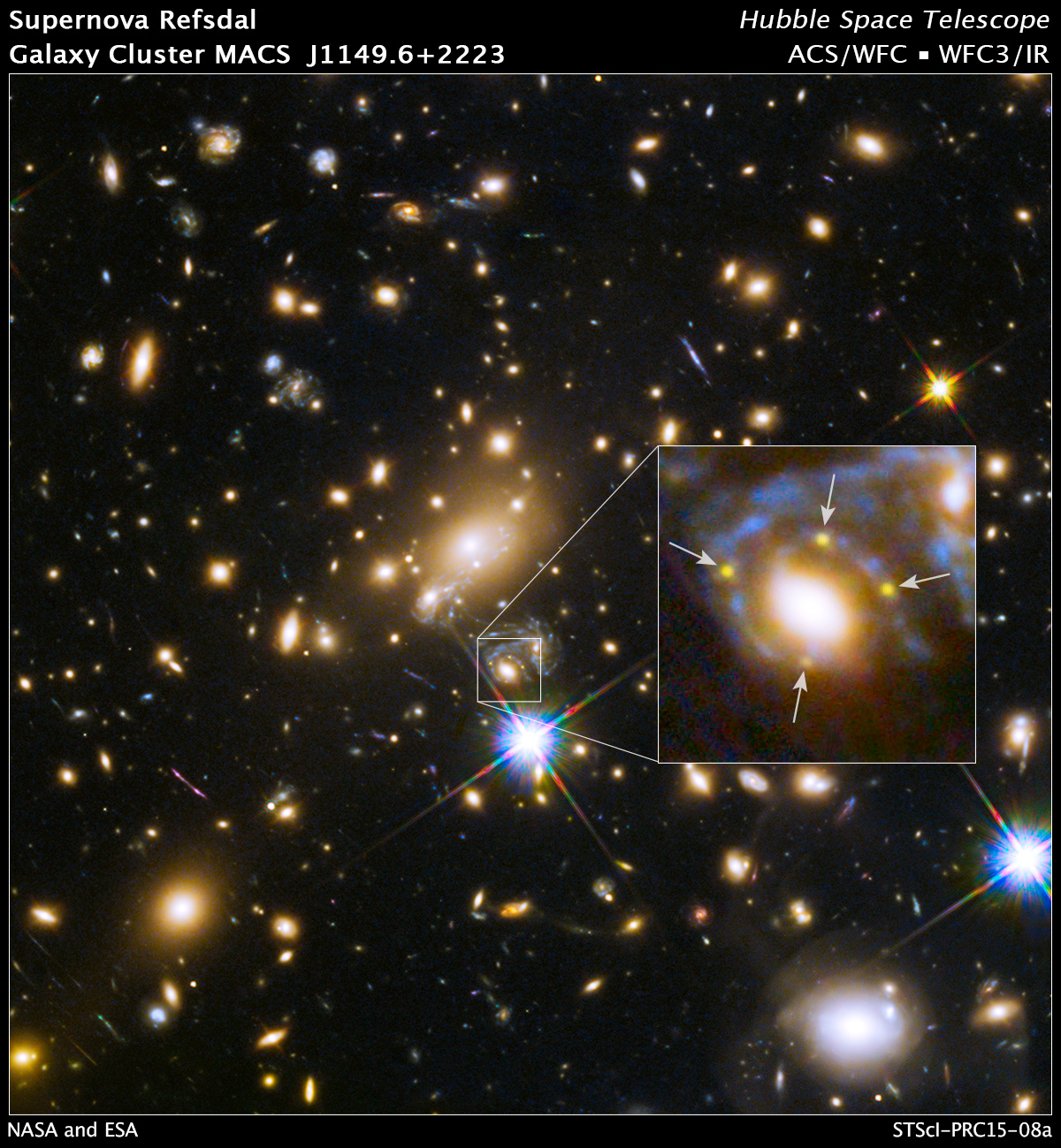

A galaxy (center of the box) divides the light of an exploding supernova into four yellow spots. (NASA / Hubble)


The team studied two galaxies that are lensing the light from two other background galaxies. The distortion is so strong that multiple images of each background galaxy are projected around the foreground deflectors (as in the picture above).

The components of light that make up each of these images will have travelled slightly different distances on their journey to Earth, as the light bends around the foreground deflector. This causes a delay in the arrival time of light across the lensed image.

If the brightness of the background source is relatively constant, we do not notice this delay. But when the background source itself varies in brightness, we can measure the difference in the arrival time of the light. This work does exactly that.

The time delay across the lensed image is related to the mass of the foreground galaxy that deflects the light and to its physical size. So when we combine the measured time delay with the mass of the deflecting galaxy (which we know), we get an accurate measure of its physical size.

Like a penny held at arm's length, we can then compare the apparent size of the galaxy with its physical size to determine the distance, because an object of fixed size appears smaller the further away it is.
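The penny comparison can be sketched with the small-angle approximation: distance equals physical size divided by apparent angular size in radians. This shows only the final geometric step, not the lens modelling the authors carry out, and the numbers in the example are hypothetical.

```python
import math

ARCSECONDS_TO_RADIANS = math.pi / (180 * 3600)


def distance_from_angular_size_mpc(physical_size_kpc, angular_size_arcsec):
    """Small-angle approximation: distance = physical size / angle.
    Size is in kiloparsecs, angle in arcseconds, result in megaparsecs."""
    angle_radians = angular_size_arcsec * ARCSECONDS_TO_RADIANS
    return physical_size_kpc / angle_radians / 1000.0


if __name__ == "__main__":
    # Hypothetical object 1 kpc across that spans 1 arcsecond on the sky.
    print(distance_from_angular_size_mpc(1.0, 1.0))
```

In an expanding universe the quantity obtained this way is what cosmologists call the angular diameter distance.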

The authors present absolute distances of 810 and 1230 megaparsecs for the two deflecting galaxies, with a margin of error of 10 to 20%.

Treating these measurements as absolute distance anchors, the authors then reanalyse the distance calibration of 740 supernovae from a well-established data set used to determine the Hubble constant. The answer they get is a little over 82 kilometers per second per megaparsec.

This is quite high compared with the figures mentioned above. But the key point is that, with only two distance anchors, the uncertainty on this value is still very large. Importantly, however, it is statistically consistent with the value measured from the local universe.

The uncertainty will be reduced by finding, and measuring distances to, other strongly lensed and time-varying galaxies. They are rare, but upcoming projects such as the Large Synoptic Survey Telescope should be able to detect many such systems, raising hopes of a reliable value.

The result provides another piece of the puzzle. But there is more work to be done: it still does not explain why the value derived from the cosmic microwave background is so low. The mystery remains, but hopefully not for too long.

James Geach, Professor of Astrophysics and Royal Society University Research Fellow, University of Hertfordshire.

This article is republished from The Conversation under a Creative Commons license. Read the original article.
