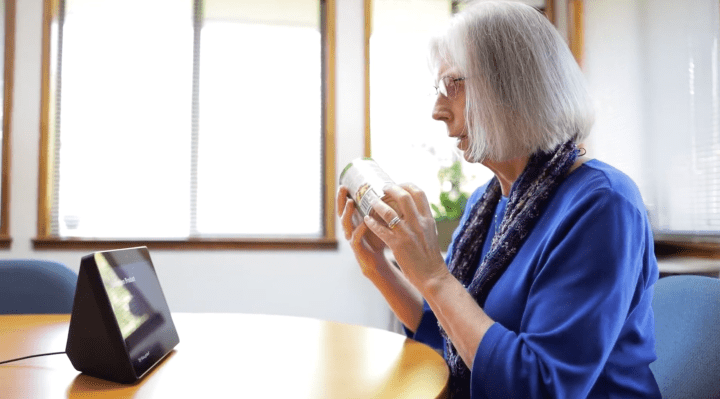

Amazon is introducing a new feature for the Echo Show designed to help blind and other visually impaired customers identify common household pantry items by holding them in front of the device's camera and asking Alexa what they are. The feature uses a combination of computer vision and machine learning techniques to recognize the objects the Echo Show sees.

The Echo Show is the version of the Alexa-powered smart speaker that tends to live in customers' kitchens, because it helps with other cooking tasks, such as setting timers, watching recipe videos, or listening to music or television while cooking.

But for blind users, the Show will now have a new duty: helping them identify household items that are difficult to distinguish by touch, such as cans, food boxes or spices.

To use the feature, customers can simply say things such as "Alexa, what am I holding?" or "Alexa, what's in my hand?" Alexa will also provide verbal and audio cues to help customers place the item in front of the device's camera.

Amazon says the feature was developed in collaboration with blind Amazon employees, including its senior accessibility engineer, Josh Miele, who collected feedback from blind and visually impaired customers as part of the development process. The company also worked with the Vista Center for the Blind in Santa Cruz on preliminary research, product development and testing.

"We heard that product identification could be a challenge and that customers wanted Alexa's help," said Sarah Caplener, Amazon's director of Alexa for Everyone. "Whether a customer is sorting through a grocery bag or trying to determine which item has been left on the counter, we want to simplify those moments by helping to identify those items and providing customers with the information they need in that moment."

Smart home devices and voice assistants such as Alexa have made life easier for people with disabilities by allowing them to adjust thermostats and lighting, lock doors, raise blinds and more. With "Show and Tell," Amazon also hopes to reach the vast market of blind and visually impaired customers. Citing the World Health Organization, Amazon says an estimated 1.3 billion people are visually impaired.

That said, Echo devices are not available worldwide. Even where they are offered in a given country, they may not support the local language. In addition, the feature itself is available only in the U.S. at launch.

Amazon is not the only company making accessibility a selling point for its smart speakers and displays. This year, at the Google I/O developer conference, a series of accessibility projects were featured, including Live Caption, which transcribes audio in real time; Live Relay, which helps deaf people make phone calls; Project Diva, which helps people who do not speak use smart assistants; and Project Euphonia, which helps speech recognition work for people with speech impairments.

Show and Tell is now available to Alexa users in the United States on first and second generation Echo Show devices.
