
According to the most recent data, the world's most-cited researchers are a strangely eclectic group. Nobel laureates and eminent polymaths rub shoulders with less familiar names, such as Sundarapandian Vaidyanathan from Chennai, India. What stands out about Vaidyanathan and hundreds of other researchers is that many of the citations to their work come from their own papers, or from those of their co-authors.

Vaidyanathan, a computer scientist at the Vel Tech R&D Institute of Technology, a privately run institute, is an extreme example: he received 94% of his citations from himself or his co-authors up to 2017, according to a study published in PLoS Biology this month [1]. He is not alone. The data set, which lists about 100,000 researchers, shows that at least 250 scientists have collected more than 50% of their citations from themselves or their co-authors, while the median self-citation rate is 12.7%.

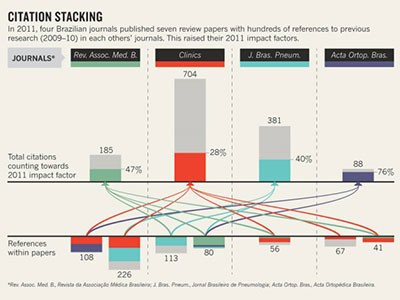

The study could help to identify potential extreme self-promoters, and possibly "citation farms", in which groups of scientists cite each other in droves, the researchers say. "I think self-citation farms are much more commonplace than we think," says John Ioannidis, a physician at Stanford University in California who specializes in meta-science (the study of how science is done) and who led the work. "Those who have more than 25% self-citation are not necessarily engaged in unethical behavior, but further investigation might be needed," he says.

The data are by far the largest collection of self-citation metrics ever published. And they arrive at a time when funding agencies, journals and others are focusing more on the potential problems caused by excessive self-citation. In July, the Committee on Publication Ethics (COPE), a publisher-advisory body based in London, pointed to extreme self-citation as one of the main forms of citation manipulation. This issue is part of broader concerns about over-reliance on citation metrics for making hiring, promotion and research-funding decisions.

"When we tie professional advancement to citation-based metrics and pay them undue attention, we encourage self-citation," says psychologist Sanjay Srivastava of the University of Oregon in Eugene.

Although many scientists agree that excessive self-citation is a problem, there is little consensus on how much is too much or on what to do about it. This is partly because researchers have many legitimate reasons to cite their own work or that of their colleagues. Ioannidis warns that his study should not lead to the vilification of particular researchers for their self-citation rates, not least because these can vary from one discipline to another and from one career stage to the next. "It just offers complete and transparent information. It should not be used for verdicts such as deciding that too high a self-citation rate equals a bad scientist," he says.

Data drive

Ioannidis and his co-authors did not publish their data in order to focus on self-citation. That is just one part of their study, which includes a host of standardized citation-based metrics for the 100,000 or so most-cited researchers of the past two decades across 176 scientific subfields. He compiled the data with Richard Klavans and Kevin Boyack of the analytics firm SciTech Strategies in Albuquerque, New Mexico, and Jeroen Baas, director of analytics at the Amsterdam-based publisher Elsevier; all the data come from Elsevier's proprietary Scopus database. The team hopes that its work will make it possible to identify factors that might be driving citations.

But the most eye-catching part of the data set is the self-citation metrics. It is already possible to see how often an author has cited their own work by looking up their citation record in subscription databases such as Scopus and Web of Science. But without a view across research fields and career stages, it is difficult to put these numbers in context and compare one researcher with another.

Vaidyanathan's record is one of the most extreme, and it has brought some rewards. Last year, the Indian politician Prakash Javadekar, currently the country's environment minister but at the time responsible for higher education, awarded Vaidyanathan a prize of 20,000 rupees (US$280) for being among the country's top researchers by citation metrics. Vaidyanathan did not respond to Nature's request for comment, but he has previously defended his citation record in response to questions about Vel Tech posted on Quora, the online question-and-answer platform. In 2017, he wrote that, because research is an ongoing process, "the following work cannot be done without reference to previous work," and that self-citing was not done with the intention of misleading others.

Two other researchers who cite themselves heavily and have won recognition are Theodore Simos, a mathematician whose website lists affiliations at King Saud University in Riyadh, the Ural Federal University in Yekaterinburg, Russia, and the Democritus University of Thrace in Komotini, Greece; and Claudiu Supuran, a medicinal chemist at the University of Florence, Italy, who also lists an affiliation at King Saud University. Both Simos, who collected about 76% of his citations from himself or his co-authors, and Supuran (62%) were named last year on a list of 6,000 "world-class researchers selected for their outstanding research performance," produced by Clarivate Analytics, an information-services company based in Philadelphia, Pennsylvania, which owns Web of Science. Neither Simos nor Supuran responded to Nature's requests for comment. Clarivate said that it was aware of the problem of unusual self-citation patterns and that the methodology used to calculate its list could change.

What to do about self-citations?

In recent years, researchers have paid increased attention to self-citation. A 2016 preprint, for example, suggested that male academics cite their own papers, on average, 56% more than female academics do [2], although a replication analysis last year suggested that this could be an effect of greater self-citation among productive authors of any gender, who have more prior work to cite [3]. In 2017, a study showed that Italian scientists began to cite themselves more heavily after the introduction in 2010 of a controversial policy requiring academics to meet productivity thresholds to be eligible for promotion [4]. And last year, Indonesia's research ministry, which uses a citation-based formula to allocate funds for research and scholarship, said that some researchers had inflated their scores through unethical practices, including excessive self-citation and groups of academics citing each other. The ministry said that it had stopped funding 15 researchers and planned to exclude self-citations from its formula, although researchers tell Nature that this has not happened yet.

But the idea of publicly listing individuals' self-citation rates, or of evaluating researchers on the basis of metrics corrected for self-citation, is highly contentious. For example, in a discussion paper published last month [5], COPE argued against excluding self-citations from metrics, because this "does not allow a nuanced understanding of when self-citation makes good scientific sense".

In 2017, Justin Flatt, a biologist then at the University of Zurich in Switzerland, called for more clarity about scientists' self-citation records [6]. Flatt, who is now at the University of Helsinki, suggested publishing a self-citation index, or s-index, along the lines of the h-index productivity indicator used by many researchers. An h-index of 20 indicates that a researcher has published 20 articles with at least 20 citations each; likewise, an s-index of 10 would mean that a researcher had published 10 articles that had each received at least 10 self-citations.
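The two definitions can be made concrete with a short sketch. It is only an illustration of the rule as stated in the text, not Flatt's own implementation; the function name `h_index` and the sample counts are hypothetical. The s-index is obtained by feeding the same function per-paper self-citation counts instead of total citation counts.

```python
from typing import Iterable


def h_index(counts: Iterable[int]) -> int:
    """Largest h such that at least h papers have at least h citations each."""
    ranked = sorted(counts, reverse=True)
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank  # the top `rank` papers all have >= rank citations
        else:
            break
    return h


# Hypothetical per-paper counts, invented for illustration only.
total_citations = [25] * 20 + [3] * 5      # 20 well-cited papers, 5 minor ones
self_citations = [12] * 10 + [1, 0]        # self-citations received per paper

researcher_h = h_index(total_citations)    # h-index from all citations
researcher_s = h_index(self_citations)     # s-index: same rule, self-citations only
```

Reporting both numbers side by side is the point of the proposal: the s-index gives context for the h-index rather than replacing it.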

Flatt, who has received a grant to collect data for the s-index, agrees with Ioannidis that this type of work should not focus on establishing thresholds for acceptable scores, or on naming and shaming heavy self-citers. "There has never been any question of criminalizing self-citations," he says. But as long as academics continue to promote themselves using the h-index, it is wise to include the s-index for context, he argues.

The context is important

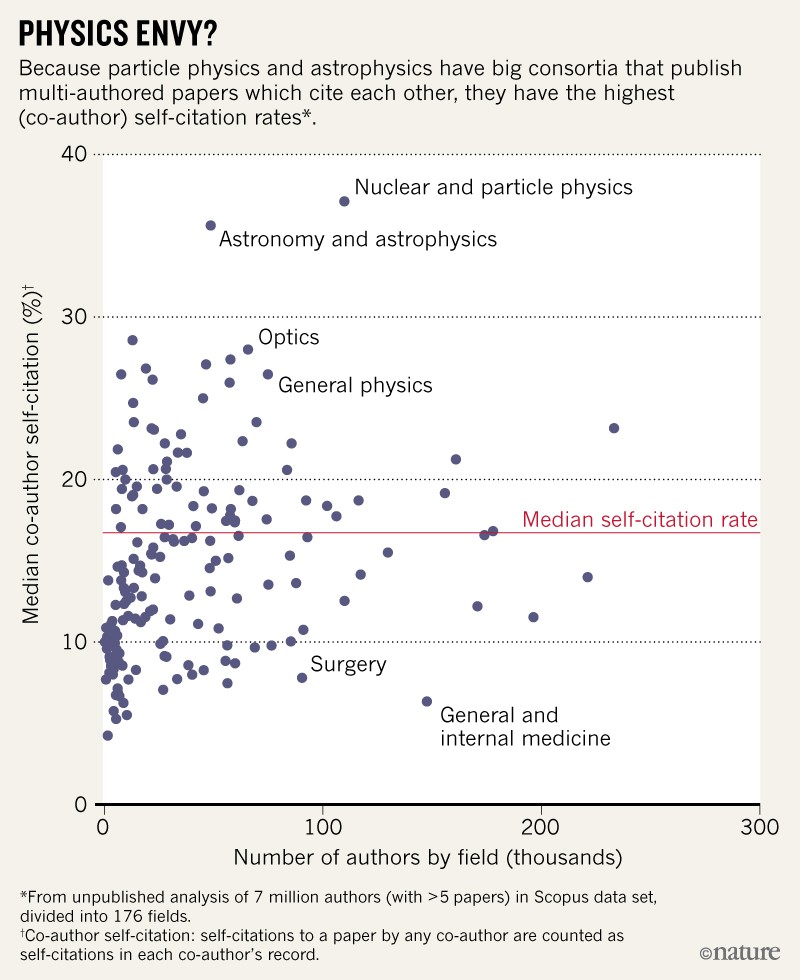

The Ioannidis study is notable for its broad definition of self-citation, which includes citations by co-authors. This is intended to catch possible instances of citation farming; however, it inflates self-citation scores, says Marco Seeber, a sociologist at Ghent University in Belgium. Particle physics and astronomy, for example, often have papers with hundreds or even thousands of co-authors, which raises the average self-citation rate across the field.
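A toy calculation shows why the broad definition pushes rates up. The sketch below is an assumption-laden illustration, not the study's actual method: it treats a citation as a narrow self-citation when the researcher is an author of the citing paper, and as a broad self-citation when the citing paper shares any author with the cited paper. The function name and the sample author sets are hypothetical.

```python
from typing import List, Set, Tuple

# Each citation is a pair: (authors of the cited paper, authors of the citing paper).
Citation = Tuple[Set[str], Set[str]]


def self_citation_rates(author: str, citations: List[Citation]) -> Tuple[float, float]:
    """Return (narrow_rate, broad_rate) for citations to `author`'s papers.

    narrow: the researcher is an author of the citing paper.
    broad:  the citing paper shares at least one author with the cited paper
            (assumed reading of "self or co-authors"; not the study's exact rule).
    """
    total = len(citations)
    if total == 0:
        return 0.0, 0.0
    narrow = sum(1 for _, citing in citations if author in citing)
    broad = sum(1 for cited, citing in citations if cited & citing)
    return narrow / total, broad / total


# Hypothetical data: four citations to papers by researcher "A".
sample = [
    ({"A", "B"}, {"A"}),        # A cites own paper: narrow and broad
    ({"A", "B"}, {"B", "C"}),   # co-author B cites it: broad only
    ({"A"}, {"D"}),             # independent citation
    ({"A", "B"}, {"E"}),        # independent citation
]
narrow_rate, broad_rate = self_citation_rates("A", sample)
```

With author lists running to hundreds of names, almost any citing paper in the same collaboration will share an author with the cited one, which is the inflation Seeber describes.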

Ioannidis says that it is possible to account for some systematic differences by comparing researchers with the average for their country, career stage and discipline. But more generally, he says, the list draws attention to cases that merit closer examination. And there is another way to spot problems: by examining the ratio of citations received to the number of papers in which those citations appear. Simos, for example, has received 10,458 citations from just 1,029 papers, which means that, on average, he receives more than 10 citations in each paper that mentions his work. Ioannidis says that this metric, when combined with the self-citation metric, is a good flag for potentially excessive self-promotion.
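The ratio described here is simple arithmetic, sketched below using the two figures quoted for Simos. The function name is hypothetical, and the sketch does not set any threshold for what counts as excessive, because the text does not give one.

```python
def citations_per_citing_paper(total_citations: int, citing_papers: int) -> float:
    """Average number of citations received per paper that cites the researcher."""
    if citing_papers <= 0:
        raise ValueError("citing_papers must be positive")
    return total_citations / citing_papers


# Figures quoted in the text for Simos: 10,458 citations from 1,029 citing papers.
ratio = citations_per_citing_paper(10_458, 1_029)
print(f"{ratio:.1f} citations per citing paper")
```

A value this far above 1 means that the typical citing paper references many of the researcher's works at once, which is why the ratio is read together with the self-citation rate rather than on its own.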

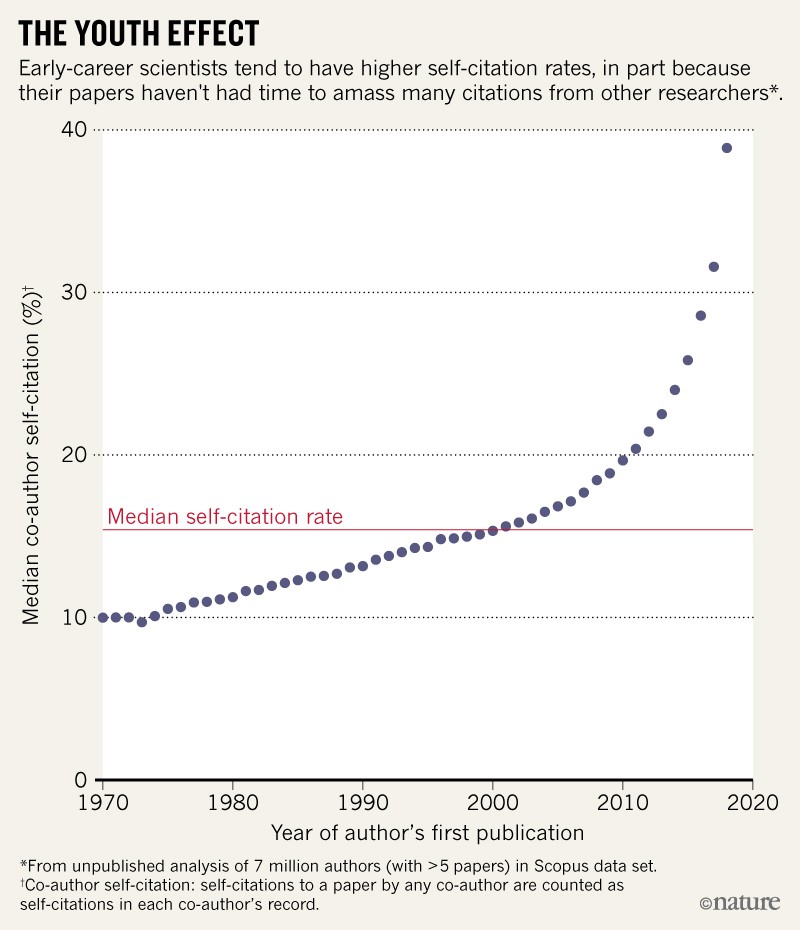

In unpublished work, Elsevier's Baas says that he has applied a similar analysis to a much larger data set of 7 million scientists: all authors listed in Scopus who have published more than 5 papers. In this data set, Baas says, the median self-citation rate is 15.5%, but as many as 7% of authors have a self-citation rate above 40%. This proportion is much higher than among the most-cited scientists, because many of the 7 million researchers have few citations overall or are early in their careers. Early-career scientists tend to have higher self-citation rates because their papers have not had time to collect many citations from others (see "The Youth Effect").

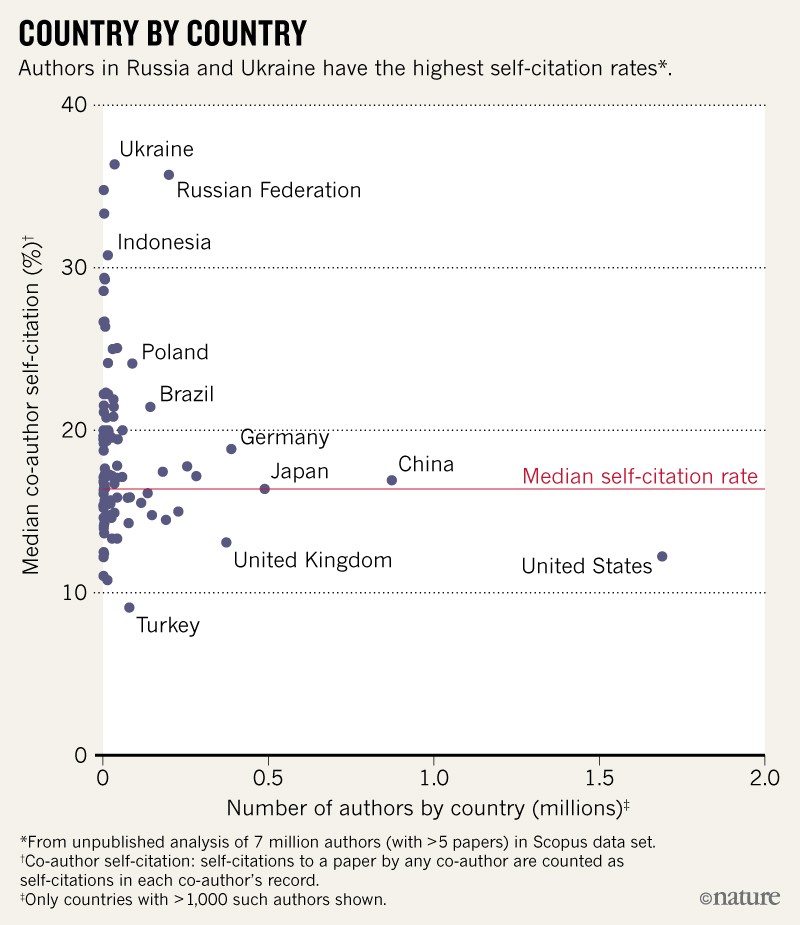

According to Baas's data, Russia and Ukraine stand out with high median self-citation rates (see "Country by Country"). His analysis also shows that some fields stand out, such as nuclear and particle physics, and astronomy and astrophysics, because of their many multi-author papers (see "Want physics?"). Baas says that he does not plan to publish his data set.

Not good for science?

Although the PLoS Biology study identifies extreme self-citers and suggests ways to look for others, some researchers say that they are not convinced that the self-citation data set will be helpful, in part because this measure varies so much with research discipline and career stage. "Self-citation is much more complex than it appears," says Vincent Larivière, an information scientist at the University of Montreal in Canada.

Srivastava adds that the best way to tackle excessive self-citation, and other gaming of citation-based indicators, is not necessarily to publish ever more detailed standardized tables and composite metrics for comparing researchers with each other. These could have their own flaws, he says, and such an approach could draw scientists even further into a world of evaluation by individual-level metrics, the very problem that encourages gaming in the first place.

"We should ask editors and reviewers to look out for inappropriate self-citations," says Srivastava. "And maybe some of these rough measures have the benefit of indicating where to look more closely. But ultimately, the solution must be to realign professional evaluation with the judgement of peers, not to double down on metrics." Cassidy Sugimoto, an information scientist at Indiana University Bloomington, agrees that more metrics might not be the solution: "It's not good for science."

Ioannidis, however, says that his work is necessary. "In any case, people are already relying heavily on individual metrics. The question is how to ensure that the information is as accurate, and compiled as carefully and systematically, as possible," he says. "Citation metrics cannot and should not disappear. We should make the most of them, taking full account of their many limitations."
