
TensorFlow, optimized for inference in edge devices.

That's a bit of a word salad to unpack, but here's the end result: Google is looking to own a complete suite of customized hardware for machine learning tasks such as speech recognition, all the way from the device through the server. Google will have the cloud TPU (now rolling out in its third version) to handle training models for various machine learning-driven tasks, and will then run inference from those models on a specialized chip that runs a lighter version of TensorFlow that doesn't consume as much power.

Google is exploiting an opportunity to split machine learning training and inference across two different sets of hardware, and to dramatically reduce the footprint required in the device that actually captures the data. That would mean lower power consumption and, perhaps more importantly, a dramatically smaller surface area for the chip itself.
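To make the training/inference split concrete, here is a minimal sketch in plain Python. It assumes a toy, hypothetical one-variable linear model; the function names `train_on_server` and `infer_on_edge` are illustrative only and are not part of TensorFlow or any Google API. The point is the asymmetry: training loops over the whole dataset many times computing gradients, while inference is a single cheap forward pass with frozen parameters, which is the part a small edge chip would handle.

```python
# Minimal sketch of splitting work between a "server" (training) and an
# "edge device" (inference only). The model is a hypothetical 1-D linear
# model y = w * x + b; nothing here is Google's or TensorFlow's API.

def train_on_server(xs, ys, lr=0.05, epochs=2000):
    """Fit w and b with plain gradient descent on mean squared error.

    This is the expensive, repeated part of the workflow: every epoch
    touches the whole dataset and computes gradients.
    """
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of MSE = (1/n) * sum((w*x + b - y)^2)
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def infer_on_edge(params, x):
    """Run only the forward pass with frozen parameters: no gradients,
    no dataset, just one multiply and one add per input."""
    w, b = params
    return w * x + b


if __name__ == "__main__":
    xs = [0.0, 1.0, 2.0, 3.0, 4.0]
    ys = [2 * x + 1 for x in xs]        # data generated by y = 2x + 1
    params = train_on_server(xs, ys)    # heavy step, done once
    print(infer_on_edge(params, 10.0))  # cheap step, done per input
```

Only the returned `(w, b)` pair needs to travel to the device; the training data and the gradient code stay on the server.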

Google is also rolling out a new set of services to compile TensorFlow (Google's machine learning development framework) into a lighter-weight version that can run on edge devices. That, again, reduces the need to call out to a server, which could matter for any number of reasons, from safety (in autonomous vehicles) to a better user experience (voice recognition). As competition heats up in the space, both from the larger companies and from the emerging class of startups, nailing these use cases is going to be really important for the larger companies. That's especially true for Google, which also wants to own the development framework in a world where alternatives such as Caffe2 and PyTorch exist.

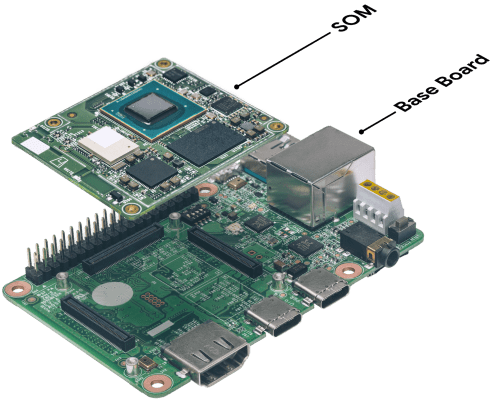

Google will be releasing the chip on a kind of modular board not so dissimilar to the Raspberry Pi, which will get it into the hands of developers so they can build unique use cases. But more importantly, it will help court developers who are already working with TensorFlow as their primary machine learning framework. If that works, those developers would be more likely to stay within Google's cloud ecosystem at both the hardware (TPU) and framework (TensorFlow) level. While Amazon owns most of the stack for cloud computing (with Azure being the other major player), Google appears to be going after the AI stack, and not just offering on-demand GPUs as a stopgap to keep developers operating within its ecosystem.

Thanks to the proliferation of GPUs, machine learning has become more prevalent across a range of use cases, which require more than just the horsepower to train a model to identify what a cat looks like. They also require the ability to run that trained model quickly on new data, such as recognizing that the animal in a fresh image is a cat. GPUs have been great for both tasks, but it is clear that better-equipped hardware is needed for purposes such as recognition on cameras, where power consumption and chip size are limiting factors. The edge-specialized TPU is an ASIC, a breed of chip architecture built for one specific task (the kind of chip companies like Bitmain make). These chips are made to do one thing very well, which has opened up various niches, such as cryptocurrency mining, to specialized chips optimized for those calculations. These kinds of edge-focused chips tend to do a lot of low-precision calculations very fast, making the whole process of juggling runs between memory and the actual core much less complicated.
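As an illustration of what "low-precision calculations" can look like, here is a minimal sketch in plain Python of 8-bit quantized arithmetic. It assumes symmetric per-tensor quantization, one common scheme among several; it is not a description of how the Edge TPU itself represents numbers, and the helper names (`quantize`, `int_dot`, `quantized_dot`) are hypothetical. Floats are mapped to small integers with a single scale factor, the dot product runs entirely in integer math, and one float multiply at the end rescales the result.

```python
# Minimal sketch of low-precision (8-bit) arithmetic of the kind
# edge-focused chips favor. Uses symmetric per-tensor quantization,
# one common scheme among several; it is NOT a description of how the
# Edge TPU itself quantizes values.

def quantize(values, num_bits=8):
    """Map floats to signed integers in [-(2^(b-1) - 1), 2^(b-1) - 1]
    using a single scale factor for the whole list."""
    qmax = 2 ** (num_bits - 1) - 1                 # 127 for 8 bits
    max_abs = max(abs(v) for v in values) or 1.0   # avoid dividing by zero
    scale = max_abs / qmax
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return q, scale


def int_dot(qa, qb):
    """Integer-only dot product. Real hardware would accumulate in a
    wider register (e.g. 32-bit) so sums of 8-bit products don't overflow;
    Python ints never overflow, so no special handling is needed here."""
    return sum(a * b for a, b in zip(qa, qb))


def quantized_dot(a, b):
    """Approximate the float dot product of a and b using 8-bit integers."""
    qa, sa = quantize(a)
    qb, sb = quantize(b)
    # One float multiply at the end rescales the integer result.
    return int_dot(qa, qb) * sa * sb


if __name__ == "__main__":
    weights = [0.12, -0.53, 0.88, 0.04]
    inputs = [1.5, 0.25, -0.75, 2.0]
    exact = sum(w * x for w, x in zip(weights, inputs))
    approx = quantized_dot(weights, inputs)
    print(exact, approx)
```

The trade-off is a small loss of accuracy (here on the order of one percent) in exchange for integer multiplies on 8-bit values, which need far less silicon and memory bandwidth than 32-bit floating point.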

While Google's entry in this arena has long been a whisper in the Valley, this is a stake in the ground that the company wants to own everything from the hardware to the end-user experience, passing through the development layer and others along the way. It may not necessarily alter the calculus of the ecosystem: even though the chip comes on a development board that creates a playground for developers, Google still has to get the hardware designed into other products, not just its own, if it wants to rule the ecosystem. That's easier said than done, even for a company of Google's size, but the move could have significant ramifications.
