
To catch cancer earlier, we need to predict who will get it in the future. Artificial intelligence (AI) tools have strengthened the complex task of forecasting risk, but the adoption of AI in medicine has been limited by poor performance on new patient populations and neglect of racial minorities.

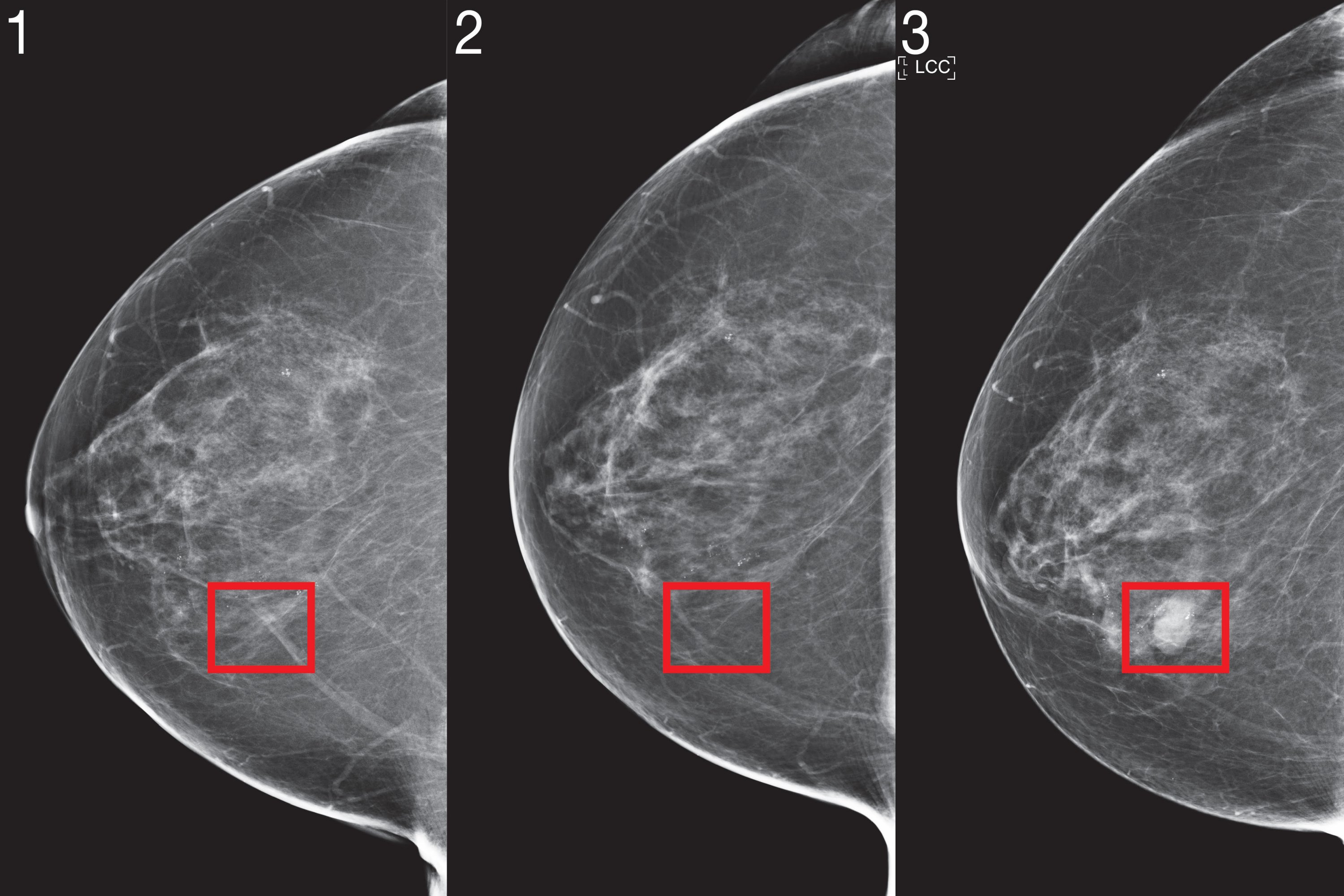

Two years ago, a team of scientists from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) and Jameel Clinic (J-Clinic) demonstrated a deep learning system that predicts breast cancer risk using only a patient’s mammogram. The model showed great promise and even improved inclusiveness: it was equally accurate for white and black women, which is especially important given that black women are 43% more likely to die from breast cancer.

But to integrate image-based risk models into clinical care and make them widely available, the researchers say the models required both algorithmic improvements and large-scale validation at multiple hospitals to prove their robustness.

To this end, they designed the new “Mirai” algorithm specifically to capture the unique requirements of risk modeling. Mirai jointly models a patient’s risk across multiple future time points and can optionally benefit from clinical risk factors such as age or family history, if they are available. The algorithm is also designed to produce consistent predictions across minor variations in clinical environments, such as the choice of mammography machine.

The team trained Mirai on the same dataset of more than 200,000 Massachusetts General Hospital (MGH) exams used in their previous work, and validated it on test sets from MGH, the Karolinska Institute in Sweden, and Chang Gung Memorial Hospital in Taiwan. Mirai is now installed at MGH, and the team’s collaborators are actively working to integrate the model into care.

Mirai was much more accurate than previous methods at predicting cancer risk and identifying high-risk groups in all three datasets. Comparing high-risk cohorts on the MGH test set, the team found that their model identified nearly twice as many future cancer diagnoses as the current clinical standard, the Tyrer-Cuzick model. Mirai was also similarly accurate across patients of different races, age groups, and breast density categories in the MGH test set, and across different cancer subtypes in the Karolinska test set.

“Improved breast cancer risk models enable targeted screening strategies that achieve earlier detection and less screening harm than existing guidelines,” says Adam Yala, a CSAIL PhD student and lead author of a paper on Mirai published this week in Science Translational Medicine. “Our goal is to make these advances part of the standard of care. We are partnering with clinicians from Novant Health in North Carolina, Emory in Georgia, Maccabi in Israel, TecSalud in Mexico, Apollo in India, and Barretos in Brazil to further validate the model on diverse populations and study how best to implement it clinically.”

How it works

Despite the widespread adoption of breast cancer screening, the researchers say the practice is fraught with controversy: more aggressive screening strategies aim to maximize the benefits of early detection, while less frequent screening aims to reduce false positives, anxiety, and costs for those who will never develop breast cancer.

Current clinical guidelines use risk models to determine which patients should be recommended for supplemental imaging and MRI. Some guidelines use risk models based on age alone to determine if, and how often, a woman should get screened; others combine multiple factors related to age, hormones, genetics, and breast density to determine further testing. Despite decades of effort, the accuracy of risk models used in clinical practice remains modest.

Recently, deep learning risk models based on mammography have shown promising performance. To bring this technology to the clinic, the team identified three innovations they consider essential for risk modeling: jointly modeling time, the optional use of non-image risk factors, and methods to ensure consistent performance across clinical settings.

1. Jointly modeling time

Inherent to risk modeling is learning from patients with different amounts of follow-up and assessing risk at different time points: this can determine how often they get screened, whether they should have supplemental imaging, or even whether to consider preventive treatments.
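To make “different amounts of follow-up” concrete, each exam can be turned into a yes/no label per year plus a mask that marks which years’ outcomes are actually known. The sketch below is illustrative only: the function name `yearly_targets`, the five-year horizon, and the masking convention are assumptions, not details given in the article.

```python
from typing import List, Optional, Tuple


def yearly_targets(
    years_followed: int, cancer_year: Optional[int], horizon: int = 5
) -> Tuple[List[int], List[int]]:
    """Hypothetical helper: per-year labels and a known/unknown mask.

    labels[t-1] = 1 if cancer was diagnosed within t years.
    mask[t-1]   = 1 if the t-year outcome is actually observed.
    """
    labels: List[int] = []
    mask: List[int] = []
    for t in range(1, horizon + 1):
        if cancer_year is not None and cancer_year <= t:
            labels.append(1)  # diagnosed within t years: known positive
            mask.append(1)
        elif years_followed >= t:
            labels.append(0)  # followed past year t without cancer: known negative
            mask.append(1)
        else:
            labels.append(0)  # follow-up ended before year t: outcome unknown
            mask.append(0)
    return labels, mask
```

A cancer-free patient followed for two years yields labels `[0, 0, 0, 0, 0]` with mask `[1, 1, 0, 0, 0]`: the exam still teaches the model about years 1 and 2, while a masked loss simply ignores years 3 to 5.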

Although it is possible to train separate models to assess risk at each time point, this approach can produce risk assessments that don’t make sense, such as predicting that a patient has a higher risk of developing cancer within two years than within five years. To address this, the team designed their model to predict risk at all time points simultaneously, using a tool called an “additive risk layer.”

The additive risk layer works as follows: the network predicts a patient’s risk at one time point, such as five years, as an extension of their risk at the previous time point, such as four years. In doing so, the model can learn from data with varying amounts of follow-up and then produce self-consistent risk assessments.
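A minimal sketch of the additive idea, assuming the network outputs a base score plus one raw increment per year, and assuming each increment is clamped to be non-negative before being added (the clamp is this sketch’s way of guaranteeing self-consistency; the names and numbers are hypothetical):

```python
import math
from typing import List


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def additive_risk(base_logit: float, raw_increments: List[float]) -> List[float]:
    """Cumulative risk for years 1..N from a base score plus yearly increments.

    Each year's logit is the previous year's logit plus a non-negative
    increment, so predicted risk can never drop as the horizon gets longer.
    """
    risks: List[float] = []
    logit = base_logit
    for inc in raw_increments:
        logit += max(0.0, inc)  # clamp (ReLU) so each step can only add risk
        risks.append(sigmoid(logit))
    return risks


# Hypothetical network outputs for a five-year horizon.
risks = additive_risk(-3.0, [0.2, -1.0, 0.5, 0.1, 0.3])
```

The raw year-2 output here is negative, so it is clamped to zero and the two-year risk equals the one-year risk instead of falling below it, which is exactly the inconsistency that separately trained per-year models can produce.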

2. Optional non-image risk factors

While this method primarily focuses on mammograms, the team also wanted to use non-image risk factors such as age and hormonal factors if they were available, without requiring them at test time. One approach would be to add these factors as an input to the model alongside the image, but this design would prevent the majority of hospitals (such as Karolinska and CGMH), which don’t have this infrastructure, from using the model.

So that Mirai can benefit from risk factors without requiring them, the network learns to predict this information during training, and if the information isn’t available, it can use its own predicted version. Mammograms are a rich source of health information, and many traditional risk factors such as age and menopausal status can be easily predicted from the images. Because of this design, the same model can be used by any clinic in the world, and clinics that do have the additional information can use it.
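The fallback logic described above can be sketched in a few lines. This covers only the bookkeeping, not Mirai’s network: the function names, the factor names, and the choice of squared error as the training signal for the predicted factors are all assumptions for illustration.

```python
from typing import Dict, Optional


def fill_risk_factors(
    observed: Dict[str, Optional[float]], predicted: Dict[str, float]
) -> Dict[str, float]:
    """Use a recorded risk factor when present, else the image-based prediction."""
    filled: Dict[str, float] = {}
    for name, guess in predicted.items():
        value = observed.get(name)
        filled[name] = value if value is not None else guess
    return filled


def risk_factor_loss(
    observed: Dict[str, Optional[float]], predicted: Dict[str, float]
) -> float:
    """Training signal for the predictor: squared error on recorded factors only."""
    return sum(
        (predicted[name] - value) ** 2
        for name, value in observed.items()
        if value is not None and name in predicted  # missing factors contribute nothing
    )
```

For example, with a recorded age of 52 and no recorded menopausal status, `fill_risk_factors` keeps the real age and substitutes the predicted status, so a hospital without any risk-factor records still gets a complete input.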

3. Consistent performance in clinical environments

To incorporate deep learning risk models into clinical guidelines, the models must perform consistently across diverse clinical environments, and their predictions cannot be affected by minor variations such as the machine on which the mammogram was taken. Even at a single hospital, the scientists found that standard training did not produce consistent predictions before and after a change in mammography machines, because the algorithm could learn to rely on different cues specific to each environment. To de-bias the model, the team used an adversarial scheme in which the model specifically learns mammogram representations that are invariant to the source clinical environment, so that it produces consistent predictions.
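The article does not give the training objective, but adversarial schemes of this kind are commonly written as a min-max game. In the sketch below, which is an assumed standard formulation rather than necessarily Mirai’s exact loss, E is the image encoder, R the risk head, D a discriminator that tries to identify the mammography device m from the encoder’s representation, y the cancer outcome, and λ > 0 a weighting hyperparameter:

$$
\min_{E,\,R}\;\max_{D}\;\; \mathbb{E}_{(x,\,y,\,m)}\Big[\,\ell_{\text{risk}}\big(R(E(x)),\,y\big)\;-\;\lambda\,\ell_{\text{device}}\big(D(E(x)),\,m\big)\Big]
$$

The discriminator maximizes the expression, which means minimizing its own device-classification loss. The encoder minimizes it, which means predicting risk well while making the device hard to identify. When the discriminator can do no better than chance, the representation carries little information about which machine produced the image.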

To further test these updates across diverse clinical settings, the scientists evaluated Mirai on new test sets from Karolinska in Sweden and Chang Gung Memorial Hospital in Taiwan, and found that it achieved consistent performance. The team also analyzed the model’s performance across races, ages, and breast density categories in the MGH test set, and across cancer subtypes in the Karolinska dataset, and found that it performed similarly across all subgroups.
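A subgroup analysis of this kind amounts to computing the same metric separately within each group. The sketch below uses ROC AUC at a single horizon, computed as the probability that a randomly chosen patient who developed cancer is scored above one who did not; the metric choice, function names, and group labels are assumptions, and the paper’s evaluation may use different metrics.

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """ROC AUC: chance a random positive is scored above a random negative (ties count half)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def auc_by_group(
    scores: Sequence[float], labels: Sequence[int], groups: Sequence[str]
) -> Dict[str, float]:
    """Compute AUC separately for each subgroup that has both outcomes."""
    buckets: Dict[str, Tuple[List[float], List[int]]] = defaultdict(lambda: ([], []))
    for s, y, g in zip(scores, labels, groups):
        buckets[g][0].append(s)
        buckets[g][1].append(y)
    return {
        g: auc(s, y)
        for g, (s, y) in buckets.items()
        if 0 < sum(y) < len(y)  # AUC is undefined without both positives and negatives
    }
```

“Similar performance across subgroups” then means the per-group values are close to one another; in practice each value would also need a confidence interval, since small subgroups give noisy estimates.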

“African-American women continue to present with breast cancer at younger ages, and often at later stages,” says Salewai Oseni, a breast surgeon at Massachusetts General Hospital who was not involved in the work. “This, coupled with the higher incidence of triple-negative breast cancer in this group, has resulted in increased breast cancer mortality. This study demonstrates the development of a risk model whose predictions have notable accuracy across race. The opportunity for its clinical use is high.”

Here’s how Mirai works:

1. The mammogram image is put through something called an “image encoder.”

2. Each image representation, along with the view it came from, is aggregated with the representations of the other views to obtain a representation of the entire mammogram.

3. From the mammogram, the model predicts the patient’s traditional risk factors used in the Tyrer-Cuzick model (age, weight, hormonal factors). If the actual values are available, they are used; if not, the predicted values are used.

4. With this information, the additive risk layer predicts a patient’s risk for each year over the next five years.
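Steps 1 and 2 can be sketched with toy components to show why the view matters during aggregation. Here the “encoder” is just three intensity statistics, and each view is tagged by adding a fixed per-view vector before averaging. The view names, the vectors, and mean pooling are all hypothetical stand-ins; Mirai’s actual encoder and aggregator are learned deep networks.

```python
from typing import Dict, List

VIEWS = ("L-CC", "L-MLO", "R-CC", "R-MLO")  # the four standard screening views

# Hypothetical per-view tags; a real model would learn these vectors.
VIEW_EMBEDDINGS: Dict[str, List[float]] = {
    "L-CC": [0.1, 0.0, 0.0],
    "L-MLO": [0.0, 0.1, 0.0],
    "R-CC": [0.0, 0.0, 0.1],
    "R-MLO": [0.1, 0.1, 0.1],
}


def encode_image(pixels: List[float]) -> List[float]:
    # Step 1 (toy stand-in for a deep image encoder): mean, max, min intensity.
    return [sum(pixels) / len(pixels), max(pixels), min(pixels)]


def encode_exam(images: Dict[str, List[float]]) -> List[float]:
    # Step 2: tag each view's representation with its view vector, then average
    # across views to get one representation for the whole exam.
    tagged = [
        [a + b for a, b in zip(encode_image(images[v]), VIEW_EMBEDDINGS[v])]
        for v in VIEWS
    ]
    return [sum(col) / len(tagged) for col in zip(*tagged)]


exam = encode_exam({v: [0.0, 1.0] for v in VIEWS})
```

Without the view tags, swapping the left and right images would give an identical exam representation; tagging lets the aggregator distinguish, for example, a finding in one breast from the same finding in the other.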

Improving Mirai

While the current model does not look at any of the patient’s previous imaging results, imaging changes over time hold a wealth of information. Going forward, the team aims to create methods that can effectively utilize a patient’s full imaging history.

Likewise, the team notes that the model could be further improved by using “tomosynthesis,” an X-ray technique for screening asymptomatic patients. Beyond improving accuracy, more research is needed to determine how to adapt image-based risk models to different mammography machines with limited data.

“We know that MRI can detect cancers earlier than mammography, and that earlier detection improves patient outcomes,” says Yala. “But for patients at low risk of cancer, the risk of false positives may outweigh the benefits. With improved risk models, we can design more nuanced risk-based screening guidelines that offer more sensitive screening, like MRI, to patients who will develop cancer, to achieve better outcomes while reducing unnecessary screening and over-treatment for the rest.”

“We are both excited and humbled to ask whether this AI system will work for African-American populations,” says Judy Gichoya, MD, MS, an assistant professor of interventional radiology and computer science at Emory University, who was not involved in the work. “We are extensively studying this question, and how to detect failure.”

Yala wrote the paper on Mirai alongside MIT research specialist Peter G. Mikhael, Leslie Lamb of MGH, Kevin Hughes of MGH, senior author and Harvard Medical School professor Constance Lehman of MGH, and senior author and MIT professor Regina Barzilay.

The work was supported by grants from Susan G. Komen, the Breast Cancer Research Foundation, Quanta Computing, and the MIT Jameel Clinic. It was also supported by a Chang Gung Medical Foundation grant and a Stockholm Läns Landsting HMT grant.
