A Twitter image-cropping algorithm that went viral last year, when users discovered it favored white people over Black people, was also coded with an implicit bias against a number of other groups, researchers found.

The researchers examined how the algorithm, which automatically cropped photos to focus on people's faces, treated a variety of different people. They found that Muslims, people with disabilities and older people faced similar discrimination. The artificial intelligence had learned to ignore people who had white or gray hair, who wore headscarves for religious or other reasons, or who used wheelchairs, the researchers said.

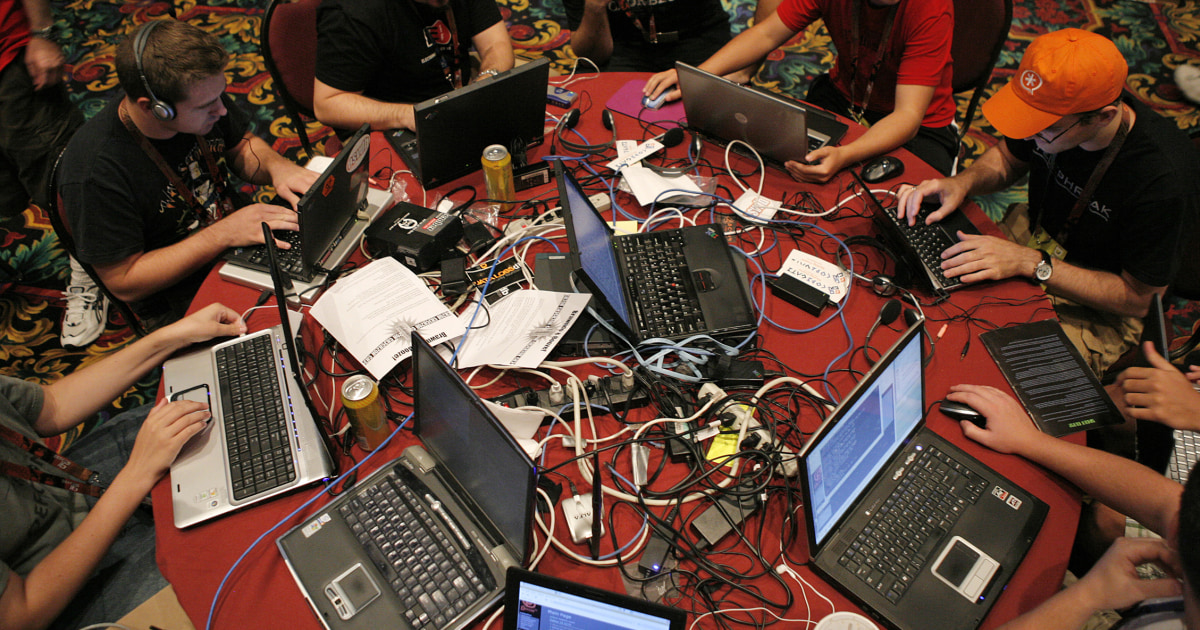

The results were part of a one-of-a-kind contest hosted by Twitter this weekend at the Def Con hacker conference in Las Vegas. The company called on researchers to find new ways to show that its image-cropping algorithm had been inadvertently coded to be biased against particular groups of people. Twitter awarded cash prizes, including $3,500 to the winner and smaller amounts to the finalists.

The contest was an unusual step in what has become an important area of research: finding the ways in which automated systems trained on existing data have absorbed society's existing prejudices.

The image-cropping algorithm, which Twitter has since largely taken down, went viral last September. Users noticed that when they tweeted photos that included both a white person and a Black person, it almost always highlighted the white person. With photos that included both former President Barack Obama and Sen. Mitch McConnell (R-Ky.), for example, Twitter would consistently crop the photo to show only McConnell. The company apologized at the time and took the feature offline.

But this unintentional racism was not unusual, said Parham Aarabi, a professor at the University of Toronto and director of its Applied AI Group, which studies and consults on bias in artificial intelligence. Programs that learn from user behavior almost invariably introduce some sort of unintended bias, he said.

"Almost every major AI system that we've tested for big tech companies, we find significant biases," he said. "Biased AI is one of those things that no one has really tested, and when you do, just as Twitter discovered, you will find that there are major biases across the board."

While the photo-cropping incident was embarrassing for Twitter, it brought to light a broad problem across the tech industry. When companies train artificial intelligence to learn from their users' behavior (for example, by observing which kinds of photos most users are likely to click on), AI systems can internalize biases that no one would ever intentionally write into a program.

The submission from Aarabi's team, which took second place in Twitter's contest, revealed that the algorithm was severely biased against people with white or gray hair. The more a person's hair was artificially lightened, the less likely the algorithm was to choose them.

"The most common effect is that it eliminates older people," Aarabi said. "The older population is largely marginalized by the algorithm."

Competitors found a plethora of ways in which the cropping algorithm could be biased. Aarabi's team also found that in group photos where one person appeared lower in the frame because they were in a wheelchair, Twitter was likely to crop the wheelchair user out of the photo.

Another submission revealed that Twitter was more likely to ignore people who wore head coverings, which effectively cut out people who wear them for religious reasons, such as a Muslim hijab.

The winning entry revealed that the more a person's face was photoshopped to appear thinner, younger or softer, the more likely Twitter's algorithm was to highlight it.

Rumman Chowdhury, director of Twitter's machine learning ethics team, which organized the competition, said she hoped more tech companies would seek outside help in identifying algorithmic biases.

"Identifying all the ways an algorithm can go wrong once it is released to the public is really a daunting task for one team, and frankly probably not doable," Chowdhury said.

"We want to set a precedent at Twitter and in the industry for the proactive and collective identification of algorithmic harms," she said.
