
As always, the camera system is one of the most important features of the new iPhones. Apple's senior vice president of product marketing, Phil Schiller, played the same role he does at every iPhone announcement, breaking down the camera technology and explaining the improvements. This year he went so far as to declare that the iPhone XS will bring about "a new era of photography".

But the days when Apple held a big lead over every Android manufacturer are long gone. Google's innovative approach to computational photography with the Pixel line means those are now the phones to beat in terms of pure image quality, while competitors like Samsung's Galaxy S9 Plus and Huawei's P20 Pro have strengths of their own. The iPhone 8 and X have good cameras, to be sure, but it's hard to make the case that they're the best. Can the iPhone XS catch up?

The biggest hardware upgrade this year is a new, larger 12-megapixel sensor. Last year, Apple said the sensor in the 8 and X was "bigger" than the one in the 7, but teardowns revealed that this was not really true: the field of view and the focal length of the lens had not changed. This time, however, Apple is citing an increase in pixel size, which should make a real difference.

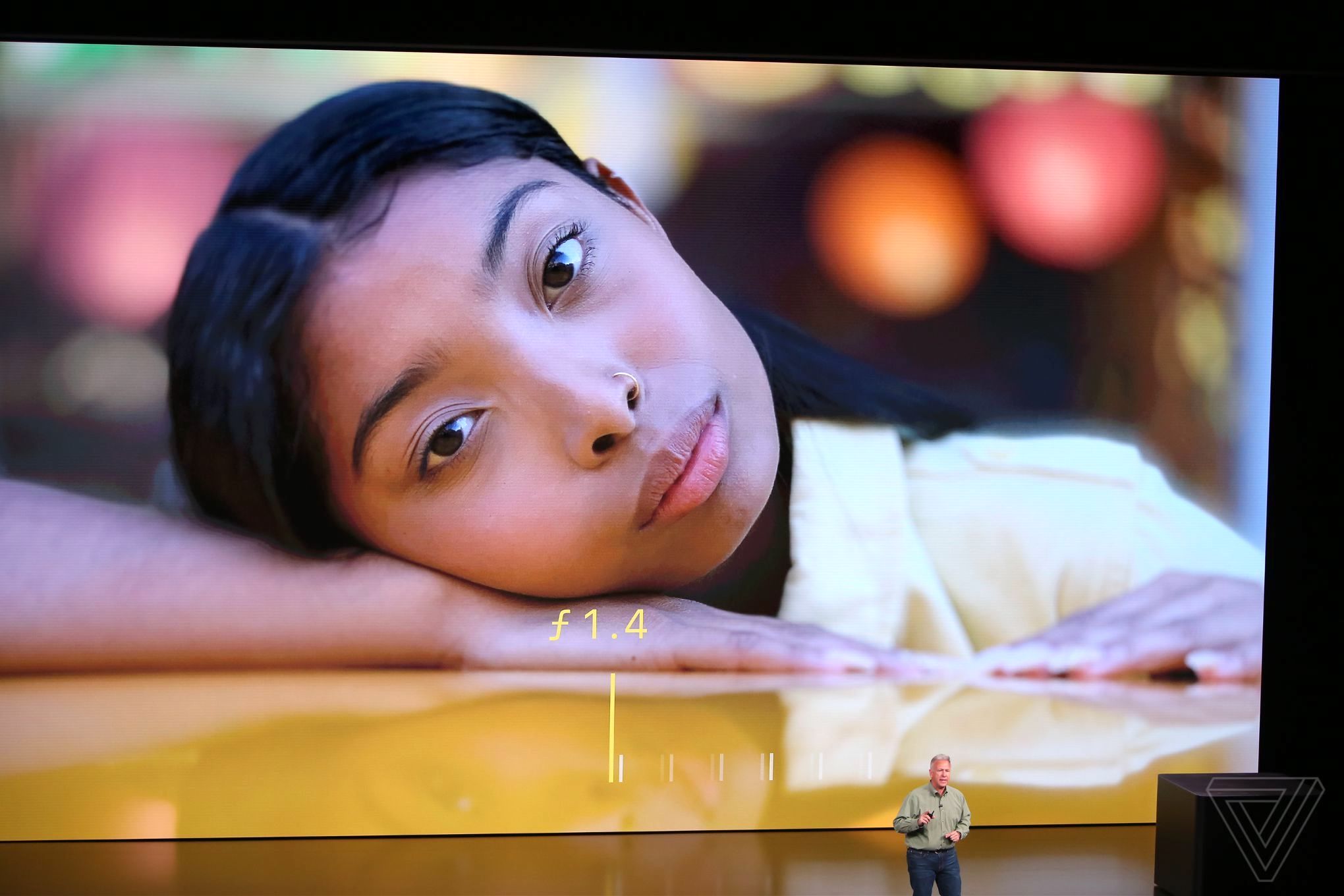

The main camera of the iPhone XS has 1.4-micron pixels, up from 1.22 microns in the iPhone X and on par with the Google Pixel 2. The bigger the pixels, the more light they can gather, which means more information to work with when creating a photo. This is the first time Apple has increased the pixel size since the iPhone 5S, having dropped to 1.22 microns when it moved to 12 megapixels with the 6S, so this could represent a major upgrade.
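To put those numbers in perspective, here is a rough back-of-the-envelope calculation. It assumes square pixels and treats light-gathering area as simply the pixel pitch squared, which ignores microlenses, sensor design, and everything else that affects real-world sensitivity. The function name is purely illustrative.

```python
def pixel_area_gain(new_pitch_um: float, old_pitch_um: float) -> float:
    """Ratio of per-pixel area, assuming square pixels of the given pitch."""
    return (new_pitch_um / old_pitch_um) ** 2


# Pixel pitches quoted in the article: 1.4 microns (iPhone XS) vs. 1.22 microns (iPhone X).
gain = pixel_area_gain(1.4, 1.22)
print(f"Each pixel covers about {(gain - 1) * 100:.0f}% more area")
```

Under that simplification, each pixel on the new sensor covers roughly 32 percent more area than before, which is why a seemingly small change in pitch can matter.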


Otherwise, the hardware is pretty much the same as on the X. There is still an f/1.8 six-element lens and an f/2.4 secondary telephoto lens on the XS and the XS Max, though the optics had to be redesigned for the new sensor. (The cheaper iPhone XR has the same main camera but no telephoto.) Apple also claims that the True Tone flash is improved, without providing details. And the selfie camera is unchanged beyond the "completely new video stabilization".

But as Schiller said on stage, hardware is only part of the story when it comes to camera technology. Computational photography techniques and software design are at least as important for getting great pictures out of phone-sized optics.

The A12 Bionic chip in the iPhone XS is designed with this use case in mind. This year, Apple has directly connected the image signal processor to the Neural Engine, the company's term for the part of the chip designed for machine learning and AI. This seems to be a big focus of the A12. It will be the first 7-nanometer processor in the world to ship in a smartphone, which should allow for more efficient performance, but Apple is only citing conventional speed increases of up to 15% compared to the A11. The gains in machine learning operations are significantly more dramatic, suggesting that Apple has used the headroom of a 7nm design to focus on Neural Engine development.

What this means for photography is that the camera will better understand what it is looking at. Schiller cited use cases such as facial landmarking, where the camera builds a map of your subject's face to remove unwanted effects like red-eye, and segmentation, where the camera works out how complex subjects relate to the depth of field.

Apple is also introducing a new computational photography feature called Smart HDR. It sounds similar to Google's excellent HDR+ technique on Pixel phones, although Google relies on combining several underexposed frames while Apple also uses overexposed frames to capture shadow detail. Apple says the feature relies on the faster sensor and the new image signal processor in the iPhone XS.
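The general idea of merging differently exposed frames can be illustrated with a toy example. This is not Apple's Smart HDR or Google's HDR+ pipeline, neither of which is described in detail here; it is a minimal sketch of a generic weighted exposure merge, assuming a perfectly linear sensor, perfectly aligned frames, and no noise. All function names and the sample values are hypothetical.

```python
def simulate_frame(radiance, gain):
    """Simulate a capture: scale scene radiance by exposure gain, clip to [0, 1]."""
    return [min(1.0, max(0.0, r * gain)) for r in radiance]


def well_exposedness(v):
    """Hat-shaped weight: 1.0 at mid-gray, 0.0 at pure black or pure white."""
    return max(0.0, 1.0 - abs(2.0 * v - 1.0))


def merge_exposures(frames, gains, eps=1e-6):
    """Merge bracketed frames into one estimate of relative scene radiance.

    frames: equal-length lists of pixel values in [0, 1], one list per exposure
    gains:  relative exposure of each frame (0.5 = one stop under, 2.0 = one stop over)
    """
    merged = []
    for samples in zip(*frames):
        num = 0.0
        den = 0.0
        for v, g in zip(samples, gains):
            w = well_exposedness(v) + eps  # eps avoids division by zero
            num += w * (v / g)             # undo the exposure gain
            den += w
        merged.append(num / den)
    return merged


# Hypothetical scene: a deep shadow, a midtone, and a highlight that clips at normal exposure.
scene = [0.05, 0.4, 1.6]
gains = [0.5, 1.0, 2.0]  # one underexposed, one normal, one overexposed frame
frames = [simulate_frame(scene, g) for g in gains]
merged = merge_exposures(frames, gains)
print(merged)
```

In this sketch the highlight is recovered almost entirely from the underexposed frame, since the other two frames clip to white and receive near-zero weight, while the shadow pixel draws most of its weight from the overexposed frame. That mirrors the trade-off described above between relying only on underexposed frames and adding overexposed ones for shadow detail.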

The other main update to the iPhone's camera software is how it handles bokeh, the quality of the out-of-focus areas of a photo. Apple says it has thoroughly studied high-end cameras and lenses to make bokeh look more realistic in Portrait mode, and a new feature called Depth Control lets you adjust the degree of blur after taking a photo. Similar functionality is available on phones such as Samsung's Galaxy Note 8, as well as on existing iPhones via third-party apps that use the depth information APIs.
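To see why stored depth information makes this kind of after-the-fact adjustment possible, consider the standard thin-lens formula for the blur circle (circle of confusion) of an out-of-focus point. The sketch below is not Apple's Depth Control implementation; it only shows how, given a per-pixel distance and a chosen focus distance, a simulated f-number determines how much blur to apply. The 50 mm lens, the distances, and the function name are all hypothetical.

```python
def blur_diameter_mm(subject_m, focus_m, focal_mm, f_number):
    """Thin-lens circle-of-confusion diameter on the sensor, in millimeters.

    subject_m: distance to the point being rendered (e.g. from a depth map)
    focus_m:   distance the simulated lens is focused at
    focal_mm:  focal length of the simulated lens
    f_number:  simulated aperture (lower = wider aperture = more blur)
    """
    f = focal_mm / 1000.0          # focal length in meters
    aperture = f / f_number        # aperture diameter in meters
    coc = aperture * abs(subject_m - focus_m) / subject_m * f / (focus_m - f)
    return coc * 1000.0


# Hypothetical portrait: subject in focus at 2 m, background 5 m away, simulated 50 mm lens.
for n in (1.4, 2.8, 5.6, 16.0):
    print(f"f/{n}: background blur circle = {blur_diameter_mm(5.0, 2.0, 50.0, n):.3f} mm")
```

Because the blur diameter is inversely proportional to the f-number, halving the simulated aperture value doubles the blur on everything outside the focal plane, while points at the focus distance stay sharp. A slider that changes the f-number after capture only needs the depth of each pixel to recompute this.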

Portrait mode on the iPhone XS still uses the secondary telephoto camera, which offers a more natural and flattering perspective for pictures of people than the single-lens solution used by the Pixel. The single-camera iPhone XR, however, does have the same bokeh and Depth Control features.

Going by what Apple is saying, the iPhone XS could be the biggest iPhone camera update in a while, despite the lack of flashy features or eye-catching hardware. The sample photos on Apple's site are certainly impressive. But Apple has been putting iPhone photos on billboards since the iPhone 6; the real test will come when the XS is in the hands of millions of regular users.

With HDR+ being Google's main advantage, the performance of Smart HDR will be decisive for the iPhone XS's ability to compete with the Pixel. Apple seems to be saying the right things, but Google has a head start; as Marc Levoy told The Verge, its system improves through machine learning without anyone touching it. And that is before considering whatever improvements have been made for the Pixel 3, which has leaked extensively and should land in less than a month.

However, if the iPhone XS can even match the basic image quality of last year's Pixel 2, it would be a significant and immediately noticeable improvement for every iPhone user. This is a year when Apple has to deliver on the camera if it does not want to fall behind. And with availability starting next Friday, we won't have to wait long to find out exactly what the world's most popular camera maker has come up with.
