
Deepfakes, ultra-realistic fake videos manipulated with the aid of machine learning, can be remarkably convincing. Researchers continue to develop new methods for creating these videos, for better or, more likely, for worse. The most recent method comes from researchers at Carnegie Mellon University, who have found a way to automatically transfer "style" from one person to another.

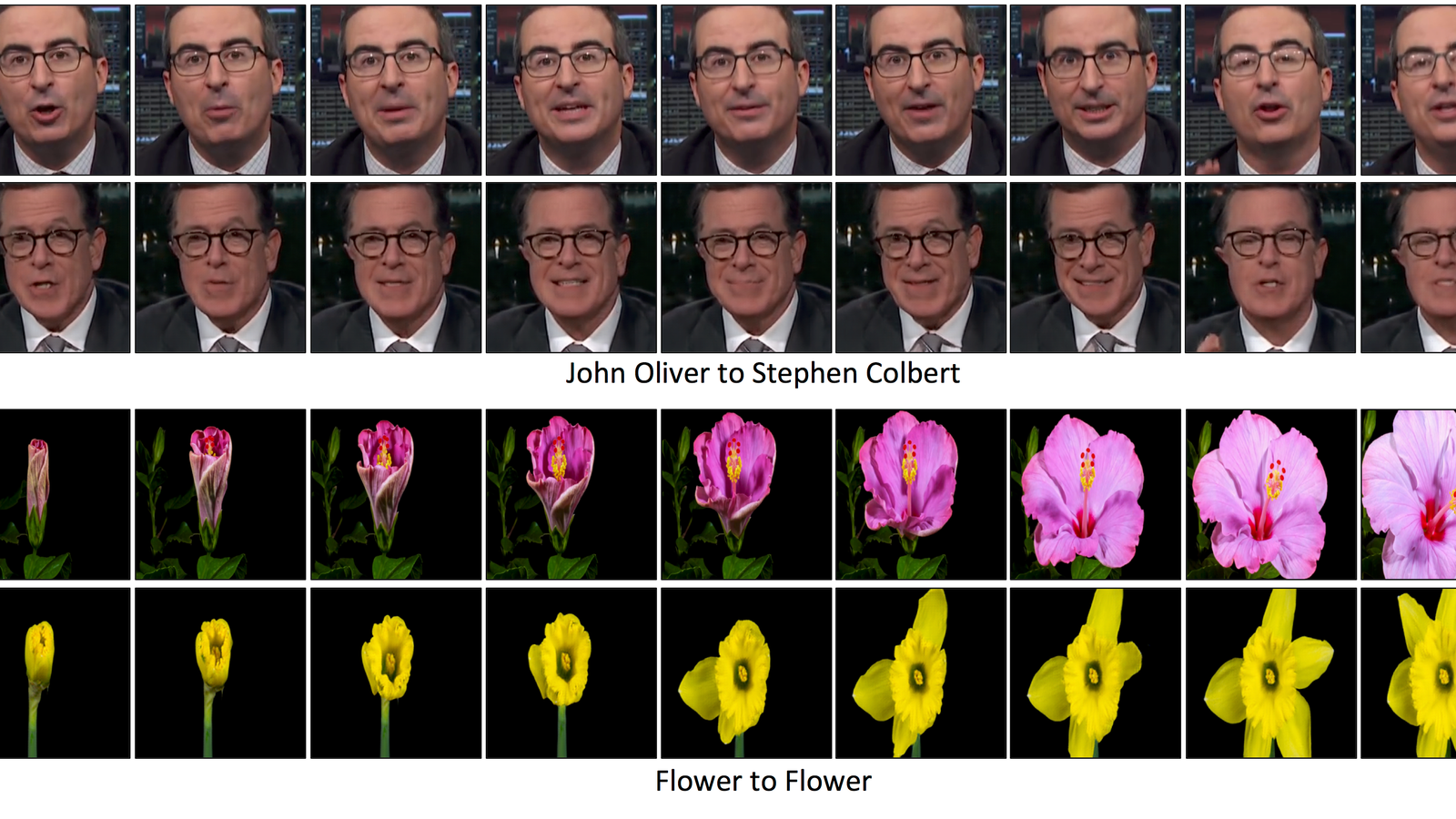

"For example, the style of Barack Obama can be transformed into Donald Trump," the researchers wrote in the description of a YouTube video highlighting the results of this method. The video shows facial expressions transferred from John Oliver to Stephen Colbert and to an animated frog, from Martin Luther King Jr. to Obama, and from Obama to Trump.

The first example, Oliver to Colbert, is far from the most realistic manipulated video out there. It looks low-quality, with some facial features blurred in places, almost as if you were streaming an interview over an incredibly weak Wi-Fi connection. The other examples (excluding the frog) are certainly more convincing, with the deepfake mirroring the facial expressions and mouth movements of the original subject.

In their paper, the researchers describe the process as an "unsupervised data driven approach." Like other methods for producing deepfakes, this one relies on artificial intelligence. The paper is not limited to transferring speaking style and facial movements from one person to another; it also includes examples involving blooming flowers, sunrises and sunsets, and clouds and wind. For human-to-human transfer, the researchers point to several stylistic details that carry over, including "smiling John Oliver's dimple, Donald Trump's characteristic mouth shape, and Stephen Colbert's facial lips and smile." The team used publicly available videos to create these deepfakes.

It's easy to see how these techniques could be applied in more harmless ways. The John Oliver to cartoon frog example, for instance, points to a potentially useful tool for creating realistic anthropomorphic animation. But as we have seen, the proliferation of increasingly realistic deepfakes has consequences: it gives bad actors tools that make such videos cheap and easy to produce. Over time, these videos could dangerously mislead the public and become a harmful tool for political propaganda.
