
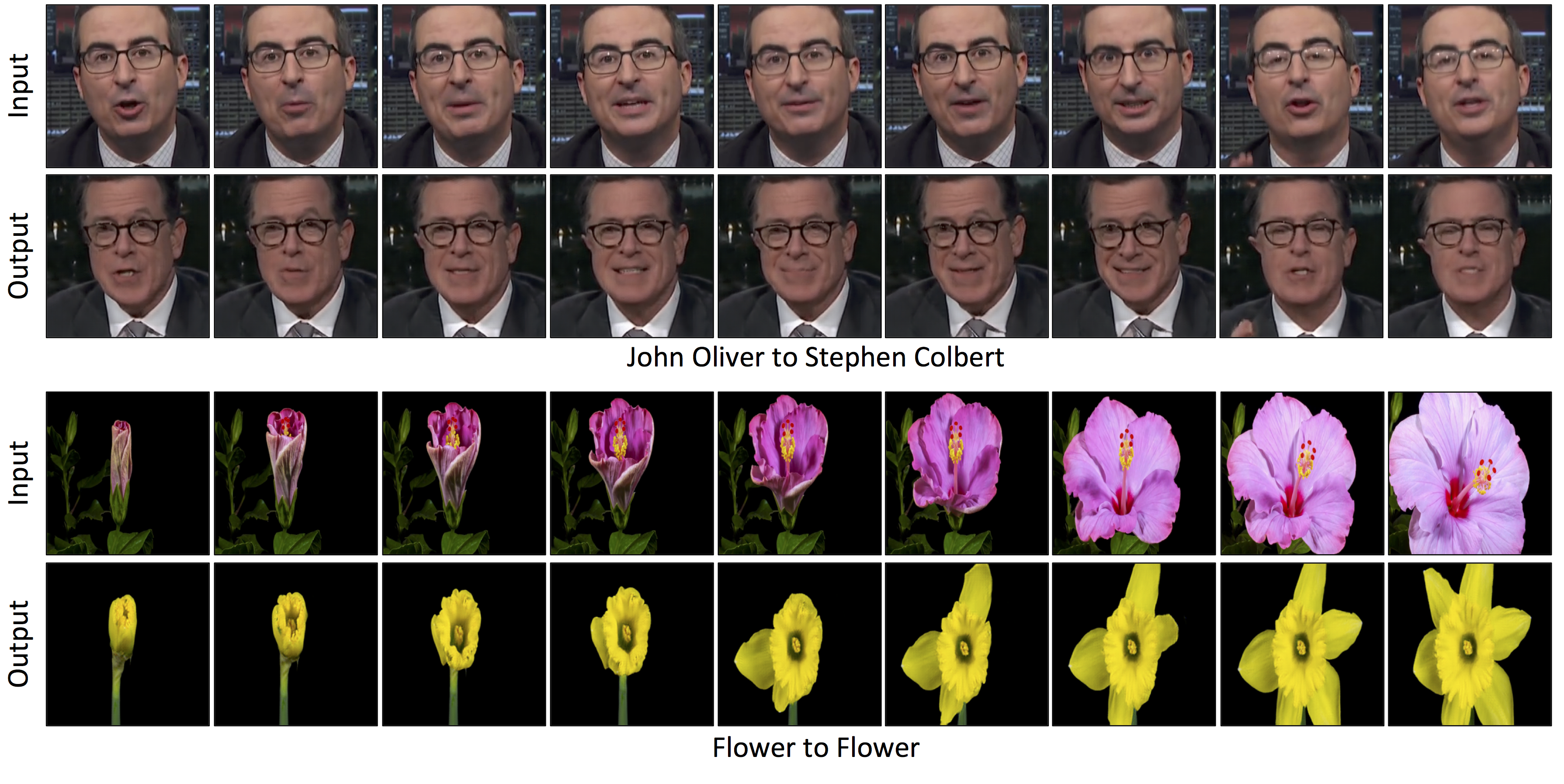

Researchers from Carnegie Mellon University have created a method for transferring the style of one video onto another. The results may look a little blurry at first glance, but take a look at the video below. In this paper, the researchers took an entire clip of John Oliver and made it look as though Stephen Colbert were saying it. They were also able to make one flower mimic the blooming motion of another.

In short, they can make someone (or something) appear to do something they have never done.

"I think there are a lot of stories to tell," said Ph.D. student Aayush Bansal. He and the team built the tool to make complex films easier to shoot, for example by capturing motion in simple, well-lit scenes and then transferring it into a completely different style or environment.

"It's a tool for the artist that gives them an initial model that they can then improve," he said.

The system uses generative adversarial networks (GANs) to transfer the style of one image onto another without requiring much matching data. GANs, however, create many artifacts that can ruin a video as it plays.

A GAN consists of two models: a discriminator that learns to recognize what is consistent with the style of an image or video, and a generator that learns to create images or videos in that style. The two compete, with the generator trying to fool the discriminator and the discriminator judging how convincing the generator's output is, and through this competition the system eventually learns how content can be transformed into the target style.
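The competition described above is usually expressed as a pair of loss functions. The sketch below shows the standard binary cross-entropy form (with the common "non-saturating" generator loss). It is a generic illustration, not the Carnegie Mellon team's code: the function names are hypothetical, and instead of real neural networks it simply takes the discriminator's output probabilities as input.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy loss for the discriminator.

    d_real: probabilities the discriminator gives real samples of being real.
    d_fake: probabilities it gives generated samples of being real.
    The discriminator is rewarded for pushing d_real toward 1 and d_fake toward 0.
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return -(real_term + fake_term)


def generator_loss(d_fake):
    """Non-saturating generator loss: the generator wants d_fake close to 1."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)


# When the discriminator cannot tell real from fake, it outputs 0.5 everywhere.
print(round(discriminator_loss([0.5, 0.5], [0.5, 0.5]), 3))  # 1.386
print(round(generator_loss([0.5]), 3))                       # 0.693
# A discriminator that confidently spots fakes makes the generator's loss large.
print(round(generator_loss([0.1]), 3))                       # 2.303
```

Each model lowers its own loss only at the other's expense, which is what drives the generator toward output the discriminator cannot distinguish from the target style.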

The researchers created a variant called Recycle-GAN that reduces these imperfections by using "not only spatial but also temporal information."

"This additional information, accounting for changes over time, further constrains the process and produces better results," the researchers wrote.
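The article does not say how the temporal information is used. The Recycle-GAN paper (Bansal et al., ECCV 2018) describes a "recycle" loss: past frames of a video are mapped from domain X into domain Y, a temporal predictor guesses the next frame in Y, that guess is mapped back to X, and the squared difference from the real next frame is penalized. The sketch below illustrates only this one term on toy data, and it rests on several assumptions: frames are single numbers, the two "generators" are fixed scaling functions, and the predictor is simple linear extrapolation. All of these are hypothetical stand-ins for the neural networks used in practice, and the full training objective also includes adversarial terms that are not shown.

```python
def recycle_loss(frames, to_y, to_x, predict_next_y):
    """Toy version of a temporal "recycle" loss.

    For each step t (starting once the predictor has two past frames), map the
    past frames frames[:t] into domain Y, predict the next Y frame, map the
    prediction back into domain X and compare it with the real frame frames[t]
    using a squared error.
    """
    total = 0.0
    for t in range(2, len(frames)):
        past_y = [to_y(x) for x in frames[:t]]      # X -> Y for every past frame
        predicted_x = to_x(predict_next_y(past_y))  # predict in Y, map back to X
        total += (frames[t] - predicted_x) ** 2     # compare with the real frame
    return total


# Hypothetical stand-ins for the learned networks (frames are plain numbers).
def toy_to_y(x):
    return 2 * x  # "generator" from X to Y


def toy_to_x(y):
    return y / 2  # "generator" from Y to X (exact inverse of toy_to_y)


def toy_predict_next_y(ys):
    return 2 * ys[-1] - ys[-2]  # linear extrapolation from the last two frames


print(recycle_loss([0, 1, 2, 3], toy_to_y, toy_to_x, toy_predict_next_y))  # 0.0
print(recycle_loss([0, 1, 4, 9], toy_to_y, toy_to_x, toy_predict_next_y))  # 8.0
```

Steady motion that the predictor can follow costs nothing, while motion it cannot follow, or a pair of generators that do not undo each other, raises the loss. Penalizing inconsistency across time in this way is roughly the sense in which the extra temporal information constrains the translation.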

Recycle-GAN could obviously be used to create so-called deepfakes, allowing malicious actors to make it look as though someone said or did something they never did. Bansal and his team are aware of the problem.

"It was an eye-opener for all of us in the field that such fakes would be created and have such an impact. It will be important to find ways to detect them," said Bansal.
