
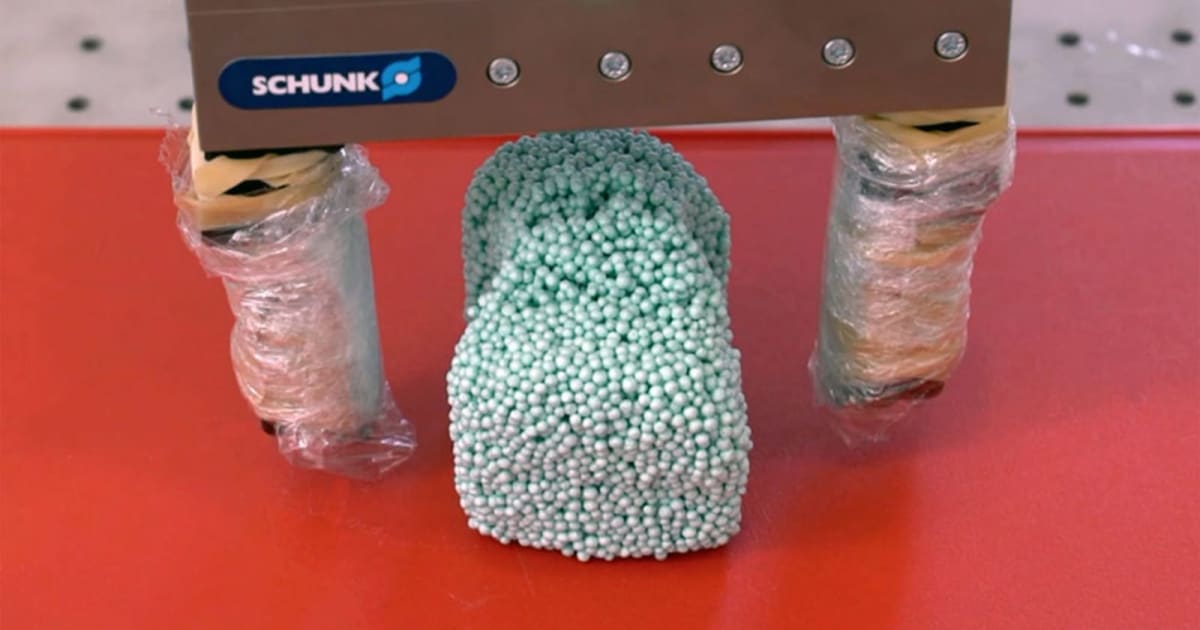

The team demonstrated its system by tasking a two-finger robot, RiceGrip, with pressing deformable foam into a desired shape, much like forming sushi. The robot used a depth camera and object recognition to identify the foam, then used the model to treat the foam as a dynamic graph for deformable materials. Although it already had some idea of how the particles would react, it adjusted its model whenever the "sushi" behaved in an unexpected way.
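The article doesn't describe the researchers' code, but the idea of a "dynamic graph" of particles can be illustrated. What follows is a minimal sketch, assuming each particle is a 3D point (for example, sampled from the depth camera's view of the foam) and that an edge links any two particles closer than a fixed radius. The function name `build_particle_graph` and the radius rule are hypothetical choices for illustration, not the team's method. The graph is "dynamic" because it is rebuilt as the foam deforms, so which particles interact can change from step to step.

```python
import math
from typing import Dict, List, Tuple

# A particle is a 3D point, e.g. sampled from a depth-camera point cloud (assumption).
Point = Tuple[float, float, float]


def build_particle_graph(particles: List[Point], radius: float) -> Dict[int, List[int]]:
    """Link every pair of particles closer than `radius` (hypothetical neighborhood rule)."""
    graph: Dict[int, List[int]] = {i: [] for i in range(len(particles))}
    for i in range(len(particles)):
        for j in range(i + 1, len(particles)):
            # Euclidean distance between the two particles (math.dist needs Python 3.8+).
            if math.dist(particles[i], particles[j]) < radius:
                graph[i].append(j)
                graph[j].append(i)
    return graph


# Three particles of "foam": the third starts out of reach of the other two.
foam = [(0.0, 0.0, 0.0), (0.5, 0.0, 0.0), (2.0, 0.0, 0.0)]
print(build_particle_graph(foam, radius=1.0))  # {0: [1], 1: [0], 2: []}

# After the gripper squeezes the foam, the third particle moves closer,
# so rebuilding the graph adds a new edge between particles 1 and 2.
foam[2] = (1.2, 0.0, 0.0)
print(build_particle_graph(foam, radius=1.0))  # {0: [1], 1: [0, 2], 2: [1]}
```

A learned model would then predict how each particle moves based on its neighbors in this graph; that prediction step, and the online correction the robot performs when the foam surprises it, are not shown here.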

It's still early days. The scientists want to extend their approach to partially observable situations (for example, predicting how a pile of boxes will fall), and they would like it to work directly with images. If and when that happens, it could represent a breakthrough for robots: they would find it easier to handle virtually any type of object, even liquids or soft solids whose behavior is hard to predict in advance. This learning-based method makes that prospect a lot more realistic.
