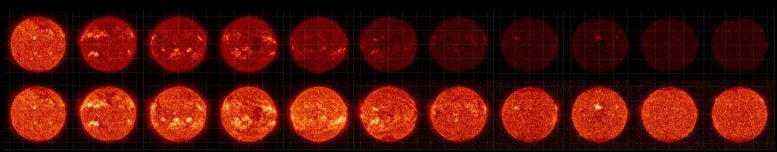

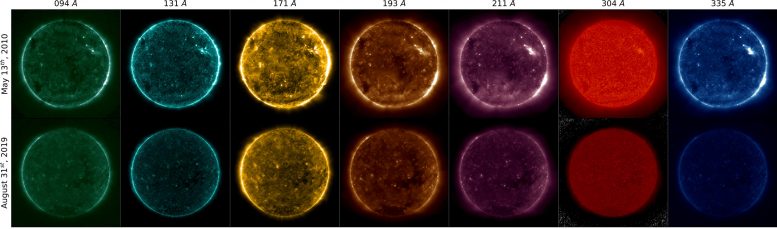

The top row of images shows the degradation of AIA’s 304 Angstrom wavelength channel over the years since SDO’s launch. The bottom row of images is corrected for this degradation using a machine learning algorithm. Credit: Luiz Dos Santos / NASA GSFC

A group of researchers is using artificial intelligence techniques to calibrate some of NASA's images of the Sun, helping to improve the data scientists use for solar research. The new technique was published in the journal Astronomy & Astrophysics on April 13, 2021.

A solar telescope has a tough job. Watching the Sun takes its toll, with constant bombardment by an endless stream of solar particles and intense sunlight. Over time, the sensitive lenses and sensors of solar telescopes begin to degrade. To keep the data returned from these instruments accurate, scientists periodically recalibrate them to understand exactly how each instrument is changing.

Launched in 2010, NASA's Solar Dynamics Observatory, or SDO, has been providing high-definition images of the Sun for more than a decade. Its images have given scientists detailed insight into various solar phenomena that can trigger space weather and affect our astronauts and technology on Earth and in space. The Atmospheric Imaging Assembly, or AIA, is one of two imaging instruments on SDO and constantly stares at the Sun, taking images across 10 wavelengths of ultraviolet light every 12 seconds. This creates a wealth of information about the Sun unlike any other, but, like all Sun-observing instruments, AIA degrades over time, and its data must be frequently calibrated.

This image shows seven of the ultraviolet wavelengths observed by the Atmospheric Imaging Assembly aboard NASA’s Solar Dynamics Observatory. The top row contains observations taken from May 2010 and the bottom row shows observations from 2019, without any corrections, showing how the instrument has degraded over time. Credit: Luiz Dos Santos / NASA GSFC

Since SDO's launch, scientists have used sounding rockets to calibrate AIA. Sounding rockets are smaller rockets that typically carry only a few instruments and make short flights into space, usually lasting only about 15 minutes. Crucially, sounding rockets fly above most of Earth's atmosphere, allowing the instruments on board to see the ultraviolet wavelengths measured by AIA. These wavelengths of light are absorbed by Earth's atmosphere and cannot be measured from the ground. To calibrate AIA, scientists attach an ultraviolet telescope to a sounding rocket and compare its data to AIA's measurements. They can then make adjustments to account for any changes in the AIA data.
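The comparison step can be pictured with a deliberately simplified sketch. It assumes, hypothetically, that degradation in one channel acts as a single multiplicative dimming factor and that the rocket-borne reference reports the mean signal AIA *should* record, in the same units. The function names `degradation_factor` and `correct_image` are illustrative only, not part of any NASA pipeline, and real calibration involves far more detail.

```python
import numpy as np

def degradation_factor(aia_image: np.ndarray, reference_mean: float) -> float:
    """Estimate one multiplicative degradation factor for a single channel.

    Hypothetical assumption: the rocket-borne reference gives the mean signal
    an undegraded AIA channel would record, in the same units as AIA.
    """
    return float(aia_image.mean()) / reference_mean

def correct_image(aia_image: np.ndarray, factor: float) -> np.ndarray:
    """Undo the estimated dimming by dividing the factor back out."""
    return aia_image / factor

# Toy example: a "true" scene dimmed to 80% by instrument degradation.
rng = np.random.default_rng(42)
true_scene = rng.uniform(50.0, 150.0, size=(64, 64))
observed = 0.8 * true_scene          # degraded AIA image (synthetic)
reference = true_scene.mean()        # what the rocket instrument "measured"

factor = degradation_factor(observed, reference)
restored = correct_image(observed, factor)
print(round(factor, 3))  # 0.8
```

Between rocket flights, a factor like this is only known at the flight dates, which is exactly the gap the machine learning approach described below aims to fill.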

The sounding rocket calibration method has certain drawbacks. Sounding rockets can only be launched so often, but AIA is constantly watching the Sun. This means that, in the gaps between sounding rocket flights, the calibration can drift slightly out of date.

"This is also important for deep space missions, which won't have the option of sounding rocket calibration," said Dr. Luiz Dos Santos, solar physicist at NASA's Goddard Space Flight Center in Greenbelt, Maryland, and lead author of the paper. "We are tackling two problems at the same time."

Virtual calibration

With these challenges in mind, the scientists decided to explore other options for calibrating the instrument, with the goal of continuous calibration. Machine learning, a technique used in artificial intelligence, seemed like a perfect fit.

As the name suggests, machine learning requires a computer program, or algorithm, to learn how to perform its task.

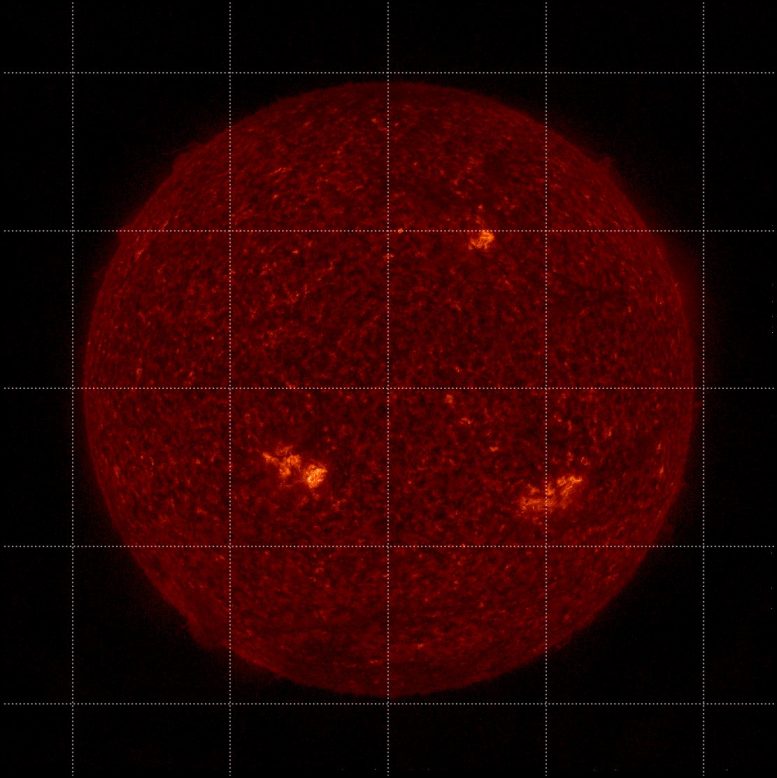

The Sun as seen by AIA in 304 Angstrom light in 2021 before degradation correction (see the image below for the same view with corrections from a sounding rocket calibration). Credit: NASA GSFC

First, the researchers had to train a machine learning algorithm to recognize solar structures and how to compare them using AIA data. They did this by giving the algorithm images from sounding rocket calibration flights and telling it the correct amount of calibration each one needed. After enough of these examples, they gave the algorithm similar images and checked whether it identified the correct calibration. With enough data, the algorithm learns to identify the amount of calibration needed for each image.
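The train-then-test loop described above is ordinary supervised learning: labeled examples in, a fitted model out, then predictions on unseen inputs. The toy below illustrates only that idea and is not the model from the paper. It swaps in plain least-squares regression on a few hand-picked image statistics, and it uses synthetic "solar images" (a bright blob on a dim background) dimmed by a random, known factor that plays the role of the calibration label. All function names and parameters are hypothetical.

```python
import numpy as np

def make_example(rng: np.random.Generator, size: int = 32):
    """Synthetic labeled example: a bright blob on a dim background,
    dimmed by a known degradation factor alpha (the training label)."""
    y, x = np.mgrid[0:size, 0:size]
    cx, cy = rng.uniform(8, size - 8, size=2)
    clean = 1.0 + 10.0 * np.exp(-((x - cx) ** 2 + (y - cy) ** 2) / 20.0)
    alpha = rng.uniform(0.3, 1.0)
    return alpha * clean, alpha

def features(img: np.ndarray) -> np.ndarray:
    """Hand-picked image statistics plus a bias term (a stand-in for learned features)."""
    return np.array([1.0, img.mean(), img.max(), np.median(img)])

rng = np.random.default_rng(0)

# Training: fit weights mapping image features -> known degradation factor.
train = [make_example(rng) for _ in range(200)]
X = np.stack([features(img) for img, _ in train])
y = np.array([alpha for _, alpha in train])
weights, *_ = np.linalg.lstsq(X, y, rcond=None)

# Testing: predict the factor for images the model has never seen.
test = [make_example(rng) for _ in range(50)]
preds = np.array([features(img) @ weights for img, _ in test])
truth = np.array([alpha for _, alpha in test])
mae = float(np.mean(np.abs(preds - truth)))
print(f"mean absolute error: {mae:.4f}")
```

Least squares keeps the sketch short and dependency-light; a real system working on full-resolution, multi-wavelength solar images would need a far more expressive model that learns its own features.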

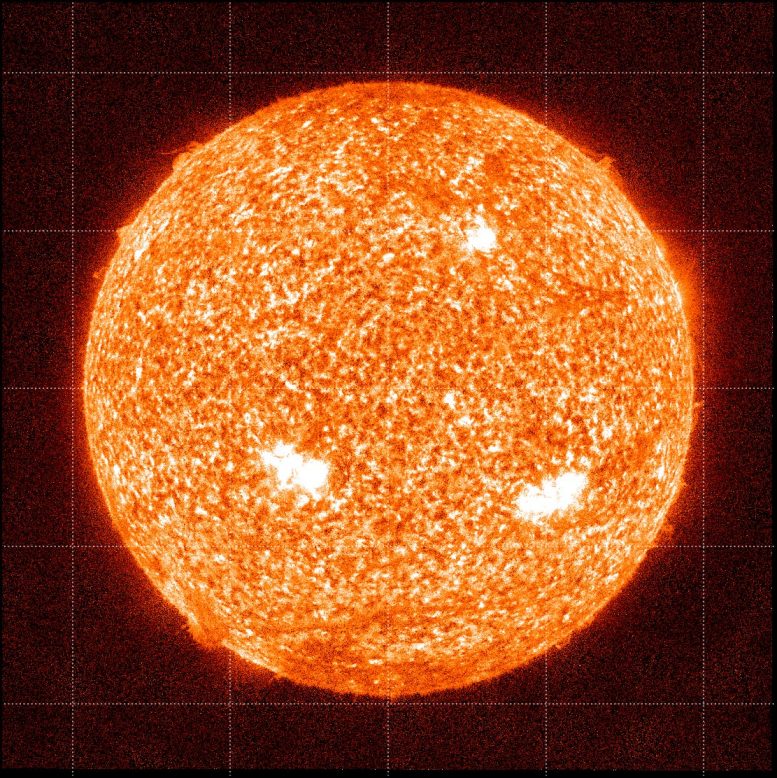

The Sun as seen by AIA in 304 Angstrom light in 2021 with corrections from a sounding rocket calibration (see the previous image above for the same view before degradation correction). Credit: NASA GSFC

Because AIA looks at the Sun in multiple wavelengths of light, researchers can also use the algorithm to compare specific structures across wavelengths and strengthen its assessments.

To begin with, they would teach the algorithm what a solar flare looked like by showing it solar flares across all of AIA's wavelengths until it recognized solar flares in every type of light. Once the program can recognize a solar flare without any degradation, the algorithm can then determine how much degradation is affecting current AIA images and how much calibration each one needs.

“It was the big thing,” Dos Santos said. “Instead of just identifying it on the same wavelength, we are identifying structures across wavelengths.”

This means that researchers can be more confident in the calibration identified by the algorithm. Indeed, when the researchers compared their virtual calibration data to the sounding rocket calibration data, the machine learning program's results were spot on.

With this new process, researchers are poised to continuously calibrate AIA images between sounding rocket calibration flights, improving the accuracy of SDO data for researchers.

Machine learning beyond the Sun

Researchers are also using machine learning to better understand conditions closer to home.

A group of researchers led by Dr. Ryan McGranaghan, senior data scientist and aerospace engineer at ASTRA LLC and NASA's Goddard Space Flight Center, used machine learning to better understand the connection between Earth's magnetic field and the ionosphere, the electrically charged part of Earth's upper atmosphere. By applying data science techniques to large volumes of data, they used machine learning to develop a newer model that helped them better understand how energized particles from space rain down into Earth's atmosphere, where they drive space weather.

As machine learning advances, its scientific applications will expand to more and more missions. In the future, this may mean that deep space missions, which go to places where sounding rocket calibration flights are not possible, can still be calibrated and continue to provide accurate data, even as they travel farther and farther from Earth or any star.

Reference: "Multichannel Autocalibration for the Atmospheric Imaging Assembly Using Machine Learning" by Luiz FG Dos Santos, Souvik Bose, Valentina Salvatelli, Brad Neuberg, Mark CM Cheung, Miho Janvier, Meng Jin, Yarin Gal, Paul Boerner and Atılım Güneş Baydin, April 13, 2021, Astronomy & Astrophysics.

DOI: 10.1051/0004-6361/202040051
