
(Image: Deep Mind)

Deep Mind had AIs play Quake 3 Capture-The-Flag. The machines play better than humans.

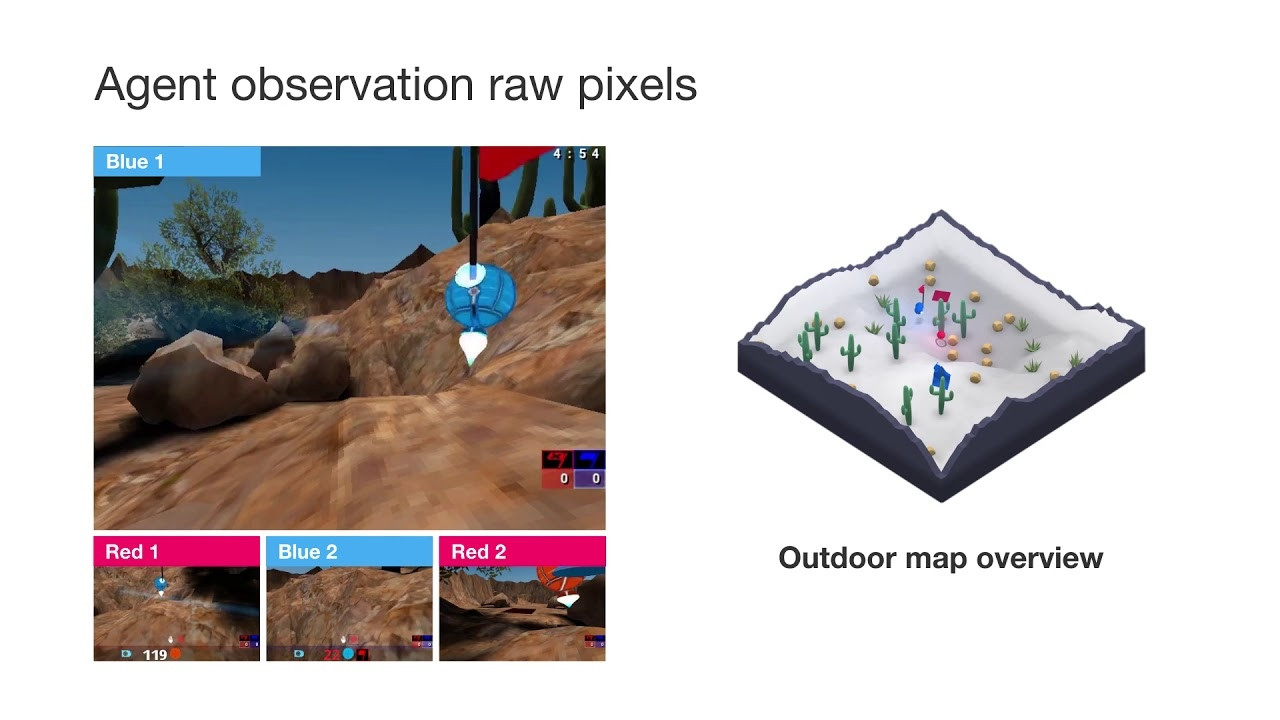

Following its superhuman Go AI, Google's Deep Mind now has AIs that play Capture-The-Flag (CTF) better than humans. The AI agents simply evaluate the image rendered by the classic shooter Quake 3 and press virtual controller buttons to play. Deep Mind has published details about the AI on its blog.

Reinforcement Learning …

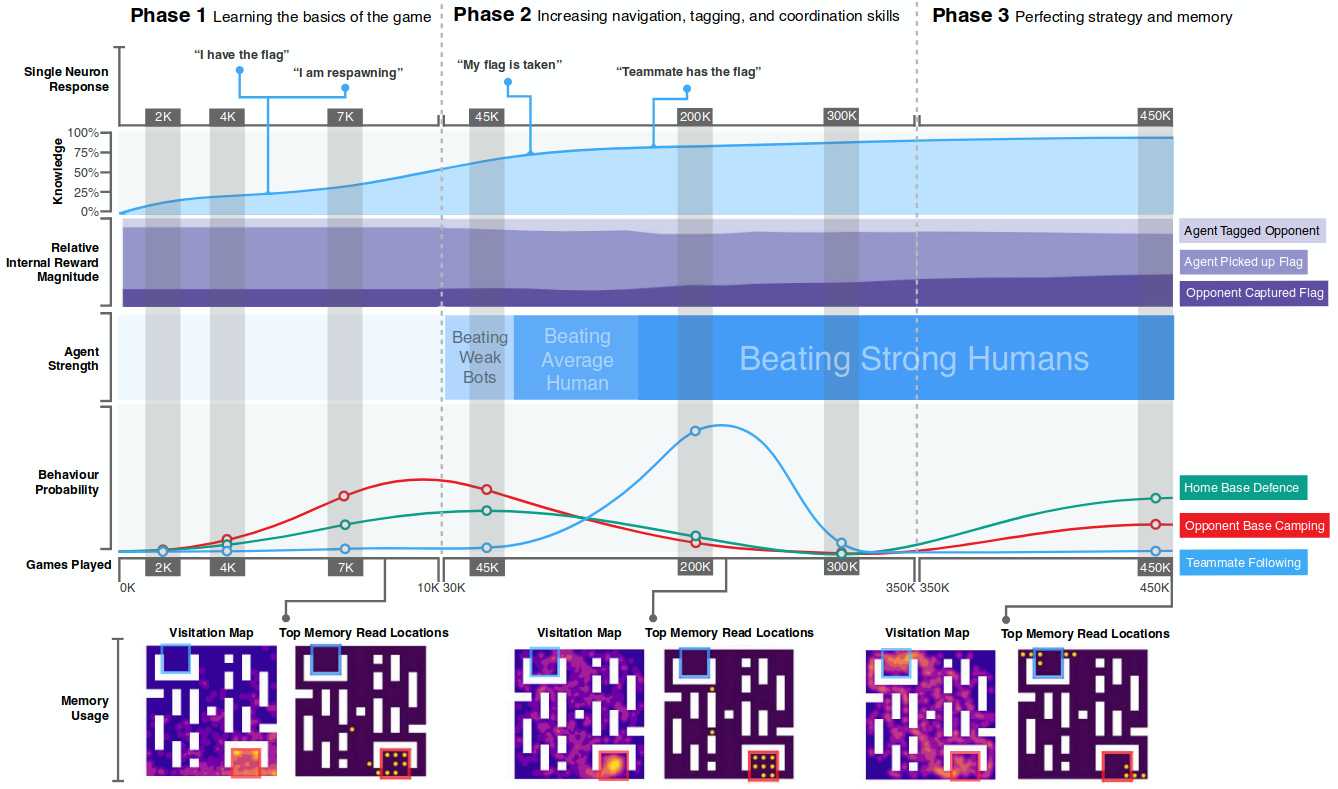

First, the AI learns how to move and the basic rules of the game. In a second phase, it learns to find its way around the map. In the third phase, it learns cooperation and tactics.

(Image: Human-level performance in first-person multiplayer games with population-based deep reinforcement learning, Jaderberg et al., 2018)

In principle, Deep Mind's Quake AI works like most neural-network agents trained with reinforcement learning. A multilayer convolutional network analyzes the image content and produces a vector that a recurrent network uses as input. The recurrent network computes the probabilities with which it is advisable to press certain buttons and tries to predict the reward to expect from its actions. During training, the network is rewarded when it wins a game.
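The pipeline described above (convolutional encoder, recurrent core, button probabilities, reward prediction) can be sketched as a single forward pass in plain Python. This is a minimal toy under stated assumptions, not Deep Mind's architecture: the 6x6 grayscale frame, the single 3x3 kernel, the Elman-style recurrent cell, the four buttons, the random weights and the name `TinyAgent` are all hypothetical, and the reinforcement-learning update that would adjust the weights from the win/loss reward is omitted.

```python
import math
import random


def conv2d_valid(image, kernel):
    """Single-channel 2D convolution (no padding, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(x):
    return max(0.0, x)


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


class TinyAgent:
    """Hypothetical toy agent: conv encoder -> recurrent core -> policy and value heads."""

    def __init__(self, frame_size=6, n_buttons=4, hidden=8, seed=0):
        rng = random.Random(seed)

        def rand():
            return rng.uniform(-0.5, 0.5)

        self.kernel = [[rand() for _ in range(3)] for _ in range(3)]
        n_feat = (frame_size - 2) ** 2  # size of the flattened 3x3 "valid" conv output
        self.w_in = [[rand() for _ in range(n_feat)] for _ in range(hidden)]
        self.w_rec = [[rand() for _ in range(hidden)] for _ in range(hidden)]
        self.w_pi = [[rand() for _ in range(hidden)] for _ in range(n_buttons)]
        self.w_v = [rand() for _ in range(hidden)]
        self.h = [0.0] * hidden  # recurrent state carried from frame to frame

    def step(self, frame):
        # 1. Convolutional encoder turns the pixels into a feature vector.
        feat = [relu(x) for row in conv2d_valid(frame, self.kernel) for x in row]
        # 2. Recurrent core mixes the new features with its previous state.
        self.h = [
            math.tanh(
                sum(w * f for w, f in zip(self.w_in[k], feat))
                + sum(w * h for w, h in zip(self.w_rec[k], self.h))
            )
            for k in range(len(self.h))
        ]
        # 3. Policy head: one probability per controller button.
        probs = softmax([sum(w * h for w, h in zip(row, self.h)) for row in self.w_pi])
        # 4. Value head: the network's estimate of the reward still to come.
        value = sum(w * h for w, h in zip(self.w_v, self.h))
        return probs, value


if __name__ == "__main__":
    agent = TinyAgent()
    frame = [[random.random() for _ in range(6)] for _ in range(6)]
    probs, value = agent.step(frame)
    print(probs, value)
```

Keeping the recurrent state `self.h` between calls is what lets such an agent carry information from earlier frames into later decisions.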

… with memory

The usual agents of this type lack the foresight needed for effective strategies. That is why Deep Mind's AI uses a differentiable neural computer as long-term memory. The researchers were able to show that the AI stores useful information about the map and tactics in this memory. For example, some vectors appear to encode the distance to the opponent's base or whether the agent's own team currently holds the flag. The agents' behavior also shows that they adapt, for example, when the opposing team has taken their own flag.
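One core mechanism of a differentiable neural computer is content-based reading: the network emits a key vector, compares it with every memory row by cosine similarity, and reads a softmax-weighted average of the rows. Because every step is smooth, gradients can flow through the read. The sketch below shows only this read operation, under the assumption of a tiny hand-made memory; write heads, usage-based allocation and temporal links of a full DNC are left out, and `content_read` is a hypothetical name, not Deep Mind's code.

```python
import math


def cosine(u, v, eps=1e-8):
    """Cosine similarity; eps avoids division by zero for all-zero rows."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv + eps)


def content_read(memory, key, strength):
    """Content-based read: soft attention over memory rows by cosine similarity.

    strength sharpens (large) or flattens (small) the attention distribution.
    """
    scores = [strength * cosine(key, row) for row in memory]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    width = len(memory[0])
    read = [sum(w * row[j] for w, row in zip(weights, memory)) for j in range(width)]
    return weights, read


if __name__ == "__main__":
    memory = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    weights, read = content_read(memory, key=[0.9, 0.1, 0.0], strength=10.0)
    print(weights, read)  # attention concentrates on the first row
```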

Virtual Evolution

The performance of these agents depends on many hyperparameters, which Deep Mind did not optimize by hand. Instead, the researchers created a population of different agents and used an evolutionary algorithm so that only the agents that played particularly well survived. The hyperparameters of the best neural networks were mutated again and again to produce better AIs over time.
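The evolutionary loop described above resembles population-based training: score every agent, let the weakest ones copy the hyperparameters of strong ones (exploit), then mutate the copies (explore). The sketch below assumes a hypothetical `evaluate` function standing in for playing matches, uses a single made-up hyperparameter `lr`, and picks 0.8/1.2 perturbation factors as an assumption; unlike a real setup it copies only hyperparameters, not network weights.

```python
import random


def pbt_generation(population, evaluate, rng, bottom_frac=0.25, perturb=(0.8, 1.2)):
    """One exploit/explore round over a population of agents.

    population: list of dicts {"hparams": {...}, "score": float}
    evaluate:   hypothetical function mapping hparams -> fitness (e.g. win rate)
    Returns the population sorted by this round's score, best first.
    """
    for agent in population:
        agent["score"] = evaluate(agent["hparams"])
    ranked = sorted(population, key=lambda a: a["score"], reverse=True)
    n_replace = max(1, int(len(ranked) * bottom_frac))
    winners, losers = ranked[:n_replace], ranked[-n_replace:]
    for loser in losers:
        parent = rng.choice(winners)
        # Exploit: take a strong agent's hyperparameters. Explore: mutate each one.
        loser["hparams"] = {k: v * rng.choice(perturb) for k, v in parent["hparams"].items()}
    return ranked


if __name__ == "__main__":
    rng = random.Random(0)
    population = [{"hparams": {"lr": rng.uniform(1e-4, 0.1)}, "score": 0.0} for _ in range(8)]

    def evaluate(hp):
        return -abs(hp["lr"] - 0.01)  # toy fitness: best when lr is near 0.01

    for generation in range(30):
        best = pbt_generation(population, evaluate, rng)[0]
    print(best["hparams"], best["score"])
```

Because the best agents are never overwritten in a round, the top score in this toy can only stay the same or improve from one generation to the next.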

The researchers measured playing strength with the Elo rating known from chess tournaments. The AIs were particularly effective against opponents of nearly equal strength.
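For reference, the standard Elo formulas from chess work as follows: the expected score of player A against B is 1 / (1 + 10^((R_B - R_A) / 400)), and after a game each rating moves by K times the difference between the actual and the expected result. The sketch below uses the common chess value K = 32 as an assumption; the article does not say how Deep Mind computed ratings for teams.

```python
def expected_score(r_a, r_b):
    """Probability-like expected score of A against B under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400.0))


def update_elo(r_a, r_b, score_a, k=32.0):
    """Return new ratings after one game; score_a is 1 (win), 0.5 (draw) or 0 (loss)."""
    e_a = expected_score(r_a, r_b)
    new_a = r_a + k * (score_a - e_a)
    new_b = r_b + k * ((1.0 - score_a) - (1.0 - e_a))
    return new_a, new_b


if __name__ == "__main__":
    print(expected_score(1500, 1500))    # 0.5: evenly matched
    print(update_elo(1500, 1500, 1.0))   # (1516.0, 1484.0)
```

Between evenly matched opponents the expected score is 0.5, so the outcome is least predictable and each result moves the ratings noticeably.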

Tournament with and against humans

After training against other AIs, Deep Mind organized a tournament in which the AI competed against experienced human players. The humans managed to beat the AI teams in only a few matches.

Deep Mind also had mixed teams of humans and AIs compete. They played surprisingly well together. In a survey, human players even said that the AI players were more cooperative than their human counterparts.

Because the human players saw the game at a higher resolution than the AIs, they were considerably better at long range. At short distances, however, the AI's accuracy and reaction time were significantly better than human levels. Deep Mind artificially reduced both to human levels without retraining the AI. Even then, the AIs still beat the humans, though by a smaller margin.
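One way to handicap an agent without retraining it is to wrap its unchanged policy: queue each action for a few frames to simulate reaction time and add random noise to its aim. The sketch below is an assumption about how such a handicap could look, not Deep Mind's method; `HandicappedAgent`, the `aim`/`fire` action format, the delay in frames and the Gaussian aim noise are all hypothetical.

```python
import random
from collections import deque


class HandicappedAgent:
    """Hypothetical wrapper adding reaction delay and aiming noise to a fixed policy."""

    def __init__(self, policy, delay_frames, aim_noise, seed=0):
        self.policy = policy                       # trained policy, left untouched
        self.queue = deque([None] * delay_frames)  # None means "no action issued yet"
        self.aim_noise = aim_noise                 # std. dev. of aim error (degrees)
        self.rng = random.Random(seed)

    def act(self, observation):
        action = self.policy(observation)  # assumed format: {"aim": float, "fire": bool}
        noisy = dict(action, aim=action["aim"] + self.rng.gauss(0.0, self.aim_noise))
        self.queue.append(noisy)
        return self.queue.popleft()        # action decided delay_frames frames ago


if __name__ == "__main__":
    def policy(obs):
        return {"aim": float(obs), "fire": True}

    agent = HandicappedAgent(policy, delay_frames=3, aim_noise=0.0)
    print([agent.act(t) for t in range(5)])  # three Nones, then the delayed actions
```

Since only the wrapper changes, the same trained network can be evaluated at different delay and noise settings.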

(jme)
