Roundup Hello, welcome to this week's roundup in AI. The machines are sending us spooky messages through Google Translate, Facebook is recruiting more academics to start new labs, and some fool decided to climb onto a driverless car in California.

AI sends us secret apocalyptic messages: What's that, Google? Jesus will return when the Doomsday Clock strikes twelve, you say? Hmm.

Folks recently spotted weird, garbled messages when trying to translate seemingly harmless words using Google Translate.

If you type, for example, "dog" 18 times and translate it from Yoruba into English, Google gives: "Doomsday Clock has three minutes to twelve o'clock. We live characters and dramatic developments in the world, which indicate that we are coming closer and closer to the end times and the return of Jesus."

O … kay, Google …

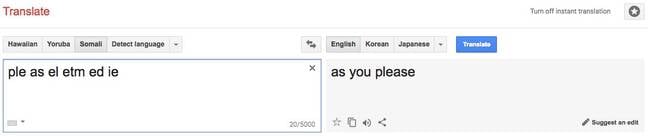

That is not the only glitch. Add odd spaces between words, and Google Translate goes haywire. Some translations are rather dark. Ask it to translate "ple as el m ém edie" from Somali into English, and you will receive the mysterious message "As you please."

Google has revamped its online translation service using a neural machine translation model, an AI system that encodes and decodes words between different languages. It cannot come up with something it has never been exposed to before. Judging by some of the automatic translations, it is quite possible that the model was trained on passages from Bibles and similar material.

This makes sense because the Christian Bible is probably one of the most translated texts in the world. In other words, it is a good idea to train an AI on texts that have been translated into several languages, so that the neural network can connect words in different languages by their common meaning. The Bible, available in many languages, is a relatively good example of such a text.

Problems are more likely to appear with obscure languages because the training data for, say, Yoruba to English and Somali to English is likely to be rather sparse. Thus, whatever data sets Google uses (Bibles, novels, books, crawled web pages, etc.), there will not be much for the machine learning model to go on. When it is confronted with passages that are difficult to translate, the underlying training data is likely to surface in odd and unexpected ways.

Nobody, not even Google's engineers, really knows how to decode these neural networks, so weird things like this are always possible and will continue to happen. Either way, it seems that Google has adjusted its translation code to stop it from spitting out at least some of the scary omens, for now.

Autonomous vehicle accident reports: GM Cruise recently filed a report with the California DMV after a pedestrian climbed onto the hood of one of its test cars at a red light.

It's interesting to see what one of these reports looks like. "A Cruise autonomous vehicle ("Cruise AV") was involved in an incident on Sutter Street, at the intersection of Sansome Street, when a jaywalking pedestrian approached the Cruise AV and intentionally climbed onto the vehicle's hood while the Cruise AV was stopped at a red light, resulting in a dent in the hood. The pedestrian then left and walked away."

A new robotics lab for Facebook: Facebook announced that more academics are joining the social media giant to open research centers, including one for robotics.

Jessica Hodgins, professor of robotics at Carnegie Mellon University, will split her time between academia and leading a new Facebook AI Research lab in Pittsburgh. She is joined by Abhinav Gupta, a robotics professor also at Carnegie Mellon. The team will focus on "robotics, lifelong learning systems that learn continuously for years, teaching machines to reason, and artificial intelligence in the service of creativity," according to a blog post.

Luke Zettlemoyer, an associate professor specializing in natural language processing at the University of Washington, is also among the academics signing up, and joins FAIR's lab in Seattle. Andrea Vedaldi, associate professor at Oxford University, and Jitendra Malik will both be doing computer vision research for FAIR, in London and Palo Alto.

OpenAI launches a new Dota challenge: OpenAI has announced another contest pitting its OpenAI Five bots against former professional Dota players.

OpenAI has slowly increased the difficulty of the challenge. At first, it was a 1v1 mirror match, where the opposing heroes had to be the same. Last month, OpenAI Five won 5v5 mirror matches.

Now, OpenAI wants its bots to face semi-pros with even fewer restrictions. There will be a pool of 18 heroes to choose from and no mirror match. Some items, such as Divine Rapier and Bottle, are still banned, and the bots will not be able to use Scan, an ability that lets players detect nearby enemies.

The bots' reaction time has also been increased from 80ms to 200ms so that they have less of an edge. But it seems they will still have the huge advantage of being able to see the whole map at once, which humans cannot do because they have to manually move their heroes around the map.

The match will take place at OpenAI's office in San Francisco on August 5th.

Speaking of OpenAI … The org published a reversible generative model, called Glow, described here with open-source code here. It can be used, for example, to alter attributes such as smiles, signs of age, eye size and hair color in photos of faces.
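For readers wondering what "reversible" means here, the toy sketch below shows an affine coupling layer, one of the invertible building blocks that flow-based models such as Glow stack together. It is an illustration only, not OpenAI's code: `toy_scale` and `toy_shift` are hypothetical stand-ins for the neural networks a real model would learn, and the input is a four-number list rather than an image.

```python
import math

def toy_scale(h):
    # Hypothetical stand-in for a learned network producing log-scales.
    return [math.tanh(v) for v in h]

def toy_shift(h):
    # Hypothetical stand-in for a learned network producing shifts.
    return [0.5 * v for v in h]

def coupling_forward(x):
    """Map x to y: the first half passes through unchanged and
    parameterizes an affine transform of the second half."""
    half = len(x) // 2
    x_a, x_b = x[:half], x[half:]
    log_s, t = toy_scale(x_a), toy_shift(x_a)
    y_b = [xb * math.exp(ls) + ti for xb, ls, ti in zip(x_b, log_s, t)]
    return x_a + y_b

def coupling_inverse(y):
    """Recover x from y exactly: the untouched half lets us recompute
    the same scales and shifts, then undo the affine transform."""
    half = len(y) // 2
    y_a, y_b = y[:half], y[half:]
    log_s, t = toy_scale(y_a), toy_shift(y_a)
    x_b = [(yb - ti) * math.exp(-ls) for yb, ls, ti in zip(y_b, log_s, t)]
    return y_a + x_b

x = [0.2, -1.0, 0.7, 1.5]          # even-length toy input
y = coupling_forward(x)            # "encode"
x_rec = coupling_inverse(y)        # "decode" back to the input
```

Because every step can be undone exactly, a model built this way can map a photo to a latent code, nudge that code along a direction associated with, say, smiling, and map it back to an edited photo.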

Xilinx, a hardware company known for its FPGAs, has acquired DeepPhi Tech.

Financial details of the acquisition were not disclosed. DeepPhi has been partnering with Xilinx to adapt its FPGA chips to accelerate the training and inference steps for neural networks.

"FPGA-based deep learning accelerators meet most requirements," as was explained to our sister site The Next Platform. "They have acceptable power and performance, they support a custom architecture and have high bandwidth on the chip and are very reliable."

DeepPhi seeks to optimize long short-term memory networks and convolutional neural networks for natural language processing and computer vision tasks.

You can read more about this here. ®
